I doubt I am the only person crazy enough to attempt this… TBH, I dare not look. It was fun.

My 10-year-old son showed an interest in building a Flappy Bird game, albeit in Scratch and with characters other than the bird. To encourage him, I started compiling the relevant images and got the crazy idea to have a go in C# first. A few hours later, the basic (user-controlled) game was in place.

I’ll ‘fess up that I was never interested in the game, so if you don’t find it authentic, please don’t scream at me. Also, because I am not a fan, I didn’t implement the gold/silver/bronze medals for high scores. If you want to complete it, please feel free to modify the code on GitHub.

After proving the concept, and finding it irritating to get the bird to miss the pipes, I thought I would hook up a neural network to control the bird.

If you want to play in a user “test” harness and feel equally frustrated, go to GitHub.

The ML approach was fun and worked the same evening! Many hours were spent afterwards adding what I consider cool diagnostics/progress.

If you’re interested in the UI for making a Flappy game, I’ve added it here. I’ve settled into a style where graphics are moved out of the main blog. I hope folks prefer that; if not, please let me know in the comments.

If we break down the game, we have:

- scenery; the fact that it scrolls is irrelevant, as it doesn’t affect the game

- random pipes that move right to left

- we need to add random pipes as we go along or compute them upfront, increasing the difficulty by reducing the gap between pipes

- gravity pulls Flappy down unless something pushes it up

- collision detection with pipes
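The “compute them upfront” option can be sketched in a few lines. This is my own minimal illustration, not the GitHub code: `PipeColumn`, `CourseGenerator` and all the numbers (gap height, maximum gap shift, spacing) are assumptions. It moves each gap by a bounded random amount so the next gap stays reachable, and shrinks the horizontal spacing between columns to raise the difficulty.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: PipeColumn, CourseGenerator and every tuning number are assumptions, not the GitHub code.
internal readonly record struct PipeColumn(int X, int GapTopY, int GapHeight);

internal static class CourseGenerator
{
    /// <summary>Builds a whole course of pipe columns up front from a seed.</summary>
    internal static List<PipeColumn> Generate(int seed, int courseLengthPx = 20000, int playfieldHeightPx = 294)
    {
        Random random = new(seed);
        List<PipeColumn> course = new();

        const int gapHeight = 90;      // vertical opening Flappy must fly through (assumed)
        const int maxGapShiftPx = 70;  // limits how far the gap moves between columns, so it stays reachable (assumed)
        const int minSpacingPx = 140;  // closest two columns may get (assumed)
        int spacingPx = 260;           // starting horizontal distance between columns (assumed)

        int gapTop = (playfieldHeightPx - gapHeight) / 2; // first gap starts mid-screen

        for (int x = 400; x < courseLengthPx; x += spacingPx)
        {
            // shift the gap up or down by a bounded random amount, keeping it inside the playfield
            gapTop += random.Next(-maxGapShiftPx, maxGapShiftPx + 1);
            gapTop = Math.Clamp(gapTop, 10, playfieldHeightPx - gapHeight - 10);

            course.Add(new PipeColumn(x, gapTop, gapHeight));

            // increase the difficulty: each new column is a little closer than the last
            spacingPx = Math.Max(minSpacingPx, spacingPx - 2);
        }

        return course;
    }
}
```

Seeding `Random` means the same seed gives the same course (on the same .NET runtime), which can be handy when comparing brains on identical pipes.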

If we ignore the idea of reinforcement learning, we have a simple algorithm:

- Wherever the bird is, find the “gap” and head towards it.

- To drop, don’t flap; to go up, flap like your life depended on not splatting into a pipe.

- Ideally, plan ahead.
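Without any ML, the three rules above can be sketched as a tiny controller. `GapSeeker`, its parameters and the 10-frame look-ahead are hypothetical, chosen only to illustrate “head for the gap” plus a crude form of planning ahead. It assumes screen coordinates, where y grows downwards and a positive vertical speed means falling (as in Flappy’s `Move()` method).

```csharp
// Hypothetical rule-based controller; names and the look-ahead value are assumptions.
// Screen coordinates: y grows downwards, so "up" means a smaller y and positive speed means falling.
internal static class GapSeeker
{
    /// <summary>Returns true if Flappy should flap this frame.</summary>
    /// <param name="flappyY">Flappy's vertical position in pixels.</param>
    /// <param name="verticalSpeed">Pixels per frame; positive = falling.</param>
    /// <param name="gapCentreY">Centre of the gap in the next pipe column.</param>
    /// <param name="lookAheadFrames">How many frames ahead to "plan".</param>
    internal static bool ShouldFlap(float flappyY, float verticalSpeed, float gapCentreY, int lookAheadFrames = 10)
    {
        // plan ahead: where will Flappy be if it keeps its current speed?
        float predictedY = flappyY + verticalSpeed * lookAheadFrames;

        // predicted to end up below the gap centre: flap; otherwise don't flap and let gravity drop it
        return predictedY > gapCentreY;
    }
}
```

The look-ahead is what stops the over-flapping problem: a bird already rising towards the gap is predicted to reach it, so it stops flapping early.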

The skill, when users play it, is pressing the “flap” button often enough whilst being aware that it accelerates the bird upwards; pressing it too much means the bird takes a while to start plummeting again, invariably causing a collision with a pipe.

If you want to see the ML Flappy in all its glory, go to GitHub.

We’re not going to cover the “user” test harness, as this blog is about AI/ML, but the two apps have much in common apart from the cool ML features and the controls.

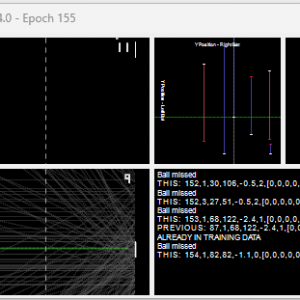

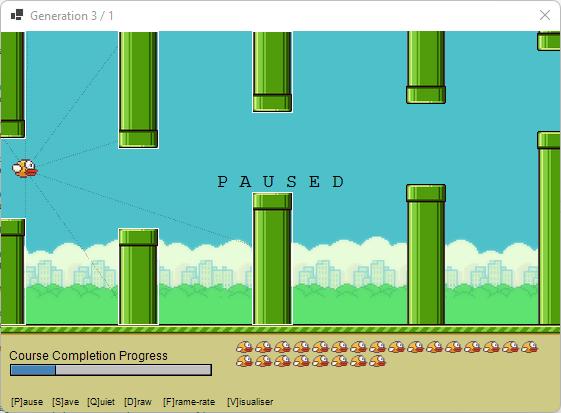

A lot is going on, and just so you believe me, here’s Flappy with its brain exposed. (Sorry for the large GIF.)

Did that pique your interest?

Flappy Pipe Detection Sensor

I’m afraid Flappy isn’t using magic; it uses sensors (illustrated below). You may have noticed in the above that things are moving in its brain. I know, “moving neurons”: I’ve invented something nature hasn’t thought of. The right-hand neurons are the “sensors”, and they are intentionally rendered relative to the pipes they sense.

The whole idea of visualising a brain in action appeals to me. Flappy’s live brain dump isn’t perfect, but it is an attempt to help understand what it’s doing. The thicker the line, the bigger the weighting; the colour tells you the sign of the weighting. The neurons are coloured based on their output.

I don’t like that a lot of my apps (driving, missiles, sheep, this one) require sensors like this, but it is efficient.

My tribute to Pong reads the whole screen, but that is only possible because there are no distractions (just a ball). If I were to make the ML see the whole screen, I would paint a silhouette containing the pipes, floor and ceiling, or, cheating, filled boxes where Flappy is allowed to head. It would require a lot of neurons (170495 inputs, 1 output, and more than 170495 hidden neurons, possibly in several layers). Sure, the big players have a billion parameters, but I don’t think plain old C# without a GPU is going to cut it.
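A quick back-of-the-envelope check on those numbers, assuming a single fully connected hidden layer the same size as the input (170495 neurons), shows why. The weights between the input and that one layer alone come to:

$$170\,495 \times 170\,495 = 29\,068\,545\,025 \approx 2.9 \times 10^{10}\ \text{weights}$$

That is roughly 29 billion weights before adding any biases or further layers, around 29 times a billion-parameter model.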

When Read() is called, it is given the pipes in the immediate vicinity as well as where Flappy is on the screen. The white rectangles around the pipes above are the pipes’ bounding boxes. For each sensor line, Flappy checks whether it intersects an edge of a pipe’s bounding box (edge by edge, plus the floor and ceiling). If it does, the distance from Flappy to the nearest intersection determines the value returned for that sensor.

```csharp
using System.Drawing.Drawing2D;

using FlappyBirdAI.Utils;

namespace FlappyBirdAI.Sensor;

/// <summary>
/// PipeSensor: radiates sensor lines from Flappy and reports how close pipes, the floor and the ceiling are along each line.
/// </summary>
internal class PipeSensor
{
    /// <summary>
    /// Stores the "lines" that contain pipe.
    /// </summary>
    private readonly List<PointF[]> wallSensorTriangleTargetIsInPolygonsInDeviceCoordinates = new();

    /// <summary>
    /// How many sample points it radiates to detect a pipe.
    /// </summary>
    internal static int s_samplePoints = 7;

    // Angle of the first sensor line (input 0 to the neural network). Angles are in screen coordinates:
    // 0 = straight ahead (right), -90 = up, 90 = down.
    internal static double s_fieldOfVisionStartInDegrees = -125;

    // Angle between adjacent sensor lines (calculated in Read()).
    internal static double s_sensorVisionAngleInDegrees = 0;

    /// <summary>
    /// Detects pipes using a sensor.
    /// </summary>
    /// <param name="pipesOnScreen">Bounding boxes of the pipes near Flappy.</param>
    /// <param name="flappyLocation">Where Flappy is on the screen.</param>
    /// <param name="heatSensorRegionsOutput">One value per sensor line: 0 = nothing within range, nearer 1 = closer.</param>
    /// <returns>The same values as <paramref name="heatSensorRegionsOutput"/>.</returns>
    internal double[] Read(List<Rectangle> pipesOnScreen, PointF flappyLocation, out double[] heatSensorRegionsOutput)
    {
        wallSensorTriangleTargetIsInPolygonsInDeviceCoordinates.Clear();

        heatSensorRegionsOutput = new double[s_samplePoints];

        // angle between one sensor line and the next
        s_sensorVisionAngleInDegrees = (float)Math.Abs(s_fieldOfVisionStartInDegrees * 2f - 1) / s_samplePoints;

        // start at the first sensor line
        double sensorAngleToCheckInDegrees = s_fieldOfVisionStartInDegrees;

        // how far it looks for a pipe
        double DepthOfVisionInPixels = 300;

        for (int LIDARangleIndex = 0; LIDARangleIndex < s_samplePoints; LIDARangleIndex++)
        {
            double LIDARangleToCheckInRadiansMin = MathUtils.DegreesInRadians(sensorAngleToCheckInDegrees);

            // end point of this sensor line, DepthOfVisionInPixels away from Flappy
            PointF pointSensor = new((float)(Math.Cos(LIDARangleToCheckInRadiansMin) * DepthOfVisionInPixels + flappyLocation.X),
                                     (float)(Math.Sin(LIDARangleToCheckInRadiansMin) * DepthOfVisionInPixels + flappyLocation.Y));

            heatSensorRegionsOutput[LIDARangleIndex] = 1; // no target in this direction

            PointF intersection = new();

            // check each "target" rectangle (marking the border of a pipe) and see if it intersects with the sensor.
            foreach (Rectangle boundingBoxAroundPipe in pipesOnScreen)
            {
                // rectangle is bounding box for the pipe
                Point topRight = new(boundingBoxAroundPipe.Right, boundingBoxAroundPipe.Top);
                Point topLeft = new(boundingBoxAroundPipe.Left, boundingBoxAroundPipe.Top);
                Point bottomLeft = new(boundingBoxAroundPipe.Left, boundingBoxAroundPipe.Bottom);
                Point bottomRight = new(boundingBoxAroundPipe.Right, boundingBoxAroundPipe.Bottom);

                // check left side of pipe
                FindIntersectionWithPipe(flappyLocation, heatSensorRegionsOutput, DepthOfVisionInPixels, LIDARangleIndex, pointSensor, ref intersection, topLeft, bottomLeft);

                // check the bottom side of pipe (pipes coming out of ceiling)
                FindIntersectionWithPipe(flappyLocation, heatSensorRegionsOutput, DepthOfVisionInPixels, LIDARangleIndex, pointSensor, ref intersection, bottomLeft, bottomRight);

                // check the top of pipe (pipes coming out of floor)
                FindIntersectionWithPipe(flappyLocation, heatSensorRegionsOutput, DepthOfVisionInPixels, LIDARangleIndex, pointSensor, ref intersection, topLeft, topRight);

                // we check the right side, even though we're looking forwards, because some proximity sensors point backwards
                FindIntersectionWithPipe(flappyLocation, heatSensorRegionsOutput, DepthOfVisionInPixels, LIDARangleIndex, pointSensor, ref intersection, topRight, bottomRight);
            }

            // detect where the floor is
            FindIntersectionWithPipe(flappyLocation, heatSensorRegionsOutput, DepthOfVisionInPixels, LIDARangleIndex, pointSensor, ref intersection, new(0, 294), new(800, 294));

            // check where the ceiling is
            FindIntersectionWithPipe(flappyLocation, heatSensorRegionsOutput, DepthOfVisionInPixels, LIDARangleIndex, pointSensor, ref intersection, new(0, 0), new(800, 0));

            // invert, so 0 = nothing within range and values nearer 1 = closer
            heatSensorRegionsOutput[LIDARangleIndex] = 1 - heatSensorRegionsOutput[LIDARangleIndex];

            // only add to the sensor visual if we have detected something
            if (heatSensorRegionsOutput[LIDARangleIndex] > 0 && (intersection.X != 0 || intersection.Y != 0)) wallSensorTriangleTargetIsInPolygonsInDeviceCoordinates.Add(new PointF[] { flappyLocation, intersection });

            // move on to the next sensor line
            sensorAngleToCheckInDegrees += s_sensorVisionAngleInDegrees;
        }

        return heatSensorRegionsOutput;
    }

    /// <summary>
    /// Detects where the line from Flappy to the sensor limit intersects a pipe edge, returning "intersection" where they meet.
    /// </summary>
    private static void FindIntersectionWithPipe(PointF flappyLocation, double[] heatSensorRegionsOutput, double DepthOfVisionInPixels, int LIDARangleIndex, PointF pointSensorLimit, ref PointF intersection, Point pointPipeLine1, Point pointPipeLine2)
    {
        // The sensor line runs from Flappy to pointSensorLimit; pointPipeLine1 -> pointPipeLine2 is one edge
        // of a pipe's bounding box (or the floor/ceiling). Keep the closest hit for this sensor angle.
        if (MathUtils.GetLineIntersection(flappyLocation, pointSensorLimit, pointPipeLine1, pointPipeLine2, out PointF intersectionLeftSide))
        {
            // distance to the hit as a fraction of the sensor range (0 = touching, 1 = at the limit)
            double mult = MathUtils.DistanceBetweenTwoPoints(flappyLocation, intersectionLeftSide).Clamp(0F, (float)DepthOfVisionInPixels) / DepthOfVisionInPixels;

            // this "surface" intersects closer than prior ones at the same angle?
            if (mult < heatSensorRegionsOutput[LIDARangleIndex])
            {
                heatSensorRegionsOutput[LIDARangleIndex] = mult; // closest
                intersection = intersectionLeftSide;
            }
        }
    }

    /// <summary>
    /// Draws the radiating sensor lines, showing where a pipe was detected.
    /// </summary>
    /// <param name="graphics">Graphics surface to draw on.</param>
    internal void DrawWhereTargetIsInRespectToSweepOfHeatSensor(Graphics graphics)
    {
        using Pen pen = new(Color.FromArgb(60, 40, 0, 0));
        pen.DashStyle = DashStyle.Dot;

        // draw the heat sensor
        foreach (PointF[] point in wallSensorTriangleTargetIsInPolygonsInDeviceCoordinates)
        {
            graphics.DrawLines(pen, point);
        }
    }
}
```
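Read() leans on MathUtils.GetLineIntersection(), which isn’t listed in this post. For anyone rebuilding it, here is a minimal sketch of a standard segment-segment intersection test with the same shape. `SegmentMath` is a hypothetical stand-in, and the real helper may differ (for example, in how it treats parallel lines).

```csharp
using System;
using System.Drawing;

// Hypothetical stand-in for MathUtils.GetLineIntersection (not shown in this post); the real one may differ.
internal static class SegmentMath
{
    /// <summary>
    /// Returns true if segment a1->a2 (the sensor line) crosses segment b1->b2 (a pipe edge), and where.
    /// </summary>
    internal static bool GetLineIntersection(PointF a1, PointF a2, PointF b1, PointF b2, out PointF intersection)
    {
        intersection = PointF.Empty;

        float rX = a2.X - a1.X, rY = a2.Y - a1.Y; // direction of the sensor line
        float sX = b2.X - b1.X, sY = b2.Y - b1.Y; // direction of the pipe edge

        float denominator = rX * sY - rY * sX;    // 2D cross product r x s
        if (Math.Abs(denominator) < 1e-6f) return false; // parallel or collinear: treated as "no hit"

        float qpX = b1.X - a1.X, qpY = b1.Y - a1.Y;
        float t = (qpX * sY - qpY * sX) / denominator; // how far along the sensor line (0..1)
        float u = (qpX * rY - qpY * rX) / denominator; // how far along the pipe edge (0..1)

        if (t < 0 || t > 1 || u < 0 || u > 1) return false; // the infinite lines cross, but not within both segments

        intersection = new PointF(a1.X + t * rX, a1.Y + t * rY);
        return true;
    }
}
```

With the default 7 sample points, the sensor angles are -125, -89.1, -53.3, -17.4, 18.4, 54.3 and 90.1 degrees, so no sensor line is exactly horizontal or vertical and the parallel case never arises against the axis-aligned pipe edges, floor or ceiling.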

Controlling Flappy

Before we dig into the detail, let’s be clear on the basic process. Flappy has a mechanism to request flight upwards and, if desperate, will flap downwards too. You could turn the latter off and, assuming the course is “possible”, it will still work. Like the user version, it is also dragged down by gravity.

Flappy responds to its sensors. It “somehow” learns to avoid hitting the pipes and to move into the gaps. One observation of how it typically achieves success is that it returns to the vertical centre when it doesn’t have to avoid anything, giving itself time to react. This is partly an artefact of Flappy’s limited vision. As a human, you would be consciously planning ahead, getting ready to change direction.

As with AI in general, Flappy has no idea of self and no idea it shouldn’t hit the pipes; it is clueless.

I simply discard all the Flappy Birds that fail to do what I want, and after a few hundred generations, Flappy finds a way to complete the task. Despite all the cool visuals like the “brain”, it’s difficult to see why it behaves the way it does. I don’t trust neural networks unless there is some means of seeing what’s happening.

Flappy’s .Move() method is:

```csharp
/// <summary>
/// Move Flappy.
/// </summary>
internal void Move()
{
    if (!FlappyWentSplat) UseAItoMoveFlappy();

    // Flappy does not have an anti-gravity module. So we apply gravity acceleration (-). It's not "9.81" because we
    // don't know how much Flappy weighs or its wing thrust ratio.
    verticalAcceleration -= 0.001f;

    // Prevent Flappy falling too fast. Too early for terminal velocity, but makes the game "playable".
    if (verticalAcceleration < -1) verticalAcceleration = -1;

    // Apply acceleration to the speed.
    verticalSpeed -= verticalAcceleration;

    // Ensure Flappy doesn't go too quickly (makes it unplayable).
    verticalSpeed = verticalSpeed.Clamp(-2, 3);

    verticalPositionOfFlappyPX += verticalSpeed;

    // Stop Flappy going off screen (top/bottom).
    VerticalPositionOfFlappyPX = VerticalPositionOfFlappyPX.Clamp(0, 285);

    if (FlappyWentSplat) return; // no collision detection required.

    // if the bird collided with a pipe, we set a flag (prevents control) and ensure it falls out of the sky.
    if (ScrollingScenery.FlappyCollidedWithPipe(this, out s_debugRectangeContainingRegionFlappyIsSupposedToFlyThru))
    {
        FlappyWentSplatAgainstPipe();
    }
}
```

It always subtracts 0.001 from the acceleration (to drag it down). The neural network applies an upward or downward acceleration based on the inputs, so it flaps up or down.
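One consequence of that code is easy to miss: gravity is subtracted from the acceleration, not the speed, so a bird that never flaps doesn’t just fall at a steady rate; the pull keeps building. The sketch below replays only the physics lines of Move() with the network’s output fixed at 0 (the `FlappyPhysics` name is mine) and counts the frames until the speed hits its clamp of 3 pixels per frame.

```csharp
using System;

// Sketch: the physics lines from Move(), with the neural network's output assumed to be 0 (no flapping).
internal static class FlappyPhysics
{
    /// <summary>Counts frames until a free-falling Flappy reaches the speed clamp (3 px/frame).</summary>
    internal static int FramesUntilMaxFallSpeed()
    {
        float verticalAcceleration = 0f;
        float verticalSpeed = 0f;
        int frames = 0;

        while (verticalSpeed < 3f)
        {
            frames++;
            verticalAcceleration -= 0.001f;                           // gravity, as in Move()
            if (verticalAcceleration < -1) verticalAcceleration = -1; // same cap as Move()
            verticalSpeed -= verticalAcceleration;                    // acceleration feeds the speed
            verticalSpeed = Math.Clamp(verticalSpeed, -2f, 3f);       // same limits as Move()
        }

        return frames;
    }
}
```

With these constants, it takes 77 frames: the speed after n frames is 0.0005 × n(n+1) pixels per frame, so 76 frames gives about 2.93 and the 77th tips it over 3. Because the bird carries that much momentum, it makes sense that the network is also given verticalSpeed and verticalAcceleration.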

Flappy’s Neural Network (Perceptron + Mutation: size 24)

Input: PipeSensor.s_samplePoints + 2 (that is, one per sensor, plus verticalSpeed and verticalAcceleration)

Hidden: PipeSensor.s_samplePoints + 2 (one per input)

Output: 1 (amount of flapping +/-)
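With the default s_samplePoints = 7, that is 9 inputs, 9 hidden neurons and 1 output. Assuming every neuron is fully connected to the layer before it and has its own bias, the trainable parameters add up to:

$$\underbrace{9 \times 9}_{\text{input}\to\text{hidden weights}} + \underbrace{9}_{\text{hidden biases}} + \underbrace{9 \times 1}_{\text{hidden}\to\text{output weights}} + \underbrace{1}_{\text{output bias}} = 100$$

This matches the 100 numbers (81 + 9 + 9 + 1) that appear in the formula in “Spoiling The Magic”.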

In the code below, we request the pipes in the immediate vicinity of Flappy, pass them to the sensor and receive distances. In addition, we provide speed and acceleration.

You might be thinking that my missiles didn’t need velocity or acceleration parameters, so why would Flappy? Because it doesn’t rotate; it can’t rotate out of trouble.

Think about the difference:

- If you can always rotate towards an object and move forward, where you start or are currently is irrelevant. You’re constantly adjusting to the target.

- When you have to move up or down, and you already have a velocity, you could overdo it and speed into the object you’re trying to avoid.

Don’t take my word for it; set both to 0 and watch it perform very unsuccessfully. You’ve probably guessed I have already done that.

```csharp
/// <summary>
/// Provide AI with the sensor outputs, and have it provide a vertical acceleration.
/// </summary>
private void UseAItoMoveFlappy()
{
    List<Rectangle> rectanglesIndicatingWherePipesWerePresent = ScrollingScenery.GetClosestPipes(this, 300);

    // sensors around Flappy detect a pipe
    sensor.Read(rectanglesIndicatingWherePipesWerePresent, new PointF(HorizontalPositionOfFlappyPX + 14, VerticalPositionOfFlappyPX), out double[] proximitySensorRegionsOutput);

    // we supplement with existing speed and acceleration
    List<double> d = new(proximitySensorRegionsOutput)
    {
        verticalSpeed / 3,
        verticalAcceleration / 3
    };

    double[] outputFromNeuralNetwork = NeuralNetwork.s_networks[Id].FeedForward(d.ToArray()); // process inputs

    // neural network provides "thrust"/"wing flapping" to give acceleration up or down.
    verticalAcceleration += (float)outputFromNeuralNetwork[0];

    // if enabled, we track telemetry and write it for birds that complete the course.
    telemetry.Record(d, outputFromNeuralNetwork[0], rectanglesIndicatingWherePipesWerePresent);
}
```

Lastly, you’ll notice “telemetry.Record()”. I have built in a telemetry class that writes the data used during training to a file. Set telemetry.c_recordTelemetry = true, and it will write telemetry as a .csv for each Flappy to c:\temp.

Please note: the files are 5 MB or so (depending on the number of sensors).

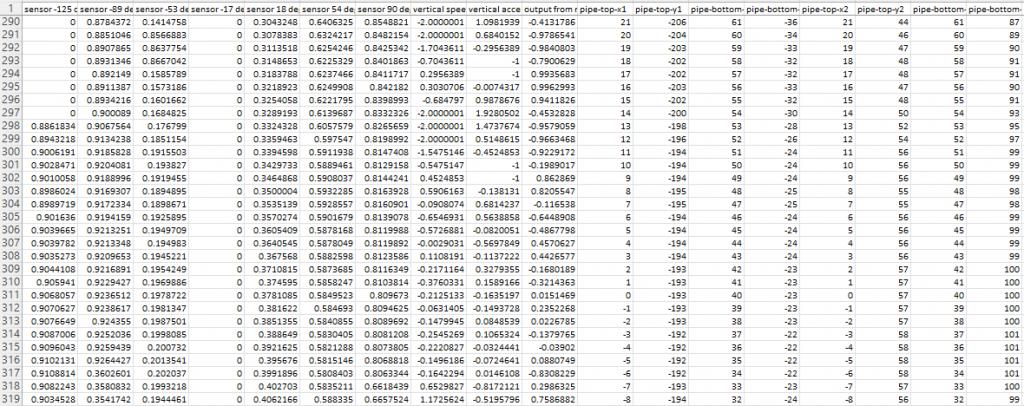

The telemetry is quite interesting; the format is as follows (with 7 sample points):

`sensor -125 degrees,sensor -89 degrees,sensor -53 degrees,sensor -17 degrees,sensor 18 degrees,sensor 54 degrees,sensor 90 degrees,vertical speed,vertical acceleration,output from neural Network,pipe-top-x1,pipe-top-y1,pipe-bottom-x1,pipe-bottom-y1,pipe-top-x2,pipe-top-y2,pipe-bottom-x2,pipe-bottom-y2,pipe-top-x3,pipe-top-y3,pipe-bottom-x3,pipe-bottom-y3,pipe-top-x4,pipe-top-y4,pipe-bottom-x4,pipe-bottom-y4`

Flappy is at the threshold of a pipe when pipe-top-x1 is 0.

This is useful if you want to analyse how Flappy saw the world.
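If you want to rebuild that header (say, to analyse a run with a different number of sample points), the sensor angles can be reproduced with the same step calculation PipeSensor.Read() uses. A sketch follows; `TelemetryHeader` and its parameters are hypothetical (the telemetry class itself isn’t listed here), and rounding each angle to whole degrees is my assumption based on the header above.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper that rebuilds the telemetry CSV header; the angle maths copies PipeSensor.Read().
internal static class TelemetryHeader
{
    internal static string Build(int samplePoints = 7, double fieldOfVisionStartInDegrees = -125, int pipeColumns = 4)
    {
        List<string> columns = new();

        // same step calculation as PipeSensor.Read()
        double step = (float)Math.Abs(fieldOfVisionStartInDegrees * 2f - 1) / samplePoints;
        double angle = fieldOfVisionStartInDegrees;

        for (int i = 0; i < samplePoints; i++)
        {
            int wholeDegrees = (int)Math.Round(angle); // assumed: header shows whole degrees
            columns.Add("sensor " + wholeDegrees.ToString(CultureInfo.InvariantCulture) + " degrees");
            angle += step;
        }

        columns.Add("vertical speed");
        columns.Add("vertical acceleration");
        columns.Add("output from neural Network");

        // four values per pipe column: top pipe x/y, bottom pipe x/y
        for (int p = 1; p <= pipeColumns; p++)
        {
            columns.Add($"pipe-top-x{p}");
            columns.Add($"pipe-top-y{p}");
            columns.Add($"pipe-bottom-x{p}");
            columns.Add($"pipe-bottom-y{p}");
        }

        return string.Join(",", columns);
    }
}
```

With the defaults, the step is 251 / 7 ≈ 35.86 degrees, which is why the sensor columns run -125, -89, -53, -17, 18, 54, 90.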

It will take 300+ generations for Flappy to learn. I strongly suggest you press “Q” and let it learn in the background. (The “Visualiser” is not available in quiet mode; it also only works after at least one success.)

To see how many Flappy Birds went splat, refer to the Flappy indicator panel. Please note: it doesn’t update when you’re running in quiet (“Q”) mode, because that mode is designed to optimise learning, and at the rate Flappies go splat, updating the indicator would slow learning down.

Similarly, when not in quiet mode, you probably want to show progress. I always believed that Flappy Bird is endless (well, not when I am playing, of course, as I crash way too soon), but endless would not be good for learning. We need an “end”, which is approximately 20k pixels scrolled. Given it’s a long course, I added the progress bar.

Success Rates

After sufficient training, do not forget to press “S” to save the neural networks; otherwise, all your learning will be lost. There is no auto-save.

How successful it is depends on the number of sample points, the min/max angle, the difficulty (as courses differ slightly), Flappy stupidity, and how the random network initially configures itself.

I’ve seen as bad as 50% game completion. “Is that all?” you might reasonably ask. I’ve also had 99.88%. The second number below is the number of successful runs.

Whilst creating the random pipes, it endeavours to keep the vertical spacing achievable despite reducing the gaps between columns of pipes, but I cannot be sure it always succeeds. What I did see is that with one neural network configuration, it failed at the very last pipe, when there is nothing left to hit… The problem with that training was that the forward sensors returned “no pipe”, so Flappy was puzzled about where to head; the rear-facing sensor didn’t stop it from crashing into the top-right corner of the last bottom pipe.

I only discovered this after adding logic to Form1 (c_takeSnapShotWhenFinalFlappyGoesSplat = true) that shows how the best Flappy failed.

Please don’t leave it on unless you want to fill the temp folder with images; there will be one per generation.

Mutation

We use the usual simple approach:

- Create 24 brains (neural networks)
- Repeat
  - Create a new randomly designed course
  - Create 24 Flappy Birds, and assign one brain to each bird
  - While at least one Flappy Bird hasn’t gone splat and we haven’t reached the end of the course
    - Scroll the screen right-to-left
    - Move all Flappy Birds that haven’t gone splat, and detect collisions
    - Draw the scenery and all remaining Flappy Birds
  - End While
  - Rank the brains
  - Mutate the brains of the bottom-performing 50% (clone from the top 50%, then mutate)
- Until the user stops the game

I.e., a mutation occurs when the last Flappy goes splat or reaches the end.

Ranking each of the twenty-four Flappy Birds is easy.

We could overcomplicate it by giving bonus points for how accurately it passes each pipe, or for the fewest flaps. But unless the scrolling moves at a constant speed, the accuracy isn’t important as long as they don’t hit the pipe.

I settled on scoring each bird by the number of pipes it passed through, as this is an indicator of success; after all, we want to reward them for getting to the end.
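To make the “rank, clone the top 50%, mutate” step concrete, here is a minimal sketch that treats each brain as a flat array of weights and ranks by pipes passed. The `Evolution` class, the flat-array representation, and the mutation chance and size are my assumptions; the GitHub code’s NeuralNetwork class will differ.

```csharp
using System;
using System.Linq;

// Hypothetical sketch of one generation's "rank, clone, mutate" step.
// Brains are flat weight arrays; mutationChance and mutationSize are assumed values.
internal static class Evolution
{
    /// <summary>
    /// Ranks brains by pipes passed (most first), then overwrites the bottom half with mutated clones of the top half.
    /// </summary>
    internal static void NextGeneration(double[][] brains, int[] pipesPassed, Random random,
                                        double mutationChance = 0.2, double mutationSize = 0.5)
    {
        // brain indices, best first (OrderByDescending is stable, so ties keep their original order)
        int[] ranked = Enumerable.Range(0, brains.Length)
                                 .OrderByDescending(i => pipesPassed[i])
                                 .ToArray();

        int half = brains.Length / 2;

        for (int k = 0; k < half; k++)
        {
            double[] winner = brains[ranked[k]];
            double[] loser = brains[ranked[half + k]];

            // clone the winner's weights over the loser...
            Array.Copy(winner, loser, winner.Length);

            // ...then nudge some of them by a random amount in [-mutationSize, +mutationSize)
            for (int w = 0; w < loser.Length; w++)
            {
                if (random.NextDouble() < mutationChance)
                    loser[w] += (random.NextDouble() * 2 - 1) * mutationSize;
            }
        }
    }
}
```

The top half survives untouched, so the best brain found so far is never lost to a bad mutation.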

Spoiling The Magic

Controlling Flappy can be distilled down into the following formula (or similar ones; the neural network outputs code upon completing a course successfully):

```csharp
// sensor[0..6] are the pipe sensors; sensor[7] = verticalSpeed / 3; sensor[8] = verticalAcceleration / 3
double amountToFlap =
    Math.Tanh(
        (-1.4203500014264137 * Math.Tanh(
            (-0.9161000289022923 * sensor[0]) +
            (-0.611900033429265 * sensor[1]) +
            (0.7353999973274767 * sensor[2]) +
            (1.395649983547628 * sensor[3]) +
            (1.9139500185847282 * sensor[4]) +
            (-0.14895003254059702 * sensor[5]) +
            (-0.9505500338273123 * sensor[6]) +
            (-1.87949996907264 * sensor[7]) +
            (0.21834999416023493 * sensor[8]) + 0.13004999933764338)) +
        (0.4461000319570303 * Math.Tanh(
            (0.12650000280700624 * sensor[0]) +
            (-0.4555000034160912 * sensor[1]) +
            (-0.15545000787824392 * sensor[2]) +
            (-0.9326000025030226 * sensor[3]) +
            (-0.1301999855786562 * sensor[4]) +
            (0.23894996475428343 * sensor[5]) +
            (-0.42789997695945203 * sensor[6]) +
            (0.7116500071133487 * sensor[7]) +
            (-1.5234499489888549 * sensor[8]) - 1.3482999894768)) +
        (1.8684499984956346 * Math.Tanh(
            (-1.2429999504238367 * sensor[0]) +
            (0.9539999859407544 * sensor[1]) +
            (-1.2533000051043928 * sensor[2]) +
            (0.9902000176371075 * sensor[3]) +
            (1.1793999848887324 * sensor[4]) +
            (0.36714999191462994 * sensor[5]) +
            (-0.14574995008297265 * sensor[6]) +
            (2.1966000124812126 * sensor[7]) +
            (-0.8536500030895695 * sensor[8]) - 0.23089998168870807)) +
        (-0.39680001325905323 * Math.Tanh(
            (-0.7766000169795007 * sensor[0]) +
            (0.07210001489147544 * sensor[1]) +
            (0.955850007943809 * sensor[2]) +
            (0.14670000411570072 * sensor[3]) +
            (-0.6008500047028065 * sensor[4]) +
            (0.5103499982506037 * sensor[5]) +
            (-0.23594999290071428 * sensor[6]) +
            (0.22559998405631632 * sensor[7]) +
            (-0.053349982714280486 * sensor[8]) + 0.1727499896660447)) +
        (-0.7142500011250377 * Math.Tanh(
            (-0.10849996842443943 * sensor[0]) +
            (-0.2582500067073852 * sensor[1]) +
            (-0.9652000372298062 * sensor[2]) +
            (0.9802500084624626 * sensor[3]) +
            (-0.616550026461482 * sensor[4]) +
            (-0.1527500133961439 * sensor[5]) +
            (-1.0646500225993805 * sensor[6]) +
            (-0.166000010445714 * sensor[7]) +
            (-1.6115999950561672 * sensor[8]) - 0.29739995021373034)) +
        (-0.5814500080887228 * Math.Tanh(
            (1.141599980648607 * sensor[0]) +
            (-0.8232000153511763 * sensor[1]) +
            (-0.7003499884158373 * sensor[2]) +
            (-1.3289500038372353 * sensor[3]) +
            (-0.15385000238165958 * sensor[4]) +
            (1.5128999715670943 * sensor[5]) +
            (-1.1714499769732356 * sensor[6]) +
            (-0.5582999929320067 * sensor[7]) +
            (0.44350002211285755 * sensor[8]) - 1.1334499893710017)) +
        (-0.38159998157061636 * Math.Tanh(
            (-1.2336000157520175 * sensor[0]) +
            (-1.087299982085824 * sensor[1]) +
            (-1.6573000140488148 * sensor[2]) +
            (1.6813500076532364 * sensor[3]) +
            (-2.244700034148991 * sensor[4]) +
            (-0.5994500103406608 * sensor[5]) +
            (-0.5733500029891729 * sensor[6]) +
            (-0.585400010459125 * sensor[7]) +
            (0.6281500160694122 * sensor[8]) + 0.6173499904107302)) +
        (1.309150030836463 * Math.Tanh(
            (-1.7332499884068966 * sensor[0]) +
            (1.020400000968948 * sensor[1]) +
            (-0.33154998952522874 * sensor[2]) +
            (-0.8597500051546376 * sensor[3]) +
            (0.9789999946951866 * sensor[4]) +
            (1.2303500140842516 * sensor[5]) +
            (0.03680000500753522 * sensor[6]) +
            (-0.2814499754458666 * sensor[7]) +
            (0.7149500288069248 * sensor[8]) - 1.866950006224215)) +
        (-0.5792999924742617 * Math.Tanh(
            (0.6613000135403126 * sensor[0]) +
            (-0.15074998699128628 * sensor[1]) +
            (1.3415999924764037 * sensor[2]) +
            (-1.2409999808296561 * sensor[3]) +
            (-0.44244999811053276 * sensor[4]) +
            (-0.12360000982880592 * sensor[5]) +
            (-0.47014998737722635 * sensor[6]) +
            (0.712799995962996 * sensor[7]) +
            (-0.806600034236908 * sensor[8]) + 0.7416999973356724)) +
        -0.2929500201717019);
```

No, I am not joking. Test it out for yourself: comment out the #define USING_AI in Flappy.cs.

Feel free to stare at the code in bewilderment. Am I a mathematics genius? No. Sadly not. I trained Flappy, and at the end of each successful run, I had it save the neural network as code. Yes, I cheated.
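Saving a network as code is mostly string building. The sketch below is my guess at the general idea, not the author’s exporter: `NetworkToCode` and its parameter layout (`hiddenWeights[h][i]`, `hiddenBiases`, `outputWeights`, `outputBias` for a single tanh hidden layer) are assumptions. It emits the same shape of expression as the formula above.

```csharp
using System.Globalization;
using System.Text;

// Hypothetical sketch of "save the neural network as code" for a network with one tanh hidden layer.
internal static class NetworkToCode
{
    internal static string Emit(double[][] hiddenWeights, double[] hiddenBiases, double[] outputWeights, double outputBias)
    {
        var code = new StringBuilder("double amountToFlap =\nMath.Tanh(\n");

        for (int h = 0; h < hiddenWeights.Length; h++)
        {
            // outer weight * tanh(weighted sum of inputs + hidden bias)
            code.Append($"({Num(outputWeights[h])} * Math.Tanh(\n");

            for (int i = 0; i < hiddenWeights[h].Length; i++)
                code.Append($"({Num(hiddenWeights[h][i])} * sensor[{i}]) +\n");

            code.Append($"{Num(hiddenBiases[h])})) +\n");
        }

        code.Append($"{Num(outputBias)});"); // output bias closes the outer Math.Tanh
        return code.ToString();
    }

    // "R" round-trips the double, and the invariant culture guarantees a '.' decimal separator
    private static string Num(double value) => value.ToString("R", CultureInfo.InvariantCulture);
}
```

Formatting with "R" and the invariant culture matters: a comma decimal separator or a rounded digit would break the generated code or make it drift from the trained network.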

In the same way that linear regression crafts a formula, the Flappy Birds’ success boils down to fitting one: for its inputs, it has to provide the right output. A perceptron neural network boils down to:

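$$\text{output} = \tanh\left(\left(\text{input}_1 \cdot \text{weightForInput}_1 + \text{input}_2 \cdot \text{weightForInput}_2 + \dots + \text{input}_{999} \cdot \text{weightForInput}_{999}\right) + \text{bias}\right)$$

Where input1 could be a raw input or another perceptron.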

You could express a huge neural network in this format. It’s fine for feed-forward, but back-propagation requires the intermediate values that are not exposed in this form.
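For comparison, evaluating the same structure without generating code is a short loop. This is a minimal stand-in for a single-hidden-layer tanh perceptron (the post’s NeuralNetwork.FeedForward() isn’t listed, so `Perceptron` and its parameter layout are assumptions). The per-neuron `Math.Tanh(sum)` values it computes along the way are exactly the intermediates back-propagation would need and the flattened formula hides.

```csharp
using System;

// Minimal feed-forward pass for a perceptron with one tanh hidden layer and one tanh output (assumed layout).
internal static class Perceptron
{
    internal static double FeedForward(double[] inputs, double[][] hiddenWeights, double[] hiddenBiases,
                                       double[] outputWeights, double outputBias)
    {
        double outputSum = outputBias;

        for (int h = 0; h < hiddenWeights.Length; h++)
        {
            // hidden neuron: tanh(sum(input * weight) + bias)
            double sum = hiddenBiases[h];
            for (int i = 0; i < inputs.Length; i++) sum += inputs[i] * hiddenWeights[h][i];

            // its output becomes an "input" to the output neuron
            outputSum += Math.Tanh(sum) * outputWeights[h];
        }

        return Math.Tanh(outputSum);
    }
}
```

Fed the 100 numbers from the formula above (9 × 9 hidden weights, 9 hidden biases, 9 outer weights and the final bias), this computes the same amountToFlap.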

Of course, humans cannot compute this 100 times a second. Yet there are still humans who can play Flappy Bird expertly.

Ponder for a moment: humans can pick up Flappy Bird quickly. Some people are less skilled at hand-eye coordination, but it is not taxing in the slightest. Like most species, we somehow adapt in real time, usually improving with subsequent runs. Whilst not playing, we can come up with strategies and review our mistakes. Sleeping often yields better performance on future attempts.

As clever as ML (or whatever folks want to call it) seems, it’s not learning; it’s finding optimum values in a formula. Change the game even slightly, and it doesn’t adapt. Even if it did, learning by seeing every possible scenario isn’t practical, as Tesla proved with this example.

After posting the remaining applications I have prepared, I want to focus more on a framework for real learning, which I will share, of course.

I hope you found this post insightful and that it inspired you to try your hand at AI/ML games. If you have suggestions, feedback or corrections, please comment.