What makes the vehicles drive?

It requires surprisingly little: a small, simple perceptron neural network trained by genetic mutation. The number of layers and their sizes are configurable, as we’ll discuss shortly.

We create a configurable number of vehicles, all starting from the same spot on the start line.

Each vehicle moves based on the output of its own neural network. To decide on an action, the neural network needs inputs, which here take the form of one or more sensors. Again, this is configurable (how far they reach, how many there are, and at what angles). We don’t provide things like the vehicle’s angle, its location on the track, or its speed.
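To make the idea concrete, here is a minimal sketch of a configurable feed-forward network whose inputs are sensor readings and whose outputs pass through tanh (the -1..+1 range mentioned in the code comments later). The class name `TinyNetwork`, the layer layout and the random weight initialisation are assumptions for illustration; this is not the project’s `NeuralNetwork` class.

```csharp
using System;

// Hypothetical minimal perceptron; NOT the project's NeuralNetwork class.
public sealed class TinyNetwork
{
    private readonly int[] _layers;          // e.g. {5, 6, 2}: 5 sensors in, 6 hidden neurons, 2 outputs
    private readonly double[][][] _weights;  // _weights[layer][neuron][input]
    private readonly double[][] _biases;     // _biases[layer][neuron]

    public TinyNetwork(int[] layers, Random rng)
    {
        _layers = (int[])layers.Clone();
        _weights = new double[layers.Length - 1][][];
        _biases = new double[layers.Length - 1][];
        for (int l = 1; l < layers.Length; l++)
        {
            _weights[l - 1] = new double[layers[l]][];
            _biases[l - 1] = new double[layers[l]];
            for (int n = 0; n < layers[l]; n++)
            {
                _weights[l - 1][n] = new double[layers[l - 1]];
                for (int i = 0; i < layers[l - 1]; i++)
                    _weights[l - 1][n][i] = rng.NextDouble() - 0.5; // small random weight in [-0.5, 0.5)
                _biases[l - 1][n] = rng.NextDouble() - 0.5;
            }
        }
    }

    // Propagates the inputs layer by layer; tanh keeps every neuron's output within -1..+1.
    public double[] FeedForward(double[] inputs)
    {
        if (inputs.Length != _layers[0])
            throw new ArgumentException($"Expected {_layers[0]} inputs, got {inputs.Length}.");

        double[] current = inputs;
        for (int l = 0; l < _weights.Length; l++)
        {
            var next = new double[_weights[l].Length];
            for (int n = 0; n < next.Length; n++)
            {
                double sum = _biases[l][n];
                for (int i = 0; i < current.Length; i++)
                    sum += _weights[l][n][i] * current[i];
                next[n] = Math.Tanh(sum);
            }
            current = next;
        }
        return current;
    }
}
```

For example, `new TinyNetwork(new[] { 5, 6, 2 }, new Random(1))` takes five sensor values and produces two outputs (say, steering and throttle). Because the input count is just the first layer size, adding sensors only changes that number.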

Depending on the vehicle, it may have a single throttle (accelerator) or dual throttle (in the case of our tank).
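A common way to turn two throttles into motion is differential (skid) steering: the average of the two tracks drives the vehicle forward and their difference rotates it. The sketch below shows that general technique under assumptions; `TankDrive`, its parameters and the sign convention (positive rotation means turning right) are hypothetical, and the project’s tank may model this differently.

```csharp
using System;

// Hypothetical differential-drive model for a two-throttle vehicle such as a tank.
public static class TankDrive
{
    // leftThrottle/rightThrottle are expected in -1..+1 (e.g. tanh outputs) and are clamped to that range.
    // Returns the forward speed and the rotation (degrees) to apply this step.
    public static (double Forward, double RotationDegrees) Step(
        double leftThrottle, double rightThrottle, double maxSpeed, double maxTurnDegrees)
    {
        double l = Math.Clamp(leftThrottle, -1, 1);
        double r = Math.Clamp(rightThrottle, -1, 1);

        double forward = (l + r) / 2 * maxSpeed;        // both tracks forward => drive straight ahead
        double rotation = (l - r) / 2 * maxTurnDegrees; // left track faster than right => turn right (positive)
        return (forward, rotation);
    }
}
```

With both throttles at +1 the tank drives straight; with +1 and -1 it spins on the spot, which a single-throttle car cannot do.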

The “VehicleDrivenByAI” class has a “CarImplementation” property, to which we assign either CarUsingBasicPhysics or CarUsingRealPhysics, and a “Renderer” property.
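Swapping the physics model through a property is essentially the strategy pattern. The sketch below shows the shape of it under assumptions: `ICarPhysics`, `CarInputs`, `VehicleSketch` and the two toy models are hypothetical stand-ins for CarUsingBasicPhysics and CarUsingRealPhysics, whose real members aren’t shown here. Only the method name `ApplyPhysics` comes from the project.

```csharp
using System;

// Hypothetical, simplified input state (the real carInputState also carries brake, e-brake, ...).
public readonly record struct CarInputs(double Steering, double Throttle);

// Hypothetical contract standing in for whatever CarImplementation exposes.
public interface ICarPhysics
{
    double Speed { get; }
    double HeadingDegrees { get; }
    void ApplyPhysics(CarInputs inputs);
}

// Toy "basic" model: speed follows the throttle instantly, no inertia.
public sealed class BasicPhysicsSketch : ICarPhysics
{
    public double Speed { get; private set; }
    public double HeadingDegrees { get; private set; }

    public void ApplyPhysics(CarInputs inputs)
    {
        Speed = inputs.Throttle * 5;
        HeadingDegrees = (HeadingDegrees + inputs.Steering * 10 + 360) % 360;
    }
}

// Toy "more realistic" model: the throttle accelerates, drag bleeds speed, steering scales with speed.
public sealed class InertiaPhysicsSketch : ICarPhysics
{
    public double Speed { get; private set; }
    public double HeadingDegrees { get; private set; }

    public void ApplyPhysics(CarInputs inputs)
    {
        Speed = Speed * 0.95 + inputs.Throttle * 0.5;
        double grip = Math.Clamp(Speed / 5, -1, 1);
        HeadingDegrees = (HeadingDegrees + inputs.Steering * 10 * grip + 360) % 360;
    }
}

// The vehicle drives whichever model it was given; no if/else on the physics type.
public sealed class VehicleSketch
{
    public ICarPhysics CarImplementation { get; }
    public VehicleSketch(ICarPhysics physics) => CarImplementation = physics;
    public void Move(CarInputs inputs) => CarImplementation.ApplyPhysics(inputs);
}
```

The vehicle code stays identical whichever model is plugged in; only the object assigned to `CarImplementation` changes.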

VehicleDrivenByAI contains the core vehicle functionality, including the .Move() and .UpdateFitness() methods. To move, it uses its own neural network, looked up by its “Id”.

```csharp
/// <summary>
/// Called at a constant interval to simulate car moving.
/// </summary>
internal void Move(out bool lapped)
{
    lapped = false;

    // if the car has collided with the grass it is stuck, we don't move it or call its NN.
    if (HasBeenEliminated) return;

    ApplyAIoutputAndMoveTheCar();

    // track what the car was doing (intent and position/forces/acceleration etc)
    if (CarImplementation.LapTelemetryData is not null) TelemetryData.Add(CarImplementation.LapTelemetryData);

    // having moved, did the AI muck it up and drive onto the grass? If so, that's this car out of action.
    if (Renderer.Collided(this))
    {
        Eliminate(EliminationReasons.collided); // stop car as it hit the grass
        return;
    }

    if (c_hardCodedBrain) return; // gates are irrelevant, it's not learning

    // the car hasn't crashed, so let's see if it reached a gate....
    HasHitGate = false;

    // When it crosses a gate, we update the gate for the car
    // From this "progress" indicator, we judge the fitness/performance of the network for that car.
    if (LearningAndRaceManager.CarIsAtGate(this, out int gate, out lapped))
    {
        CurrentGate = gate;
        HasHitGate = true;

        // track for cars that regress (backwards thru gates) so we can eliminate them. This is done by pretending it crashed.
        if (LastGatePassed > CurrentGate || CurrentGate - 30 > LastGatePassed)
        {
            CurrentGate = -2; // dump it at the bottom for mutation, as punishment for going the wrong way
            Eliminate(EliminationReasons.backwardsGate);
        }
        else
            LastGatePassed = CurrentGate;
    }
}
```
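The “going backwards” rule at the end of `Move()` can be pulled out into a pure function, which makes it easy to test on its own. This sketch mirrors the condition above, including its hard-coded 30-gate jump threshold; `GateRules` and `IsGoingBackwards` are hypothetical names.

```csharp
// Hypothetical helper mirroring the regression test inside Move().
public static class GateRules
{
    // Same value as the hard-coded 30 in Move(): a forward jump bigger than this
    // is treated as having crossed the start line the wrong way.
    public const int MaxForwardJump = 30;

    // True when the car reached a lower-numbered gate, or jumped so far ahead
    // that it must have reversed off the start line (e.g. gate 0 -> gate 62).
    public static bool IsGoingBackwards(int lastGatePassed, int currentGate) =>
        lastGatePassed > currentGate || currentGate - MaxForwardJump > lastGatePassed;
}
```

A car that reverses off the start line meets the highest-numbered gate first, which is exactly what the second clause catches.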

The AI is applied like this. The “VisionSystem” sensor output is requested for a given angle and location, and it returns the sensor outputs. The code is nearly ready to support additional “sensors” such as speed, direction or g-force, but the UI for configuring the AI would need tweaking to accommodate them (as the input count is derived from the sensors).

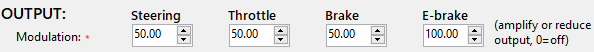
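`VisionSensorOutput` itself isn’t shown, but a common way to build distance sensors is ray marching: step outward from the vehicle along each sensor angle until the ray reaches grass or its maximum range, then report the normalised distance. The sketch below assumes that approach. The `isGrass` predicate, the step size and the normalisation (1 means “clear to maximum range”, 0 means “touching grass”) are all assumptions, and the project’s vision system may work differently.

```csharp
using System;

// Hypothetical ray-marching distance sensors; NOT the project's VisionSystem.
public static class VisionSketch
{
    // sensorAnglesDegrees are relative to the car's heading (e.g. -60, -30, 0, 30, 60).
    // isGrass(x, y) reports whether a point is off the track.
    // Returns one value per sensor: distance to grass / maxRange, so 1 means "clear".
    public static double[] SensorOutput(
        double carX, double carY, double headingDegrees,
        double[] sensorAnglesDegrees, double maxRange,
        Func<double, double, bool> isGrass, double step = 1.0)
    {
        var result = new double[sensorAnglesDegrees.Length];
        for (int s = 0; s < sensorAnglesDegrees.Length; s++)
        {
            double radians = (headingDegrees + sensorAnglesDegrees[s]) * Math.PI / 180.0;
            double dx = Math.Cos(radians), dy = Math.Sin(radians);

            // Walk along the ray until it hits grass or runs out of range.
            double distance = 0;
            while (distance < maxRange && !isGrass(carX + dx * distance, carY + dy * distance))
                distance += step;

            result[s] = Math.Min(distance, maxRange) / maxRange;
        }
        return result;
    }
}
```

The length of the returned array equals the number of configured sensors, which is why the network’s input count follows the sensor configuration.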

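Output from the neural network is converted into a “carInputState” by multiplying each output by a modulation value. Where a control isn’t mapped to an output neuron (its mapping is -1), a default is used instead: 0.8 for the throttle and 0 for everything else.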

Lastly, it passes the state to “.ApplyPhysics()”. This lets us apply realistic or basic physics without a substantial fork in logic everywhere.

```csharp
/// <summary>
/// Read the sensors, provide to the "brains" (neural network) and take action based on
/// the output.
/// </summary>
private void ApplyAIoutputAndMoveTheCar()
{
    var AIconfig = Config.s_settings.AI;

    // input is the distance sensors of how soon we'll impact
    double[] visionSensor = Config.s_settings.AI.VisionSystem.VisionSensorOutput(id, CarImplementation.AngleVehicleIsPointingInDegrees, CarImplementation.LocationOnTrack);

    // rather than have rigid fixed inputs, we've built this to be expandable.
    // The NN doesn't care how many inputs we have.
    double[] outputFromNeuralNetwork;
    double[] otherSensors = Array.Empty<double>(); // TBD: Speed/100, AngleCarIsPointingInDegrees/360, current direction, etc.
    double[] neuralNetworkInput = CombineNNInputs(visionSensor, otherSensors);

    // ask the neural network to use the input and decide what to do with the car
    outputFromNeuralNetwork = NeuralNetwork.s_networks[id].FeedForward(neuralNetworkInput); // process inputs

    // e.g.
    // outputFromNeuralNetwork[0] -> how much to rotate the car -1..+1 from TANH
    // outputFromNeuralNetwork[1] -> the relative speed of the car -1..+1 from TANH. To minimise backwards travel, we add 0.9 and divide by 2 (1/10th speed backwards).

    // Each control uses its mapped output neuron scaled by its modulation, or a default when unmapped (-1).
    carInputState = new(
        AIconfig.NeuronMapTypeToOutput[ConfigAI.c_steeringOrThrottle2Neuron] != -1 ?
            outputFromNeuralNetwork[AIconfig.NeuronMapTypeToOutput[ConfigAI.c_steeringOrThrottle2Neuron]] * AIconfig.OutputModulation[ConfigAI.c_steeringOrThrottle2Neuron] : 0,

        AIconfig.NeuronMapTypeToOutput[ConfigAI.c_throttleNeuron] != -1 ?
            outputFromNeuralNetwork[AIconfig.NeuronMapTypeToOutput[ConfigAI.c_throttleNeuron]] * AIconfig.OutputModulation[ConfigAI.c_throttleNeuron] : 0.8F,

        AIconfig.NeuronMapTypeToOutput[ConfigAI.c_brakeNeuron] != -1 ?
            outputFromNeuralNetwork[AIconfig.NeuronMapTypeToOutput[ConfigAI.c_brakeNeuron]] * AIconfig.OutputModulation[ConfigAI.c_brakeNeuron] : 0,

        AIconfig.NeuronMapTypeToOutput[ConfigAI.c_eBrakeNeuron] != -1 ?
            outputFromNeuralNetwork[AIconfig.NeuronMapTypeToOutput[ConfigAI.c_eBrakeNeuron]] * AIconfig.OutputModulation[ConfigAI.c_eBrakeNeuron] : 0,

        AIconfig.NeuronMapTypeToOutput[ConfigAI.c_steeringOrThrottle2Neuron] != -1 ?
            outputFromNeuralNetwork[AIconfig.NeuronMapTypeToOutput[ConfigAI.c_steeringOrThrottle2Neuron]] * AIconfig.OutputModulation[ConfigAI.c_steeringOrThrottle2Neuron] : 0
    );

    CarImplementation.ApplyPhysics(carInputState);
}
```
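The five conditional expressions that build `carInputState` all follow one rule: if a control is mapped to an output neuron, scale that neuron’s output by the control’s modulation; otherwise use a default. A small helper can state that rule once. This is a sketch only: `OutputMapping.Read`, and passing the mapping and modulation as plain arrays, are assumptions about how `NeuronMapTypeToOutput` and `OutputModulation` are shaped.

```csharp
// Hypothetical helper; the real config types may be shaped differently.
public static class OutputMapping
{
    // neuronMap[control] is the index of the NN output driving that control, or -1 if unmapped.
    // modulation[control] is the multiplier applied to that output.
    public static double Read(double[] nnOutput, int[] neuronMap, double[] modulation,
                              int control, double defaultWhenUnmapped)
    {
        int outputIndex = neuronMap[control];
        return outputIndex != -1
            ? nnOutput[outputIndex] * modulation[control]
            : defaultWhenUnmapped;
    }
}
```

Each constructor argument would then become a single call, for instance reading the throttle control with a default of 0.8, which keeps the defaults visible in one place.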

Renderers are subclassed from VehicleRendererBase. The base class provides collision detection, rendering, and ghost cars (for eliminated vehicles), whereas each subclass provides “.Draw()” and “.RawHitTestPoints()”, because every vehicle differs in appearance and hit points.
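In outline, that split is the template-method pattern: the base class owns collision detection and asks each subclass for its hit-test points. The sketch assumes hit points are given relative to the vehicle’s centre and rotated by its heading, and that the track can answer “is this point grass?”. Apart from `Draw` and `RawHitTestPoints`, the names here (`VehicleRendererSketch`, `CarRendererSketch`, the `Collided` parameters) are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical base class illustrating the split; NOT the project's VehicleRendererBase.
public abstract class VehicleRendererSketch
{
    // Each vehicle type supplies its own look and hit points.
    public abstract void Draw();
    protected abstract IReadOnlyList<(double X, double Y)> RawHitTestPoints();

    // Collision is implemented once for every vehicle type: rotate each hit point by the
    // heading, translate it to the car's position, and test it against the track.
    public bool Collided(double carX, double carY, double headingDegrees, Func<double, double, bool> isGrass)
    {
        double radians = headingDegrees * Math.PI / 180.0;
        double cos = Math.Cos(radians), sin = Math.Sin(radians);
        foreach (var (x, y) in RawHitTestPoints())
        {
            double worldX = carX + x * cos - y * sin;
            double worldY = carY + x * sin + y * cos;
            if (isGrass(worldX, worldY)) return true;
        }
        return false;
    }
}

// Hypothetical car: a 20 x 10 rectangle with a hit point at each corner.
public sealed class CarRendererSketch : VehicleRendererSketch
{
    public override void Draw() => Console.WriteLine("drawing a car"); // stand-in for real drawing code

    protected override IReadOnlyList<(double X, double Y)> RawHitTestPoints() =>
        new (double X, double Y)[] { (10.0, 5.0), (10.0, -5.0), (-10.0, 5.0), (-10.0, -5.0) };
}
```

A tank subclass would only need its own `Draw` and a different set of corner points; the collision logic is inherited unchanged.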

We need to score how far each vehicle managed to drive before going off the track. The top-performing 50% are kept, and the bottom-performing 50% are discarded and replaced with mutated clones of the top 50%.

Over multiple generations, this should trend towards better vehicles. That doesn’t mean all of them will be perfect; that’s not how mutation works. Although the trend is “better” with each generation, after a mutation some perfectly good vehicles will turn into poor ones, like the one that spins on the spot.

We could clone the single top-performing car into all bottom-performing cars, but this isn’t necessarily a good idea at the start. A mutational tweak to one of the other cars may well yield a better-performing car than a tweak to the top car.
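The keep-the-top-half, mutate-the-bottom-half step might be sketched like this. Networks are reduced to flat weight arrays, and the mutation rule (nudge a random subset of weights by a small random amount) is an assumption; the project’s own mutation may choose weights and amounts differently. In line with the reasoning above, each bottom-half slot receives a clone of a *different* top-half network rather than a copy of the single best. `Genome`, `Evolution` and the default rates are hypothetical.

```csharp
using System;
using System.Linq;

// Hypothetical genome: a network flattened to its weights, plus its fitness (e.g. gates passed).
public sealed class Genome
{
    public double[] Weights;
    public int Fitness;
    public Genome(double[] weights) => Weights = weights;
}

public static class Evolution
{
    // Keeps the top 50% unchanged and overwrites the bottom 50% with mutated clones of the top 50%.
    public static void NextGeneration(Genome[] population, Random rng,
                                      double mutationChance = 0.1, double mutationSize = 0.2)
    {
        // Best first; LINQ's ordering is stable, so ties keep their existing order.
        var ranked = population.OrderByDescending(g => g.Fitness).ToArray();
        int half = ranked.Length / 2;

        for (int i = 0; i < half; i++)
        {
            Genome parent = ranked[i];                    // i-th best survives
            Genome child = ranked[ranked.Length - 1 - i]; // i-th worst is replaced
            child.Weights = (double[])parent.Weights.Clone();
            for (int w = 0; w < child.Weights.Length; w++)
                if (rng.NextDouble() < mutationChance)
                    child.Weights[w] += (rng.NextDouble() * 2 - 1) * mutationSize; // shift by up to +/- mutationSize
        }

        Array.Copy(ranked, population, ranked.Length); // leave the population sorted best-first
    }
}
```

Pairing the i-th best with the i-th worst keeps the diversity of the top half, which is the point made above about not cloning the top car into every slot.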

You may be wondering why time isn’t included in the ranking. That’s a good question. The simple answer is that a faster car reaches further before we mutate, so we are already favouring faster networks/vehicles.

Determining how far the vehicle drove

We can’t simply measure distance travelled: even with a virtual odometer, a vehicle could drive in circles, and that would still register as distance.

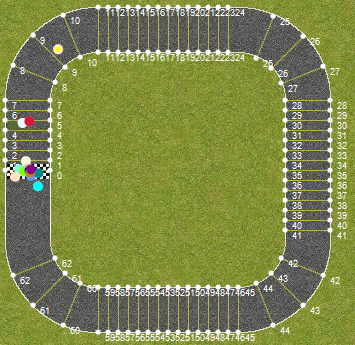

I started off solving this using proximity to “waypoints” (“blobs” around the track). That proved impractical; what we needed were lines across the track, which we’ll call “gates”. Although we can hide them visually, they are always present, otherwise we couldn’t rank the cars. The initial build used polygons because computing gates is as easy as drawing a radius from the centre.
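For a track laid out around a centre point, gates can be generated by sweeping a radius outward from the centre at evenly spaced angles and clipping it between the inner and outer edges of the track. The sketch below assumes the track can report its inner and outer radius at any angle (`innerRadiusAt` and `outerRadiusAt` are hypothetical), so it’s a simplified illustration of the idea rather than the project’s gate-building code.

```csharp
using System;

// Hypothetical gate: a line segment from the inner edge to the outer edge of the track.
public readonly record struct RadialGate(double X1, double Y1, double X2, double Y2);

public static class GateBuilder
{
    // Creates gateCount gates around (cx, cy). Gate 0 sits at angle 0 and numbers
    // increase with the angle (anticlockwise with y pointing up, clockwise on a y-down screen).
    public static RadialGate[] Radial(double cx, double cy, int gateCount,
                                      Func<double, double> innerRadiusAt, Func<double, double> outerRadiusAt)
    {
        var gates = new RadialGate[gateCount];
        for (int i = 0; i < gateCount; i++)
        {
            double angle = 2 * Math.PI * i / gateCount;
            double cos = Math.Cos(angle), sin = Math.Sin(angle);
            double rIn = innerRadiusAt(angle), rOut = outerRadiusAt(angle);
            gates[i] = new RadialGate(cx + rIn * cos, cy + rIn * sin, cx + rOut * cos, cy + rOut * sin);
        }
        return gates;
    }
}
```

For a circular ring with inner radius 50 and outer radius 100, every gate is a 50-unit segment pointing away from the centre.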

As you can see, the blobs start at gate 0, and on this track a vehicle needs to pass gate 62 to complete a circuit. But we have to be careful: a vehicle going the wrong way around the track from the start would hit gate 62 straight away and be ranked higher. We therefore have to penalise vehicles for going backwards!
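One way to decide whether a car has “hit” a gate is to treat its movement during one step as a line segment (from its previous position to its new one) and test that segment for intersection with each gate line. The sketch uses the standard orientation (cross-product) test. `GateCrossing` is hypothetical, and the project’s `CarIsAtGate` may work differently (for example, by testing the vehicle’s hit points instead).

```csharp
// Hypothetical segment-intersection check for "did this move cross this gate?".
public static class GateCrossing
{
    // z-component of (b - a) x (c - a): > 0 means c is left of a->b, < 0 right, 0 collinear.
    private static double Orientation(double ax, double ay, double bx, double by, double cx, double cy) =>
        (bx - ax) * (cy - ay) - (by - ay) * (cx - ax);

    // True if segment P1-P2 (the car's move this step) properly crosses segment Q1-Q2 (a gate).
    // Touching exactly at an endpoint, or collinear overlap, is not counted, to keep the sketch short.
    public static bool Crosses(double p1x, double p1y, double p2x, double p2y,
                               double q1x, double q1y, double q2x, double q2y)
    {
        double d1 = Orientation(q1x, q1y, q2x, q2y, p1x, p1y);
        double d2 = Orientation(q1x, q1y, q2x, q2y, p2x, p2y);
        double d3 = Orientation(p1x, p1y, p2x, p2y, q1x, q1y);
        double d4 = Orientation(p1x, p1y, p2x, p2y, q2x, q2y);

        // The move's endpoints must lie on opposite sides of the gate, and vice versa.
        return ((d1 > 0 && d2 < 0) || (d1 < 0 && d2 > 0)) &&
               ((d3 > 0 && d4 < 0) || (d3 < 0 && d4 > 0));
    }
}
```

The sign of `d1` also reveals which side of the gate the car came from, which is another possible way to spot a car travelling the wrong way.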

The Process

- Create #numVehicles neural networks per configured values
- While not the end of the world…
  - Create #numVehicles vehicles at the same start position, and associate each with a neural network (a 1:1 relationship between vehicle and neural network).
  - While at least one vehicle is not eliminated and no vehicle has done 2 laps
    - For each vehicle
      - If the vehicle is eliminated, skip this vehicle (move to the next one)
      - Move the vehicle based on output from its neural network
      - If the vehicle hit the grass, eliminate the vehicle
      - If the vehicle has completed a lap, increment #laps for this vehicle
      - If the vehicle went through the next gate, increment #gates-passed
      - If the vehicle went backwards (regressed in gate number), eliminate the vehicle
    - End For
  - End While
  - Rank cars in order of #gates-passed
  - Sort cars by rank (the cars that passed the most gates form the top-performing 50%)
  - Replace the bottom-performing 50% with clones of the top-performing 50%
  - Mutate the bottom 50% slightly
  - Destroy all vehicles, but not their neural networks.
- End While
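The inner loop of the process above can be sketched in C# as follows. It is deliberately abstract: the physics and track checks are hidden behind a `step` callback that moves one vehicle and reports what happened. `SimVehicle`, `MoveResult`, `RaceLoop` and the `maxMoves` cap are hypothetical; only the 2-lap stop condition and the ranking by gates passed come from the description.

```csharp
using System;
using System.Linq;

// Hypothetical outcome of a single move, as reported by the physics/track code.
public enum MoveResult { Moved, PassedGate, CompletedLap, HitGrass, WentBackwards }

public sealed class SimVehicle
{
    public int NetworkId;   // 1:1 link to a neural network that outlives the vehicle
    public bool Eliminated;
    public int GatesPassed;
    public int Laps;
}

public static class RaceLoop
{
    // Runs one generation and returns the vehicles ranked best-first by gates passed.
    public static SimVehicle[] RunGeneration(int vehicleCount, Func<SimVehicle, MoveResult> step,
                                             int maxMoves, int lapsToFinish = 2)
    {
        var vehicles = Enumerable.Range(0, vehicleCount)
                                 .Select(id => new SimVehicle { NetworkId = id })
                                 .ToArray();

        for (int move = 0; move < maxMoves; move++)
        {
            // Stop when everyone is out, or someone has finished the required laps.
            if (vehicles.All(v => v.Eliminated) || vehicles.Any(v => v.Laps >= lapsToFinish))
                break;

            foreach (var v in vehicles)
            {
                if (v.Eliminated) continue;
                switch (step(v))
                {
                    case MoveResult.HitGrass:
                    case MoveResult.WentBackwards: v.Eliminated = true; break;
                    case MoveResult.PassedGate: v.GatesPassed++; break;
                    case MoveResult.CompletedLap: v.Laps++; v.GatesPassed++; break; // the finish line is also a gate
                }
            }
        }

        // Rank by progress; the caller keeps the top half and mutates the bottom half.
        return vehicles.OrderByDescending(v => v.GatesPassed).ToArray();
    }
}
```

The vehicles are rebuilt on every call while their `NetworkId` values stay the same, mirroring “destroy all vehicles, but not their neural networks”.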

To accelerate learning, there is a small step not covered above: forcing mutation earlier. I want to fill the top 50% with the best vehicles I can early on. Without cloning the single best into all the worst, it takes time for the top half to fill up with half-decent vehicles. Given we deliberately chose not to copy one to all, we do it differently: the cars get a fixed number of moves, after which we stop proceedings and force the mutation. Each time, we let them run a little longer (e.g. 10% more moves). This improves the rate of learning.
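The growing move budget can be expressed in a few lines. This sketch assumes a 10% increase per generation (the example figure above), uses integer arithmetic so the budget is predictable, and guarantees at least one extra move each time; `MoveBudget.Next` and the optional cap are hypothetical.

```csharp
using System;

public static class MoveBudget
{
    // Returns the move limit for the next generation: about percentIncrease% more than the
    // current limit (always at least one extra move), never exceeding maxMoves.
    public static int Next(int currentMoves, int percentIncrease = 10, int maxMoves = int.MaxValue)
    {
        long grown = currentMoves + Math.Max(1L, (long)currentMoves * percentIncrease / 100);
        return (int)Math.Min(grown, maxMoves);
    }
}
```

Starting from 100 moves, successive generations would get 110, 121, 133 and so on, so early generations end quickly and the top half fills with half-decent networks sooner.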

A lesson for all: solving a problem using mutation doesn’t necessarily produce an optimal solution.

The cars below do navigate the track, but they have acquired a rather undesirable behaviour: spinning. This happens because our algorithm favours whoever got the furthest, and that selection process doesn’t pick the one that drives optimally.

The top view jumps a little because it switches to whichever car is in the lead, and the spinning makes that erratic.

Exhibit B, of the “explain this please” variety… Yes, they are spinning 360 degrees the whole way around the track!!

If you look at what it’s doing, it becomes a little clearer. The four outputs are intentionally configured in a crazy way (massive brake and e-brake force).

The right-hand neuron in the hidden layer toggles between positive and negative in response to the inputs (mostly the third from the right). It’s yanking the e-brake and stamping on the brake repeatedly, which is presumably what causes the rotation. Whether that reflects less-than-realistic physics, you decide.

Another problem is that the further the cars go, the slower training becomes. If a car takes half a second to complete 25 gates, then 50 gates will take 1 second. So learning slows down as the cars learn to get further around the track. Even in “quiet” mode (not painting the UI), each generation takes longer the more gates the cars pass through.

Solving it isn’t without challenges. You can’t simply resume from where a car failed, because there is no guarantee that a mutation able to complete the rest of the course can still complete the start of it…

On the next page (3) we’re going to cover some of the items that can be configured.