If you’re from the Netherlands, the concept of “potholes” may be foreign to you given the quality of your roads; feel free to laugh anyway.

Aside from the spiralling cost of food, gas and petrol in the UK resulting from an ongoing war in Europe (you may have seen it in the papers?), apparently the very materials we use to repair roads are also affected.

In keeping with British humour, I therefore present cars that dodge potholes.

OK, this isn’t going to compete with Tesla, but at least it doesn’t hit a bollard (about 48 seconds in), nor parked emergency vehicles with flashing lights. I am not jealous: even if I could afford a Tesla, thanks to a certain event I couldn’t afford to charge it at today’s electricity prices!

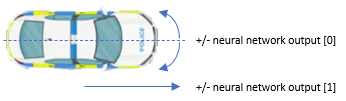

As can be seen below, when all but one car has crashed, the app shows the neural network for the remaining car. And just for fun, I turned on the LIDAR.

I’ve kind of given the game away in that last sentence: yet another(!) of my applications uses a sensor… This time, though, it is used to avoid things rather than hit them. One thing you can say about this car is that certain sensors affect it more than others (drawn larger).

Training

It trains itself. We put the cars on a scrolling road with an abundance of potholes. The worst-performing 50% of cars are replaced with clones of the top 50%, each with a small mutation. Yes, you guessed it: this uses genetic mutation.
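To make that selection step concrete, here is a minimal C# sketch of one generation. It assumes each car’s weights and biases are flattened into a single `double[]` and that every car already has a fitness score (the post scores cars on distance travelled). The `Genome` and `Evolution` types, the mutation chance and the mutation size are hypothetical names and values for illustration, not taken from the project’s source.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical genome: a flat array of weights/biases plus the car's score.
public sealed class Genome
{
    public double[] Genes { get; }
    public double Fitness { get; set; }

    public Genome(double[] genes) => Genes = genes;

    // Copies the genes, then nudges each one with probability mutationChance
    // by a random amount in [-mutationSize, +mutationSize).
    public Genome CloneWithMutation(Random rng, double mutationChance, double mutationSize)
    {
        double[] copy = (double[])Genes.Clone();
        for (int i = 0; i < copy.Length; i++)
        {
            if (rng.NextDouble() < mutationChance)
            {
                copy[i] += (rng.NextDouble() * 2 - 1) * mutationSize;
            }
        }
        return new Genome(copy); // fitness starts at 0 for the new car
    }
}

public static class Evolution
{
    // Keeps the best half unchanged and replaces the worst half with
    // mutated clones of the best half (hypothetical defaults).
    public static List<Genome> NextGeneration(List<Genome> population, Random rng,
        double mutationChance = 0.1, double mutationSize = 0.5)
    {
        // highest fitness (e.g. furthest distance) first
        List<Genome> ranked = population.OrderByDescending(g => g.Fitness).ToList();
        int half = ranked.Count / 2;

        var next = new List<Genome>(ranked.Count);
        next.AddRange(ranked.Take(ranked.Count - half)); // survivors

        // the i-th replacement is a mutated clone of the i-th best car
        for (int i = 0; i < half; i++)
        {
            next.Add(ranked[i].CloneWithMutation(rng, mutationChance, mutationSize));
        }
        return next;
    }
}
```

Keeping the top half untouched means the best car found so far is never lost; only its clones are perturbed, so a bad mutation costs at most one slot in the next generation.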

Since the neurons are drawn on screen and you can count them, you’ll know the setup for this one is:

- Input: 17, one for each LIDAR sensor

- Hidden: 0 (yes, this task is too easy to spend hidden neurons on)

- Output: 2, (1) the amount to rotate the car, (2) the speed
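With no hidden layer, each output is just an activation applied to a weighted sum of the 17 inputs plus a bias, so a network of this shape holds 17 × 2 weights plus 2 biases: 36 numbers for mutation to tweak. Below is a minimal C# sketch; `SingleLayerNetwork` is a hypothetical name, and the `tanh` activation is an assumption, since the post doesn’t say which activation it uses.

```csharp
using System;

// Hypothetical network with no hidden layer: every output neuron is wired
// directly to every input, so output[o] = tanh(bias[o] + sum_i weight[o, i] * input[i]).
public sealed class SingleLayerNetwork
{
    public const int InputCount = 17; // one per LIDAR sensor
    public const int OutputCount = 2; // [0] rotation, [1] speed (same order as the post)

    public readonly double[,] Weights = new double[OutputCount, InputCount];
    public readonly double[] Biases = new double[OutputCount];

    public double[] FeedForward(double[] inputs)
    {
        if (inputs.Length != InputCount)
            throw new ArgumentException($"Expected {InputCount} inputs, got {inputs.Length}.");

        var outputs = new double[OutputCount];
        for (int o = 0; o < OutputCount; o++)
        {
            double sum = Biases[o];
            for (int i = 0; i < InputCount; i++)
                sum += Weights[o, i] * inputs[i];

            outputs[o] = Math.Tanh(sum); // squashes to -1..1 (assumed activation)
        }
        return outputs;
    }
}
```

Because nothing sits between the sensors and the outputs, each weight directly says how strongly one LIDAR ray pushes the steering or the throttle, which fits the observation that some sensors matter more than others.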

There’s no complex physics for the cars. We could absolutely have reused the physics from the car/tank project I built first (and have yet to blog about). You’ll notice from the code that the LIDAR readings are fed into the neural network, and the network’s output is used to change the car’s speed and angle.

```csharp
using System;
using System.Drawing; // Bitmap, PointF

/// <summary>
/// Unreal "physics" of car, but sufficient for the AI demo.
/// output[1] from AI determines what speed the car travels.
/// output[0] from AI determines how much to *rotate* the car.
/// </summary>
/// <param name="road">Bitmap of the road (including potholes) that the LIDAR scans.</param>
/// <param name="viewPortX">Horizontal scroll offset of the viewport, subtracted from the car's X to locate it on the road bitmap.</param>
internal void Move(Bitmap road, int viewPortX)
{
    ConfigAI aiConfig = Settings.Config.s_settings.AI;

    // input is the distance sensors of how soon we'll impact
    double[] neuralNetworkInput = s_vision.VisionSensorOutput(road, AngleInDegrees, new PointF(Location.X - viewPortX, Location.Y));

    // ask the neural network to use the input and decide what to do with the car
    double[] outputFromNeuralNetwork = NeuralNetwork.s_networks[Id].FeedForward(neuralNetworkInput);

    // process the network's outputs

    // a bias of 1.5 ensures the car has to move forward, and not stop,
    // so technically the AI decides whether to slow down to minimum speed (1.5) or speed up (to at most 2.5).
    float speed = 1.5F + ((float)outputFromNeuralNetwork[ConfigAI.c_throttleNeuron] * aiConfig.SpeedAmplifier).Clamp(0, 1);

    // Remember: speed x angle determines where the car ends up next
    AngleInDegrees += (float)outputFromNeuralNetwork[ConfigAI.c_steeringNeuron] * aiConfig.SteeringAmplifier;

    // it'll work even if we violate this, but let's keep it clean: 0..359.999 degrees.
    if (AngleInDegrees < 0) AngleInDegrees += 360;
    if (AngleInDegrees >= 360) AngleInDegrees -= 360;

    // move the car using basic sin/cos math -> x = r * cos(theta), y = r * sin(theta)
    // in this instance "r" is the speed output, theta is the angle of the car.
    double angleCarIsPointingInRadians = Utils.DegreesInRadians(AngleInDegrees);

    Location.X += (float)Math.Cos(angleCarIsPointingInRadians) * speed;
    Location.Y += (float)Math.Sin(angleCarIsPointingInRadians) * speed;
}
```
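The `Move` method relies on `s_vision.VisionSensorOutput`, whose implementation isn’t shown here. As a hypothetical sketch of how a LIDAR like this could work: fan 17 rays out around the car’s heading and step along each one until it hits a pothole or leaves the road. Assumptions, none of them from the project: the road is a `bool[,]` grid rather than a `Bitmap`, the field of view is 180°, the range is 50 cells, leaving the grid counts as a hit, and each reading is a proximity (0 = nothing in range, 1 = obstacle in the very next cell). It uses the same cos/sin convention as `Move`.

```csharp
using System;

// Hypothetical LIDAR over a grid where true = pothole.
public static class Lidar
{
    public static double[] Scan(bool[,] obstacles, double carX, double carY, double headingDegrees,
        int rayCount = 17, double fieldOfViewDegrees = 180, int maxRange = 50)
    {
        var readings = new double[rayCount]; // defaults to 0 = nothing within range
        int width = obstacles.GetLength(0);
        int height = obstacles.GetLength(1);

        for (int r = 0; r < rayCount; r++)
        {
            // spread rays evenly from -fov/2 to +fov/2 around the heading
            double offset = rayCount == 1
                ? 0
                : -fieldOfViewDegrees / 2 + r * fieldOfViewDegrees / (rayCount - 1);
            double radians = (headingDegrees + offset) * Math.PI / 180.0;
            double dx = Math.Cos(radians), dy = Math.Sin(radians);

            // march outwards one cell at a time
            for (int step = 1; step <= maxRange; step++)
            {
                int x = (int)Math.Round(carX + dx * step);
                int y = (int)Math.Round(carY + dy * step);
                bool outside = x < 0 || y < 0 || x >= width || y >= height;
                if (outside || obstacles[x, y])
                {
                    // nearer hits give larger values: 1.0 at step 1, 1/maxRange at step maxRange
                    readings[r] = 1.0 - (double)(step - 1) / maxRange;
                    break;
                }
            }
        }
        return readings;
    }
}
```

Encoding readings as proximity rather than raw distance means a clear road feeds zeros into the network, so with a no-hidden-layer network only the rays that actually see something contribute to steering and speed.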

This is an example of what mutation can achieve.

We score each car on the distance it travelled. Given that ALL cars start at the same point and encounter the same obstacles, the one that went furthest is the best. It takes a few generations (of 20 cars each) to stumble across weights/biases that work.

It can sometimes fail despite having got quite far in a previous run, and this is something I don’t like about learning via mutation. In common with a Tesla, it reacts to things it has experienced. Unlike a Tesla, however, it uses LIDAR rather than cameras. The LIDAR as coded has a limited number of sensors, and combined with varying speed and random potholes, that can cause the car to crash…

If you run it and it doesn’t seem to learn after a few minutes, re-run it. This is something I hate about using mutation: it depends on finding good initial values.

The source code, if you’re interested, is on GitHub.

Kudos to Elon Musk and team Tesla: I have nothing but respect and admiration for what they have achieved in a relatively short space of time, despite my earlier ribbing about a few fixable issues. Incredible AI, as long as we ignore this.

I hope this short post provided some amusement in these uncertain times. Please post comments below if you have questions or thoughts.