Switching GEDCOM Files (a surprisingly tricky business)

What comes next is far less obvious than the UI flow. In fact, it’s one of those problems that seems simple until you actually try to solve it – like assembling flat‑pack furniture or explaining blockchain to your parents.

Most users, I suspect, will pick a GEDCOM file and start chatting about it. Lovely. Straightforward. Civilised. But we can’t assume they only have one file. Genealogists are collectors by nature. They hoard family trees the way dragons hoard gold. Forcing them to quit the app just to switch files would be… well, rude.

So we have two clear options:

- Option 1: The user explicitly asks to change the file.

- Option 2: We provide a button or link in the UI to switch files.

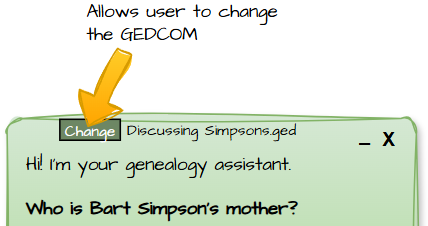

Option 2 is the classic, sensible, grown‑up approach:

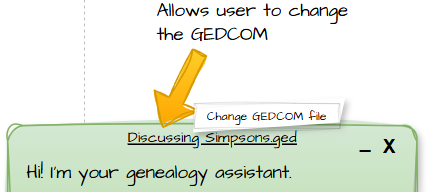

Or, if you prefer something sleeker, a hyperlink with a hover‑over hint:

Both are perfectly valid. Both are perfectly normal. But then there’s Option 1, which is where I decided to have a little fun.

How does one ask to change a GEDCOM?

If a user wants to switch files, what will they actually type?

- “Change GEDCOM”

- “New file please”

- “Switch GEDCOM”

- “Please change GEDCOM”

- Or something completely unexpected, like “Take me to the other tree, Jeeves”

It’s anyone’s guess.

So I thought: why not let an LLM decide whether the user is asking to switch files? After all, this is a genealogy AI project – if I can’t use AI for the fun bits, what’s the point?

But let’s be honest: this is not a long‑term solution. Tokens cost money, and if I’m paying for them, I’d rather spend them on actual genealogy reasoning than on detecting whether someone wants to click a button. If the cost or latency becomes noticeable, it instantly becomes a “why did you ruin your own product?” kind of idea.

Still, for the prototype, it was too tempting not to try.

The Flow (a.k.a. “Is this a question or a file switch?”)

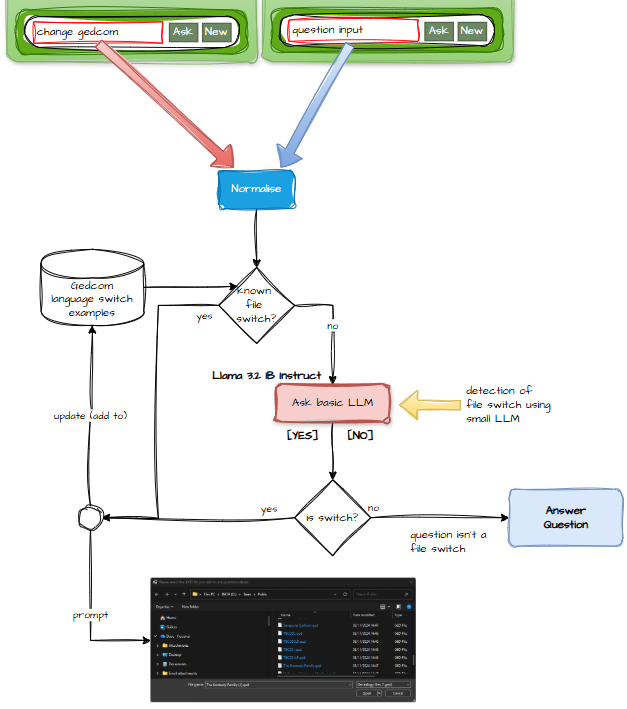

Here’s how the system decides whether the user is asking a genuine question or trying to hop to another GEDCOM:

- The user enters some text. It might be a real question. It might be a file‑switch request. It might be both.

- The text is normalised, token‑reduced, space‑trimmed, and synonym‑aligned so that “switch GEDCOM” and “change the family tree thingy” can be treated consistently.

- The normalised text is compared against a list of known file‑switch phrases. If it matches, we’re done.

- If not, we ask Llama 3.2 1B Instruct, a tiny, lightning‑fast model, whether the user is trying to switch files.

- If the LLM says “yes,” we store that phrase for next time (so the system gets smarter) and return “file switch requested.”

- If the LLM says “no,” we treat it as a normal question.
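The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the project's actual code: the `SYNONYMS` and `FILLER` tables, the seed phrases, and the `SwitchDetector` class are all hypothetical names invented for the example, and the LLM is passed in as a plain callable so the sketch stays independent of whichever runtime hosts the model.

```python
import re
from typing import Callable

# Hypothetical synonym table: maps loose wording onto canonical tokens.
SYNONYMS = {
    "switch": "change",
    "swap": "change",
    "file": "gedcom",
    "tree": "gedcom",
}

# Hypothetical filler words that carry no intent and are dropped.
FILLER = {"please", "the", "a", "to", "my", "me"}


def normalise(text: str) -> str:
    """Lower-case, strip punctuation, drop filler words, align synonyms."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    kept = [SYNONYMS.get(word, word) for word in words if word not in FILLER]
    return " ".join(kept)


class SwitchDetector:
    """Rules first, LLM second; phrases the LLM approves are remembered."""

    def __init__(self, ask_llm: Callable[[str], bool]) -> None:
        # ask_llm is an assumed interface: raw user text in, True/False out.
        self.ask_llm = ask_llm
        # Assumed seed list of already-normalised file-switch phrases.
        self.known = {"change gedcom", "new gedcom"}

    def is_switch_request(self, text: str) -> bool:
        key = normalise(text)
        if key in self.known:
            return True  # cheap path: no tokens spent
        if self.ask_llm(text):
            self.known.add(key)  # remember the phrase for next time
            return True
        return False  # treat as a normal question
```

With this shape, "Please change GEDCOM" and "Switch GEDCOM" both normalise to the same key and never reach the model, while something like "Take me to the other tree, Jeeves" costs one LLM call the first time and none afterwards. In a real app the learned phrases would need to be persisted somewhere; here they only live in memory.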

It’s a neat little hybrid: part rules‑based, part LLM‑powered, part “let’s see what happens.” And for a prototype, it works surprisingly well.
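The LLM step is only useful if the model's answer can be turned into a reliable yes or no, and small models do like to chat. Here is one hedged way to frame the question and read the reply. The prompt wording, `build_messages`, and `parse_yes_no` are assumptions made for illustration; how the messages are actually sent to Llama 3.2 1B Instruct depends on the runtime and is deliberately left out.

```python
import re

# Assumed prompt wording; the real prompt may differ.
SYSTEM_PROMPT = (
    "You classify messages sent to a genealogy chat assistant. "
    "Answer with exactly one word: YES if the user wants to switch to a "
    "different GEDCOM file, NO otherwise."
)


def build_messages(user_text: str) -> list[dict[str, str]]:
    """Build a chat-style message list (system + user) for the classifier."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_text},
    ]


def parse_yes_no(reply: str) -> bool:
    """Count the reply as 'yes' only if its first word is 'yes'.

    Anything else, including an empty or rambling reply, counts as 'no',
    so a confused model falls back to treating the text as a question.
    """
    words = re.findall(r"[a-z]+", reply.lower())
    return bool(words) and words[0] == "yes"
```

Defaulting to "no" is the conservative choice here: a missed file-switch request just means the user gets an odd answer and tries again, whereas a false "yes" would yank them out of their conversation.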