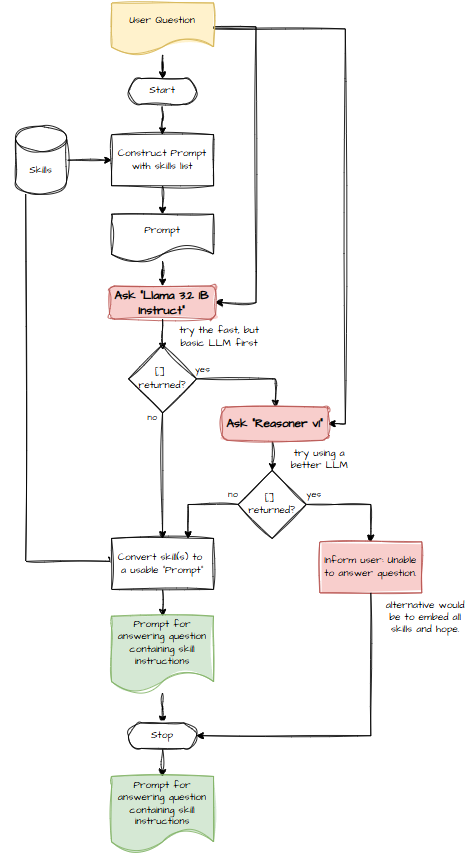

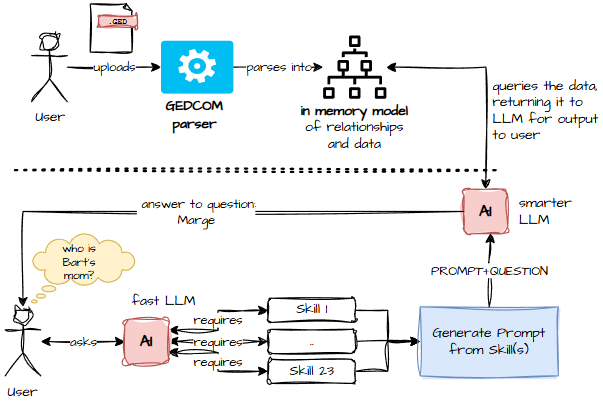

The Magic Skill Selector

Imagine you have 23 experts sitting in a room. You ask a question. You need exactly the right experts to raise their hands. Not all of them. Not none of them. Just the ones who can help.

That’s the job of the skill selector.

My requirements were simple:

- Number the skills 1..n

- Ask a small model to return an array of relevant skill numbers

- Example: [1, 3]
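
As a rough sketch, the numbering and the array handling could look something like this in Python. The function names `number_skills` and `parse_skill_reply` are hypothetical, and the validation rules (drop out-of-range numbers, drop duplicates) are assumptions rather than the project's actual code.

```python
import re


def number_skills(descriptions: list[str]) -> str:
    """Render skill descriptions as a numbered list: '1. ...', '2. ...'."""
    return "\n".join(
        f"{number}. {text}" for number, text in enumerate(descriptions, start=1)
    )


def parse_skill_reply(reply: str, skill_count: int) -> list[int]:
    """Pull skill numbers out of a reply such as '[1, 3]'.

    Assumption: anything that is not an integer in 1..skill_count is ignored,
    and a reply without a bracketed list counts as "no skills selected".
    """
    match = re.search(r"\[([^\]]*)\]", reply)
    if match is None:
        return []

    selected: list[int] = []
    for token in match.group(1).split(","):
        try:
            number = int(token.strip())
        except ValueError:
            continue  # skip empty or non-numeric tokens
        if 1 <= number <= skill_count and number not in selected:
            selected.append(number)
    return selected
```

Small models sometimes wrap the list in extra chatter, so searching for the first bracketed list is more forgiving than parsing the whole reply as JSON.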

After some experimentation (and a surprising amount of swearing), I found that Llama 3.2 1B Instruct was brilliant at this. It’s tiny, fast, and crucially, cheap. Perfect for a yes/no‑style classification task.

I wrote a prompt that:

- Defines the role

- Specifies the output format

- Gives positive and negative examples

- Leaves zero room for ambiguity

Your role is a skill classifier. You must decide which skills apply to the user question by reading each skill and adding to the list if it applies. Return the list (0 or more) that match.

Return as a comma-delimited list within square brackets. Do not use knowledge or code.

Skills:

1. provides answers to child born after a year.

2. get the age of a person, or how old they would be if still alive.

..

23. answers queries about the individuals associated with a specific place name.

Example:

"Who is Bart's father?" reply with [18].

"Which county is closest to Oxfordshire?" reply with [12].

"How many children did Homer have? Which was born closest to 1971?" reply with [11, 10].

And it worked. Mostly.

But what if it returns… nothing?

Ah yes. The existential dread of every engineer working with LLMs.

Sometimes the model just shrugs and gives you an empty response. Not helpful.

When that happens, I have three options:

1. Tell the user I didn’t understand the question. Honest, but frustrating.

2. Include all 23 skills. Token‑expensive and likely to confuse the LLM.

3. Ask a smarter model to pick the skills. More accurate, still cheap, and gives the user the best experience.

Option 3 wins most of the time. It’s the equivalent of asking a senior engineer for help instead of pretending you know what you’re doing.
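
The escalation in option 3 could be wired up roughly like this. It's a minimal sketch: `small_model` and `smarter_model` are hypothetical callables that take the question and return a list of skill numbers, standing in for whatever model clients are actually used.

```python
from typing import Callable

# Hypothetical type: a selector takes the user question and returns skill numbers.
Selector = Callable[[str], list[int]]


def select_skills(
    question: str, small_model: Selector, smarter_model: Selector
) -> list[int]:
    """Try the cheap selector first; escalate only when it returns nothing."""
    selected = small_model(question)
    if selected:
        return selected
    # The small model shrugged, so ask the more capable model instead.
    # Assumption: if this is also empty, the caller falls back to telling
    # the user the question was not understood.
    return smarter_model(question)
```

The smarter model is only called on the empty-response path, so the common case stays as cheap as before.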