When Answers Go Wrong

After working with Llama and Reasoner, I can confirm: they are capable of producing answers that make you question your life choices. The fix depends on your patience, your token budget, and your tolerance for nonsense.

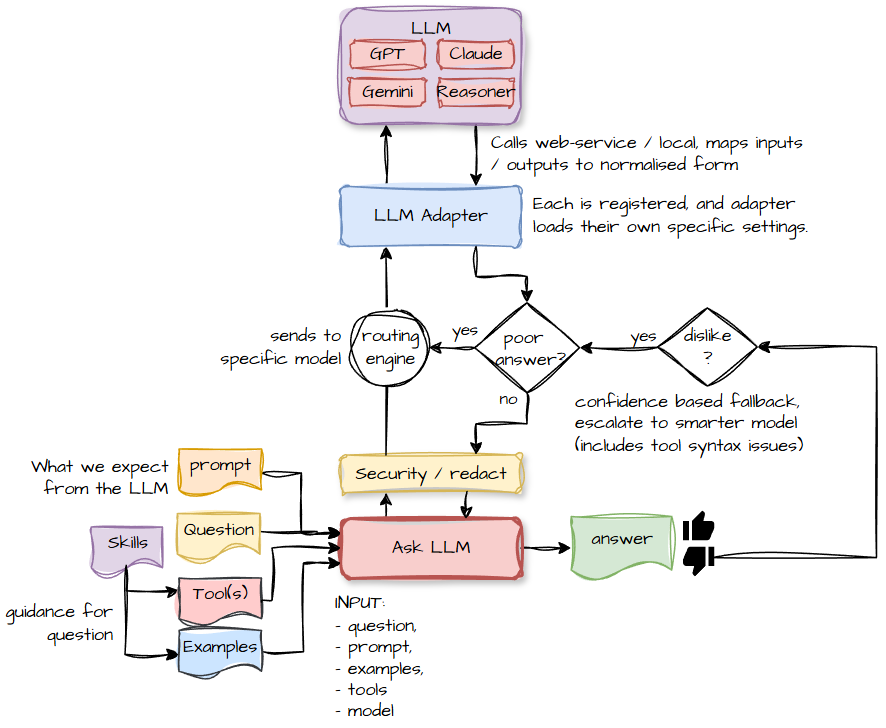

Because the app can use any LLM through an adaptor, we can route each question to a model that suits it. Some queries are simple enough that even a small model can handle them. Others need the big guns.
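Here is a minimal sketch of what the adaptor and router might look like in Python. Everything in it is an assumption for illustration: the `LLMAdapter` interface, the `StubAdapter` stand-in (a real one would call Llama, Reasoner or whichever provider you use), and the crude `looks_simple` heuristic, which only checks question length and a handful of keywords.

```python
import string
from dataclasses import dataclass
from typing import Protocol


class LLMAdapter(Protocol):
    """Hypothetical interface that every model adaptor implements."""

    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubAdapter:
    """Stand-in for a real client such as a Llama or Reasoner wrapper."""

    name: str

    def complete(self, prompt: str) -> str:
        # A real adaptor would call the provider's API here.
        return f"[{self.name}] answer to: {prompt}"


# Naive, assumed heuristic: words that hint at multi-step reasoning.
HARD_WORDS = {"why", "prove", "compare", "explain", "derive"}


def looks_simple(question: str, max_words: int = 12) -> bool:
    """Treat short questions containing no 'hard' words as simple."""
    words = [w.strip(string.punctuation) for w in question.lower().split()]
    return len(words) <= max_words and HARD_WORDS.isdisjoint(words)


def route(question: str, small: LLMAdapter, large: LLMAdapter) -> LLMAdapter:
    """Send simple questions to the small model, everything else to the large one."""
    return small if looks_simple(question) else large


if __name__ == "__main__":
    small, large = StubAdapter("small"), StubAdapter("large")
    for q in ["What is the capital of France?", "Explain why the sky is blue."]:
        print(route(q, small, large).complete(q))
```

A keyword heuristic is obviously crude. The point is that once every model sits behind the same `complete()` method, the routing rule can be swapped for something smarter without touching the rest of the app.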

If the user clicks “thumbs down”, we can escalate to a more expensive but more capable model.
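A sketch of the thumbs-down escalation, assuming the models are ordered from cheapest to most capable. `EscalatingSession` and its methods are hypothetical names, and each model is just a function from question to answer so the example runs without any API keys.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical: each tier is a (model name, answer function) pair.
Tier = Tuple[str, Callable[[str], str]]


@dataclass
class EscalatingSession:
    """Starts on the cheapest tier and moves up one tier per thumbs down."""

    tiers: List[Tier]  # ordered cheapest / least capable first
    level: int = 0

    def ask(self, question: str) -> Tuple[str, str]:
        name, answer_fn = self.tiers[self.level]
        return name, answer_fn(question)

    def thumbs_down(self, question: str) -> Optional[Tuple[str, str]]:
        """Re-ask on the next tier up; return None when no bigger model is left."""
        if self.level + 1 >= len(self.tiers):
            return None
        self.level += 1
        return self.ask(question)


if __name__ == "__main__":
    def fake(name: str) -> Callable[[str], str]:
        # Stand-in model that just labels its reply.
        return lambda q: f"{name}: {q}"

    session = EscalatingSession([("small", fake("small")), ("large", fake("large"))])
    print(session.ask("Why is the sky blue?"))
    print(session.thumbs_down("Why is the sky blue?"))
    print(session.thumbs_down("Why is the sky blue?"))  # None: nothing bigger to try
```

This version remembers the tier for the whole session, so follow-up questions stay on the bigger model. Resetting `level` for each new question would be an equally reasonable choice.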

This isn’t cheating; it’s improving the user experience.

We can even downvote our own answers, by having a second “evaluator” LLM check them. It’s not quite marking your own homework: the evaluator doesn’t need to be as smart as the answering LLM. It just needs to check whether the answer actually… answers the question.
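One way to automate the downvote is to ask a cheap evaluator model a yes/no question and escalate when it says no. This sketch assumes every model is a plain prompt-to-text function; the prompt wording and the function names are illustrative, not a tested recipe.

```python
from typing import Callable, List, Tuple

# Hypothetical: any LLM is a function from prompt text to reply text.
LLM = Callable[[str], str]


def build_judge_prompt(question: str, answer: str) -> str:
    return (
        "Does the ANSWER directly answer the QUESTION? "
        "Reply with only YES or NO.\n\n"
        f"QUESTION: {question}\n\n"
        f"ANSWER: {answer}"
    )


def judge_accepts(judge: LLM, question: str, answer: str) -> bool:
    verdict = judge(build_judge_prompt(question, answer)).strip().upper()
    # Anything that does not start with YES counts as a downvote.
    return verdict.startswith("YES")


def answer_with_self_check(
    question: str, answerers: List[LLM], judge: LLM
) -> Tuple[int, str]:
    """Try answerers in order (cheapest first) until the judge accepts one.

    Returns (index of the answerer used, its answer). If every answer is
    rejected, the last (most capable) model's answer is returned anyway.
    """
    if not answerers:
        raise ValueError("need at least one answering model")
    answer = ""
    for i, answerer in enumerate(answerers):
        answer = answerer(question)
        if judge_accepts(judge, question, answer):
            return i, answer
    return len(answerers) - 1, answer


if __name__ == "__main__":
    def small(q: str) -> str:
        return "Great question! France is lovely."

    def large(q: str) -> str:
        return "Paris."

    def judge(prompt: str) -> str:
        # Toy judge for the demo; a real one would be a cheap LLM call.
        return "YES" if prompt.endswith("ANSWER: Paris.") else "NO"

    print(answer_with_self_check("What is the capital of France?", [small, large], judge))
```

Treating anything other than a clear YES as a failure errs on the side of escalating, which costs tokens but spares the user a non-answer.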

And here’s a fun trick: If you tell an LLM its answer is wrong and ask why, it often corrects itself. Even Llama does this. It’s like watching a child realise they’ve misread the question.
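Asking for a correction is just another turn in the conversation. This sketch assumes the role/content message format that many chat APIs use; `Chat`, `build_reconsider_messages` and the wording of the pushback are hypothetical.

```python
from typing import Callable, Dict, List

# Assumption: role/content message dicts, as used by many chat APIs.
Message = Dict[str, str]
Chat = Callable[[List[Message]], str]  # hypothetical: messages in, reply text out

PUSHBACK = (
    "That answer is wrong. Explain why it is wrong, "
    "then give a corrected answer."
)


def build_reconsider_messages(question: str, first_answer: str) -> List[Message]:
    """Replay the original exchange, then tell the model it got it wrong."""
    return [
        {"role": "user", "content": question},
        {"role": "assistant", "content": first_answer},
        {"role": "user", "content": PUSHBACK},
    ]


def ask_to_reconsider(chat: Chat, question: str, first_answer: str) -> str:
    return chat(build_reconsider_messages(question, first_answer))


if __name__ == "__main__":
    def fake_chat(messages: List[Message]) -> str:
        # Stand-in for a real model call; it just reports what it was sent.
        return f"(model saw {len(messages)} messages, last: {messages[-1]['content']!r})"

    print(ask_to_reconsider(fake_chat, "How many r's are in 'strawberry'?", "Two."))
```

One caveat: a model told it is wrong may also abandon an answer that was actually right, so this works best when something, such as the user’s thumbs down, has already flagged the answer.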