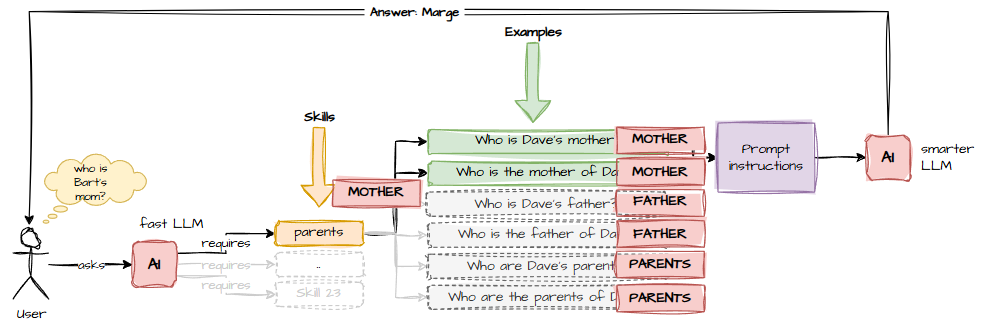

The Epiphany: Stop Matching, Start Classifying

Then, buried in a GPT transcript, I found this gem:

Instead of “which items are similar?”, think: What slot is this question filling?

TYPE = PARENTS | BIRTHPLACE | JOB | AGE | OTHER

Then route based on TYPE.

This is brilliant because:

- It’s simpler

- It’s more structured

- It’s easier for small models

- It avoids fuzzy semantic similarity

- It reduces the problem to a single label

And because everything GPT says is brilliant and works.

So I tried it.
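For the record, here is roughly what "route based on TYPE" looks like once you have a label in hand. This is a minimal sketch, not code from the transcript: the handler functions and the `ROUTES` table are hypothetical names I made up for illustration, and only three of the labels are wired in.

```python
from typing import Callable, Dict

# Hypothetical handlers, one per label. The names are illustrative only.
def answer_parents(question: str) -> str:
    return f"[parents skill] {question}"

def answer_birthplace(question: str) -> str:
    return f"[birthplace skill] {question}"

def answer_other(question: str) -> str:
    return f"[fallback] {question}"

# One label in, one handler out. No similarity scores involved.
ROUTES: Dict[str, Callable[[str], str]] = {
    "PARENTS": answer_parents,
    "BIRTHPLACE": answer_birthplace,
    "OTHER": answer_other,
}

def route(label: str, question: str) -> str:
    """Dispatch on the label; anything unrecognized falls back to OTHER."""
    handler = ROUTES.get(label.strip().upper(), answer_other)
    return handler(question)
```

The fallback matters: any label that is not in the table (JOB and AGE here, or whatever the model invents) lands on the OTHER handler instead of raising a `KeyError`.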

Test 1: Parent Questions

Classify this question into exactly one of: MOTHER, FATHER, OTHER.

Return only the label.

User: "Who is Bart's mom?"

→ MOTHER

User: "Who is Bart's dad?"

→ FATHER

User: "Where was Bart born?"

→ OTHER

Success!

I could work with this.
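If you want to wire this test into code, a sketch might look like the following. The prompt wording is the one above; everything else is an assumption on my part. In particular, `ask_model` is a hypothetical stand-in for however you call your model (it takes a prompt string and returns the raw reply), and the cleanup step is only a guess at the noise a small model tends to add around a label.

```python
from typing import Callable, Sequence

def build_prompt(labels: Sequence[str], question: str) -> str:
    """Assemble the single-label classification prompt."""
    return (
        f"Classify this question into exactly one of: {', '.join(labels)}.\n"
        "Return only the label.\n\n"
        f'User: "{question}"'
    )

def classify(
    question: str,
    labels: Sequence[str],
    ask_model: Callable[[str], str],  # hypothetical: prompt in, raw reply out
    fallback: str = "OTHER",
) -> str:
    """Return one known label, or the fallback if the reply is not one."""
    reply = ask_model(build_prompt(labels, question))
    # Trim whitespace, stray quotes, and trailing periods, then normalize case.
    cleaned = reply.strip().strip(".\"'").upper()
    return cleaned if cleaned in labels else fallback
```

Passing the model call in as a function keeps the sketch testable without a model: swap in a lambda that returns a canned reply and check what comes out.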

Then I added PARENTS.

And Llama said:

Cue the sound of my head hitting the desk.

Undeterred, I tweaked the prompt:

Pick the most specific.

And suddenly:

- “Who is Bart’s mom?” → MOTHER

- “Who are Bart’s parents?” → PARENTS

Victory! (Temporary, hollow victory, but still.)

As someone who is neurodivergent, I feel qualified to say this: working with Llama is like giving instructions to someone who takes everything literally. It will follow your words precisely – and miss the meaning entirely.

Extending the Approach: Multi‑Label Classification

Sometimes you want multiple labels. For example:

- “Who are Bart’s parents?” could reasonably return MOTHER, FATHER

- And you might attach both examples to the “parents” skill

So I tried:

If it matches multiple, provide a comma‑delimited list.

And yes, it returned MOTHER, FATHER instead of PARENTS.

This is fine, as long as you understand how the model behaves and route accordingly.
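"Route accordingly" can be as simple as treating MOTHER plus FATHER as another way of saying PARENTS. A minimal sketch, assuming the reply really is a comma-delimited list of labels; `KNOWN`, `parse_labels`, and `skills_for` are hypothetical names, and the skill names are just the lowercased labels.

```python
from typing import List, Set

KNOWN = {"MOTHER", "FATHER", "PARENTS", "OTHER"}

def parse_labels(reply: str) -> Set[str]:
    """Split a comma-delimited reply and keep only the labels we know."""
    parts = {part.strip().upper() for part in reply.split(",")}
    labels = parts & KNOWN
    return labels or {"OTHER"}

def skills_for(labels: Set[str]) -> List[str]:
    """Map labels to skills, treating MOTHER + FATHER as the parents skill."""
    if "PARENTS" in labels or {"MOTHER", "FATHER"} <= labels:
        return ["parents"]
    return sorted(label.lower() for label in labels)
```

With this in place, it no longer matters whether the model answers PARENTS or MOTHER, FATHER: both replies end up at the same skill.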