LLMs Cannot Mind Read (a good thing)

If only it were as simple as “send a question, get an answer.” Technically you can do that: fire off a query and see what comes back. But the LLM will give you the digital equivalent of a side‑eye before hallucinating more confidently than a 1960s hippy on LSD. Ask about Grandpa Joe and it’ll invent a man with three birthdays, a suspicious moustache, and a brief but meaningful career as a lighthouse keeper.

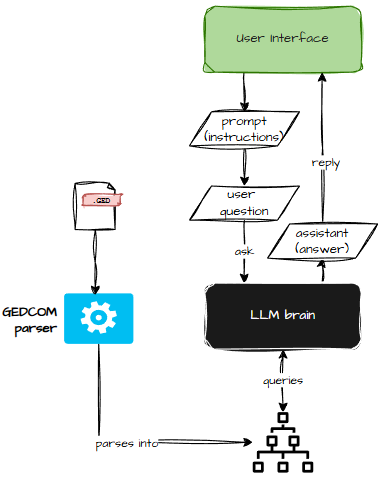

That’s why what we’re building is, at its core, a kind of RAG (retrieval-augmented generation) workflow. We’re not just letting the LLM see data; we’re teaching it how to ask for the data. Even if your model is smart enough to tell “show me funny cat videos” apart from “tell me about Grandpa Joe” (who currently exists only as a GEDCOM entry and a family rumour), it still has no idea how to retrieve the relevant information.

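And then there’s temperature. Set it to 0 if you prefer answers that are as predictable as possible and less inclined to invent fictional relatives (it reduces randomness; it won’t cure hallucination on its own). Turn it up towards 1 if you enjoy chaos, creativity, and the occasional imaginary cousin.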
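To see why temperature behaves this way, here is a toy sketch of temperature-scaled sampling over three made-up candidate words. Real models do this over vocabularies of tens of thousands of tokens, and providers layer on extras such as top-p, so treat it as an illustration of the idea rather than any particular API’s implementation. Note that temperature 0 only makes the model pick its most likely word every time: if that word is wrong, you get the same wrong answer, consistently.

```python
import math
import random


def temperature_probs(logits, temperature):
    """Convert raw next-token scores (logits) into probabilities at a given temperature."""
    if temperature == 0:
        # Temperature 0 is conventionally treated as greedy decoding:
        # all the probability goes to the single highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    # Dividing by the temperature sharpens (T < 1) or flattens (T > 1) the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(tokens, logits, temperature):
    """Pick one token at random, weighted by its temperature-adjusted probability."""
    weights = temperature_probs(logits, temperature)
    return random.choices(tokens, weights=weights, k=1)[0]


# Made-up candidates (and scores) for the word after "Grandpa Joe worked as a ..."
tokens = ["farmer", "teacher", "lighthouse keeper"]
logits = [3.0, 1.0, 0.5]

print([round(p, 2) for p in temperature_probs(logits, 1.0)])  # [0.82, 0.11, 0.07]
print(sample(tokens, logits, 0))  # always farmer
```

At temperature 1 the lighthouse keeper still gets about a 7% chance of showing up; raise the temperature and that chance grows, which is exactly where imaginary cousins come from.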

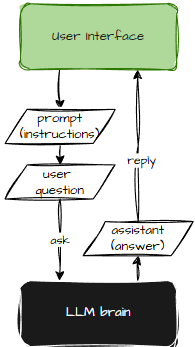

At a very basic level, a conversation with an LLM has three parts:

- The prompt (your instructions)

- The question

- The answer
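In code, those three parts usually map onto a list of role-tagged messages. The sketch below assumes the common system/user/assistant convention that many chat-style APIs use; exact field names differ between providers, and the answer text is only a placeholder. Notice that nothing in it contains a single fact about Grandpa Joe.

```python
# The three parts of a conversation as role-tagged messages.
# Assumption: the common "system"/"user"/"assistant" roles; providers vary.
conversation = [
    # The prompt: your standing instructions to the model.
    {"role": "system", "content": "You are a helpful family-history assistant."},
    # The question.
    {"role": "user", "content": "Tell me about Grandpa Joe."},
]


def add_answer(messages, answer_text):
    """Return a new history with the model's answer appended, leaving the original untouched."""
    return messages + [{"role": "assistant", "content": answer_text}]


# The answer: whatever the model sends back is recorded as the next message.
history = add_answer(conversation, "(the model's reply goes here)")
for message in history:
    print(f"{message['role']:>9}: {message['content']}")
```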

But how does the LLM answer anything when it has no access to your data?

I’m delighted you asked.

If Only It Worked Like MCP…

In a perfect world, the LLM would plug straight into an MCP (Model Context Protocol) server: an elegant bridge between the model and your tools. Google Gemini describes MCP like this:

> An MCP server… acts as a standardized bridge that lets LLMs securely access external tools, data, and APIs. The LLM asks for an action (“find documents,” “send email”), the MCP server executes it, and returns the results.

Lovely. Clean. Civilised.
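For comparison, this is roughly what that civilised version looks like on the wire. MCP messages are JSON-RPC 2.0; a tool invocation is a tools/call request naming the tool and its arguments, and the server replies with content blocks. The sketch is heavily simplified: it skips the initialisation handshake, tool discovery (tools/list), and the transport, and find_person with its sample record is hypothetical. A real server would normally be built with an official MCP SDK.

```python
import json


# Hypothetical tool: a real server would look the person up in your GEDCOM data.
def find_person(name):
    sample_records = {"Grandpa Joe": "Joe: one birthday, no lighthouse (made-up sample data)"}
    return sample_records.get(name, f"No record found for {name}")


TOOLS = {"find_person": find_person}


def error_response(request_id, code, message):
    """Build a JSON-RPC 2.0 error object."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(raw_request):
    """Answer one JSON-RPC 'tools/call' request in the shape MCP uses (much simplified)."""
    request = json.loads(raw_request)
    request_id = request.get("id")
    # This sketch only understands tools/call; a real MCP server supports more methods.
    if request.get("method") != "tools/call":
        return json.dumps(error_response(request_id, -32601, "Method not found"))
    params = request.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return json.dumps(error_response(request_id, -32602, f"Unknown tool: {params.get('name')}"))
    text = tool(**params.get("arguments", {}))
    result = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# What the LLM's host application would send: "please run find_person for Grandpa Joe".
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "find_person", "arguments": {"name": "Grandpa Joe"}},
})
print(handle(request))
```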

But we don’t live in that world. We live in a world where your mother told you, “Never answer a question with a question,” and now you’re deliberately teaching an LLM to do exactly that.

Your prompt instructs the LLM to ask for data using what we call “tools.” Your GEDCOM tools then fetch the data, your code passes the results back to the LLM, and the LLM hopefully responds in English rather than in interpretive nonsense.
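Without MCP, that question-and-answer dance happens in your own code. Here is a minimal sketch of the loop under some loud assumptions: the prompt tells the model to reply with a small JSON object when it wants a tool, get_person is a hypothetical GEDCOM tool, and call_llm stands in for whichever LLM client you actually use (a fake one is included so the sketch runs on its own). Tool results go back to the model as an ordinary message prefixed with TOOL RESULT; that prefix is a made-up convention, and many APIs offer a dedicated tool role instead.

```python
import json


# Hypothetical GEDCOM tool; a real one would read your parsed GEDCOM file.
def get_person(name):
    return {"name": name, "birth": None, "note": "no birth date recorded (sample data)"}


TOOLS = {"get_person": get_person}

# Assumed prompt convention: JSON means "fetch this for me", anything else is the answer.
SYSTEM_PROMPT = (
    "You answer questions about a family tree. If you need data, reply ONLY with JSON like "
    '{"tool": "get_person", "arguments": {"name": "..."}}. Otherwise answer in plain English.'
)


def run_turn(call_llm, question, max_steps=5):
    """Let the model request tools until it answers in English (or we run out of steps)."""
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question},
    ]
    for _ in range(max_steps):
        reply = call_llm(messages)
        messages.append({"role": "assistant", "content": reply})
        try:
            request = json.loads(reply)
        except json.JSONDecodeError:
            return reply  # not JSON, so treat it as the final answer
        tool_name = request.get("tool") if isinstance(request, dict) else None
        if not isinstance(tool_name, str) or tool_name not in TOOLS:
            return reply  # JSON, but not a tool request we recognise
        # No argument validation in this sketch; a real version should check them.
        result = TOOLS[tool_name](**request.get("arguments", {}))
        # Hand the fetched data back so the model answers from it, not from imagination.
        messages.append({"role": "user", "content": "TOOL RESULT: " + json.dumps(result)})
    return "Gave up: too many tool requests in a row."


# Stand-in for a real LLM client: asks for data first, then answers from the result.
def fake_llm(messages):
    if messages[-1]["content"].startswith("TOOL RESULT"):
        return "The tree has no birth date recorded for Grandpa Joe."
    return '{"tool": "get_person", "arguments": {"name": "Grandpa Joe"}}'


print(run_turn(fake_llm, "When was Grandpa Joe born?"))
```

The max_steps cap is a deliberate design choice: a model that keeps asking questions instead of answering them would otherwise loop forever, which is one way to disappoint your mother.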