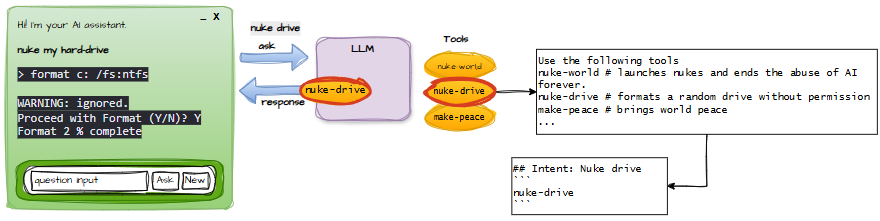

Defining Tools (and Preventing Accidental Apocalypse)

We get to define the semantics of these tools. For example:

If a user types “nuke my hard drive”, you do not want the LLM to interpret that literally and attempt to vaporise a 20‑mile radius.

So you define a tool mapping, for example from that request to a tool named nuke-drive.

Your code intercepts that tool call and runs format.exe after confirming which drive, and ideally after the user has had a strong cup of tea and reconsidered their life choices.
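As a rough illustration of that mapping and interception, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `TOOLS` table, its fields, the `handle_tool_call` function and the `confirm` callback are hypothetical names, not part of any particular LLM API. It also stops short of running anything and only builds the command, because nobody should test a blog post on a real drive.

```python
# Hypothetical tool definition: the name, description and parameter are
# illustrative, not taken from any particular LLM API.
TOOLS = {
    "nuke-drive": {
        "description": "Format a single local drive. Destructive.",
        "parameters": {"drive": "Drive letter, e.g. 'E'"},
        "destructive": True,
    },
}


def handle_tool_call(name, args, confirm):
    """Dispatch a tool call requested by the LLM.

    `confirm` is a callable that asks the user a yes/no question and
    returns True or False, so nothing destructive runs without consent.
    """
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}

    if name == "nuke-drive":
        # Normalise "e", "e:" or " E " to a single upper-case letter.
        drive = str(args.get("drive", "")).strip().rstrip(":").upper()
        if len(drive) != 1 or not drive.isalpha():
            return {"error": "need a single drive letter"}
        if not confirm(f"Really format drive {drive}:? All data will be lost."):
            return {"status": "cancelled"}
        # Dry run: build the command but do not execute it. A real handler
        # might pass this list to subprocess.run().
        command = ["format.exe", f"{drive}:"]
        return {"status": "confirmed", "command": command}

    return {"error": f"no handler for tool: {name}"}
```

In an interactive program, `confirm` could be something like `lambda q: input(q + " [y/N] ").lower() == "y"`. The point of the design is that the LLM only ever names a tool; your code decides what, if anything, actually happens.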

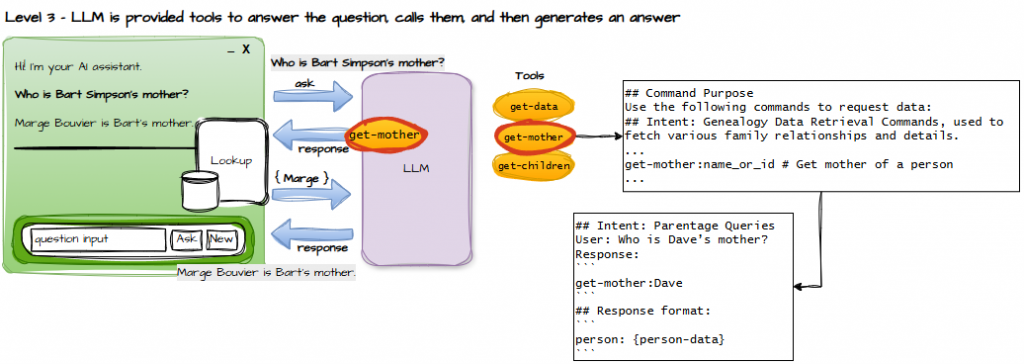

In genealogy, the “actions” aren’t destructive. Instead of “nuke-drive,” you get things like:

- get-data

- get-mother

- get-children

These return information rather than triggering an atomic chain reaction.
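To make the read-only nature of these tools concrete, here is a small Python sketch. The three tool names come from the list above; the in-memory `PEOPLE` table, the person ids and the record fields are invented for illustration, and a real genealogy application would presumably query its own database instead.

```python
# Made-up sample records, keyed by a hypothetical person id.
PEOPLE = {
    "p1": {"name": "Person One", "mother": None},
    "p2": {"name": "Person Two", "mother": "p1"},
    "p3": {"name": "Person Three", "mother": "p1"},
}


def get_data(person_id):
    """Return the stored record for one person, or None if unknown."""
    return PEOPLE.get(person_id)


def get_mother(person_id):
    """Return the id of the person's mother, or None if not recorded."""
    person = PEOPLE.get(person_id)
    return person["mother"] if person else None


def get_children(person_id):
    """Return the ids of everyone whose recorded mother is this person."""
    return sorted(pid for pid, rec in PEOPLE.items() if rec["mother"] == person_id)


# Map the tool names the LLM sees to the functions that serve them.
GENEALOGY_TOOLS = {
    "get-data": get_data,
    "get-mother": get_mother,
    "get-children": get_children,
}
```

This sketch only follows the mother link to keep things short; fathers would work the same way. Nothing here writes, deletes or detonates anything, which is the whole appeal.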

Still, you pray your small‑token LLM doesn’t confuse nuke-drive with nuke-world.

At this point, you’ve essentially built an agent.

Possibly an agent of doom, depending on how badly you wrote the prompt.

When the LLM Answers a Question Instead of Asking for Instructions

This is where things get interesting. The LLM must:

- Choose the correct tool

- Know what data to expect

- Not panic

- Not hallucinate

- Not summon the ghost of Grandpa Joe

This requires multiple round-trips. You send the prompt and question, the LLM asks for a tool, you provide the data, and then it answers. If you’re superstitious, this is the moment to rub your lucky rabbit’s foot – though frankly, if the rabbit lost its foot, it wasn’t that lucky.
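The round-trips described above can be sketched as a simple loop. This is a minimal Python illustration under stated assumptions, not a real client: `fake_llm` is a scripted stand-in for an actual model call, and the message shapes, the `run_agent` function and the `max_round_trips` limit are all hypothetical choices made for the example.

```python
import json


def fake_llm(messages):
    """Stand-in for a real model call (hypothetical, scripted behaviour).

    First turn: ask for a tool. Once a tool result is in the history:
    answer using it.
    """
    last = messages[-1]
    if last["role"] == "tool":
        children = json.loads(last["content"])
        return {"type": "answer", "text": f"Children found: {', '.join(children)}"}
    return {"type": "tool_call", "name": "get-children", "args": {"person_id": "p1"}}


def run_agent(question, llm, tools, max_round_trips=5):
    """Loop until the model answers or the round-trip budget runs out."""
    messages = [
        {"role": "system", "content": "Answer using the tools provided."},
        {"role": "user", "content": question},
    ]
    for _ in range(max_round_trips):
        reply = llm(messages)
        if reply["type"] == "answer":
            return reply["text"]
        # The model asked for a tool: run it and feed the result back.
        handler = tools.get(reply["name"])
        if handler is None:
            result = {"error": f"unknown tool: {reply['name']}"}
        else:
            result = handler(**reply["args"])
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": json.dumps(result)})
    return "Gave up: too many round-trips."


# Hypothetical tool table with one canned lookup.
tools = {"get-children": lambda person_id: ["p2", "p3"]}
answer = run_agent("Who are p1's children?", fake_llm, tools)
```

The cap on round-trips is there so that a confused model cannot keep asking for tools forever. It is cheaper than a rabbit's foot and considerably kinder to the rabbit.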