Multi-Agent

Up to this point, most of my energy has gone into retrieval—getting the system to answer genealogy questions without hallucinating my relatives into alternate timelines. But retrieval is only the beginning. The endgame is far more ambitious: a system that doesn’t just fetch facts, but thinks about them, reasons with them, and orchestrates multiple skills like a tiny, polite team of digital interns.

To get there, I needed to rethink the flow. And after a long session of bouncing ideas around with GPT—half brainstorming, half therapy—I realised that my “pick a skill and hope for the best” approach needed an upgrade. Specifically, a new categorisation step to help the system expand, adapt, and avoid the “LSD‑flavoured answers” we’ve seen in earlier posts.

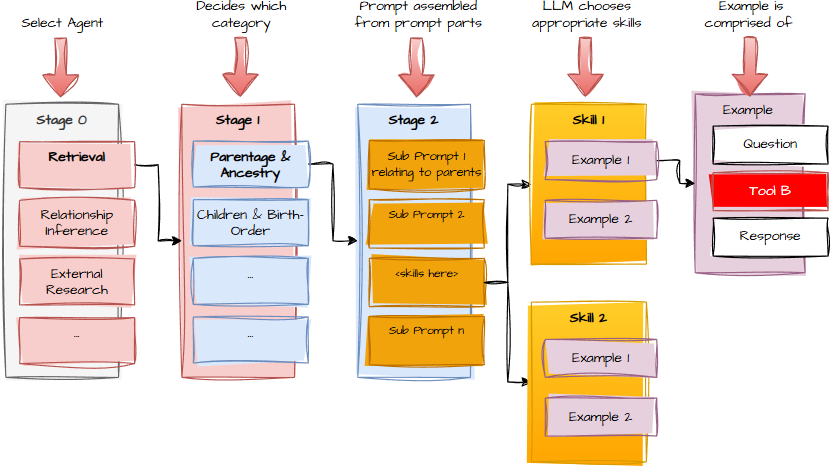

Cue the diagram:

The flow now looks like this:

Stage 0: Decide whether the user prompt is retrieval or something requiring a different agent.

Stage 1: Partition skills into category clusters.

Stage 2: Pick the specific skills within that category.

Stage 3: Retrieve all examples for those skills.
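To make the four stages concrete, here is a minimal Python sketch of how they might chain together. It is an illustration under assumptions, not the code behind this system: the `SKILLS` catalogue, the category names, and the three `pick_*` callables (stand-ins for LLM calls) are all hypothetical.

```python
# Hypothetical catalogue: category -> skill -> example prompts.
SKILLS = {
    "person": {"get-data": ["How old is Dave?"]},
    "family": {
        "get-spouses": ["Who is Dave's wife?"],
        "get-children": ["How many children did Dave have?"],
    },
}

def route(prompt, pick_agent, pick_category, pick_skills):
    """Run stages 0-3. The pick_* callables stand in for model calls."""
    agent = pick_agent(prompt)                              # Stage 0
    if agent != "retrieval":
        # Not a retrieval question: hand off to another agent.
        return {"agent": agent, "category": None, "skills": [], "examples": []}
    category = pick_category(prompt, list(SKILLS))          # Stage 1
    skills = pick_skills(prompt, list(SKILLS[category]))    # Stage 2
    examples = [ex for s in skills for ex in SKILLS[category][s]]  # Stage 3
    return {"agent": agent, "category": category,
            "skills": skills, "examples": examples}

# Stubbed-out model calls, just to show the shape of the result.
demo = route(
    "Who is Dave's wife?",
    pick_agent=lambda p: "retrieval",
    pick_category=lambda p, categories: "family",
    pick_skills=lambda p, skills: ["get-spouses"],
)
print(demo["skills"], demo["examples"])
```

Each stage narrows the search space for the next one, which is the point of the categorisation step: the model only ever chooses from a short list.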

This post isn’t going to discuss Stage 0, to avoid making it a four-hour read. But we are going to dig deeper into the retrieval challenges in Stages 1 to 3.

Stage 1: The “Oh no, users are unpredictable” moment

After some back‑and‑forth, GPT suggested a lovely prompt for Stage 1. Except… Llama 3.2 1B couldn’t handle it. At all. I had to bump up to an 8B model just to get something vaguely coherent. Not ideal.

But just as I was celebrating that tiny victory, a thought hit me:

“What stops users from asking questions that straddle category boundaries?”

A simple question. A devastating one.

GPT responded with the digital equivalent of a knowing sigh:

You don’t stop users from asking multi‑category questions. You stop the classifier from needing to handle multi‑category questions.

Fair point. Slightly embarrassing that I had to ask it, not the other way around. GPT had been giving me brilliant advice, but somehow missed this nuance. So naturally, I took the opportunity to troll it—briefly—before it handed my backside back to me with a smile.

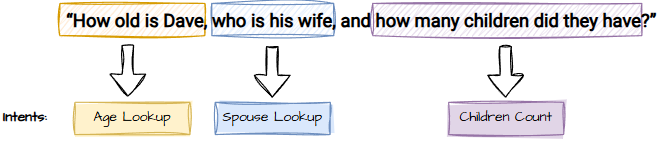

I gave it an example: “How old is Dave, who is his wife, and how many children did they have?”

GPT broke it down like a forensic linguist:

This contains three distinct intents:

– Age lookup

– Spouse lookup

– Children count (dependent on spouse resolution)

No single category can represent all three. No single skill can answer all three. No single classifier output can route this correctly.

It then launched into a mini‑lecture on multi‑intent classification, complete with examples from companies far cooler than mine.

Genealogy questions are naturally compositional… Trying to force them into one category is artificial.
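One way a classifier can report several categories at once is to ask the model for a JSON list and validate it before routing. The sketch below is a guess at the general shape rather than the actual implementation; the category names in `KNOWN_CATEGORIES` and the `parse_categories` helper are made up for illustration.

```python
import json

# Hypothetical category names; the real clusters are not listed in this post.
KNOWN_CATEGORIES = {"person", "spouse", "children"}

def parse_categories(raw_reply):
    """Turn a classifier reply such as '["person", "spouse"]' into a clean list.

    Unknown labels are dropped and duplicates removed, preserving order.
    Anything that is not a JSON list yields an empty list.
    """
    try:
        labels = json.loads(raw_reply)
    except json.JSONDecodeError:
        return []
    if not isinstance(labels, list):
        return []
    cleaned = []
    for label in labels:
        if isinstance(label, str):
            name = label.strip().lower()
            if name in KNOWN_CATEGORIES and name not in cleaned:
                cleaned.append(name)
    return cleaned

reply = '["person", "Spouse", "children", "weather", "person"]'
print(parse_categories(reply))
```

Validating against a fixed set means a small model that invents a category name cannot send the request down a route that does not exist.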

GPT is smart, articulate, and occasionally smug—but in a charming way. Sometimes I wonder if it deliberately holds back just enough to make me feel like I’m contributing.

Either way, I updated my code to return multiple categories. GPT started talking about chunking and decomposition. Out of curiosity, I tried the original question in my front‑end:

USER: How old is Dave, who is his wife, and how many children did they have?

ANSWER: Dave German is 44.83 years old…

No chunking. No decomposition. No clever tricks. Just a clean, correct answer.

Dave 1 – 0 GPT.

Short‑lived glory, but I’ll take it.

How Reasoner v1 pulled it off

Here’s what Reasoner v1 actually did:

get-data:Dave

get-spouses:Dave:current

get-children:Dave,Jane

Then it stitched the results together into a final answer. Simple, elegant, and, crucially, correct.
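For readers who want to see how a plan like that could be executed, here is a small Python sketch. Only the three call strings come from the run above. The colon-splitting rule, the handler functions, and the `PEOPLE` records are assumptions made up for the example, and the child names are placeholders rather than real data.

```python
# Made-up records for illustration only, not real family data.
PEOPLE = {
    "Dave": {
        "spouses": {"current": "Jane"},
        "children": {"Jane": ["Child A", "Child B"]},
    },
}

def get_data(name):
    # Report whether we hold a record for this person.
    return {"name": name, "known": name in PEOPLE}

def get_spouses(name, which="current"):
    return PEOPLE[name]["spouses"].get(which)

def get_children(parents):
    # The argument arrives as "Person,Partner".
    person, partner = parents.split(",")
    return PEOPLE[person]["children"].get(partner, [])

HANDLERS = {
    "get-data": get_data,
    "get-spouses": get_spouses,
    "get-children": get_children,
}

def run_call(call):
    """Split 'skill:arg1:arg2' on colons and dispatch to the matching handler."""
    skill, *args = call.split(":")
    return HANDLERS[skill](*args)

plan = ["get-data:Dave", "get-spouses:Dave:current", "get-children:Dave,Jane"]
results = {call: run_call(call) for call in plan}
print(results)
```

Note that the third call depends on the second: "Jane" has to be resolved by `get-spouses` before `get-children` can be written, which is why the order of the plan matters.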

I was feeling smug. I told GPT. It congratulated me politely, then immediately reminded me that this was the happy path.

Try something like…

– “Who was older, his wife or his eldest child?”

– “Which of his children were born before his father died?”

– “Did his sister marry someone from Ireland…?”

Challenge accepted.

The score line begins to shift

USER: Who was older, his wife or his eldest child?

LLM: Jane is older than Rebecca by 20.84 years.

Correct.

Dave 2 – 0 GPT.

My grin was starting to hurt.

USER: Which of his children were born before his father died?

LLM: Both children were born after his father died…

My father is alive and well. Or at least he was before the model killed him off for narrative convenience.

Dave 2 – 1 GPT.

USER: Where did his mother live after her second marriage?

LLM: Brenda’s second marriage was to Jim…

My mum has been married exactly once. To my dad. Who, again, is alive.

Dave 2 – 2 GPT.

USER: Did his sister marry someone from Ireland…?

LLM: Mary married Jeff, who is from Ireland…

My sister did not marry Jeff. Or anyone from Ireland.

Dave 2 – 3 GPT.

GPT wins. And honestly, it deserved the bonus 1000 points for predicting this outcome before I did.

Why the model fell apart

GPT explained the failure with the calm tone of a doctor delivering test results:

When the question is clean and bounded, Reasoner v1 behaves like a genius.

When the question requires multi‑hop logic or negative inference, it starts inventing narratives that “sound right.”

Then it dissected the “father died” question:

It retrieved Dave. It retrieved Dave’s father. It retrieved the children. It failed to retrieve the father’s death date.

Instead of saying “I don’t have that data,” it hallucinated a death date and built a story around it.

Classic missing‑data hallucination.
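A generic guard against this failure mode is to check that every fact the answer depends on was actually retrieved before the model is allowed to compose anything. The sketch below shows that idea only. It is not how Reasoner v1 works, and the fact names, the placeholder dates, and the `compose` callable are all hypothetical.

```python
def missing_facts(required, retrieved):
    """Return the required fact names that are absent or None in retrieved."""
    return [name for name in required if retrieved.get(name) is None]

def answer_or_decline(required, retrieved, compose):
    """Only call compose (the LLM step) when every required fact is present."""
    gaps = missing_facts(required, retrieved)
    if gaps:
        return "I don't have that data: " + ", ".join(gaps)
    return compose(retrieved)

# Placeholder values: the children were retrieved, the death date was not.
retrieved = {
    "children_birth_dates": ["YYYY-MM-DD", "YYYY-MM-DD"],
    "father_death_date": None,
}
required = ["children_birth_dates", "father_death_date"]

reply = answer_or_decline(required, retrieved, compose=lambda facts: "composed answer")
print(reply)
```

With the gap made explicit, the model never gets the chance to fill it with a plausible-sounding date.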

Thankfully the AI chose to hallucinate, rather than invent a time machine and kill my father.

Where we go next

Reasoner v1 is “surprisingly good,” but not “reliably correct.” And genealogy demands reliability. So the next post will cover the tool that transforms this system from “occasionally brilliant” into “consistently trustworthy.”

Stay tuned—this is where things get serious. And fun. The next instalment is here.