Before we dig into the detail, let me show what is happening.

Step 1 – get to Sevastopol. You can set waypoints, and the boat travels via them. No AI is necessary for that; a simple ATAN2 computes the angles. Remember, don’t use AI for the sake of it. In practice a simple GPS receiver would be used, with the exact coordinates to program in obtained via something as simple as Google.
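As a rough illustration of the ATAN2 step, here is a minimal sketch of heading towards a waypoint. The helper names (HeadingToWaypointDegrees, HasReachedWaypoint) and the flat x/y coordinate system are my assumptions for the example, not code from the project.

```csharp
using System;

// Hypothetical helper: bearing in degrees from the boat to the next waypoint.
// ASSUMPTION: a flat x/y world (screen pixels or local metres), angle 0 along +x,
// angles growing towards +y (which points down the screen in most drawing APIs).
double HeadingToWaypointDegrees(double boatX, double boatY, double waypointX, double waypointY)
{
    double radians = Math.Atan2(waypointY - boatY, waypointX - boatX);
    return radians * 180.0 / Math.PI;
}

// Hypothetical helper: true once the boat is within arrivalRadius of the waypoint,
// at which point the caller would switch to the next waypoint.
bool HasReachedWaypoint(double boatX, double boatY, double waypointX, double waypointY, double arrivalRadius)
{
    double dx = waypointX - boatX;
    double dy = waypointY - boatY;
    return dx * dx + dy * dy <= arrivalRadius * arrivalRadius;
}

// A waypoint diagonally away from the boat gives a heading of roughly 45 degrees.
Console.WriteLine(HeadingToWaypointDegrees(0, 0, 10, 10));
Console.WriteLine(HasReachedWaypoint(0, 0, 3, 4, 5));
```

Comparing squared distances avoids a square root on every move, which matters little here but costs nothing.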

Step 2 – upon arriving at Sevastopol, search for the Makarov, rotating towards it.

The triangle shown is not the LIDAR that you see quite frequently on my blog; it is present only during “debug” so you can see where the drone boat thinks it’s “looking”. The “Makarov” is denoted by the line through its centre. (Right mouse click, then open in a new window, will give you a better view.)

Is this really using vision recognition? Yes. No smoke and mirrors!

Sadly I won’t win any awards, as YOLO would do a better job of visual detection. But it is truly doing what I had hoped at the outset.

Please note:

- According to specifications on the internet, the drone boats can only travel 60 miles (96.5km).

- Odesa is 300km from Sevastopol. The closest point outside Crimea is about 96.5km away; alas, that’s as the crow flies and neglects the fact that boats require water. So in reality, either the drones were launched from Crimea, or out at sea (how did the Russians not notice?).

- Speed x20 / x90. You’re bound to be impatient like me. Drone boats only travel at 20.5 metres/second (about 74km/hour), so covering the 300km to the final waypoint takes about 4 hours. None of us wants to wait for that, so I sped up the drone boat simulation.

- We are assuming the Makarov will always be perpendicular to the boat. In the days of cannons, they fired sideways, so this absolutely helped them to inflict damage. With modern weapons and fire control, this is wishful thinking. In reality, you’d need to contend with the fact the ship could be at any angle. Could we teach it that? Probably.

- For simplicity, we ignore the impact of waves acting on the boat. Lateral drift would be corrected automatically by the steering, but in reality the boat also bobs vertically with the waves, which we don’t model.

- Having perfect boat physics and a camera that sees an accurate image is less of a concern as this is a concept for fun, not a simulation.

- Our camera has a low resolution at 200×80 pixels (16,000), mostly because training is quicker with fewer pixels.

- It sees the “boat” but not the “ocean” because it captures the image before the Black Sea is painted below pixel 80 (81-120, to be precise).

Steering the Drone boat

Without a direction, the boat will head forwards.

The AI decides based on what the camera sees. Of course, not being a real camera, we have to fake the “camera” output.

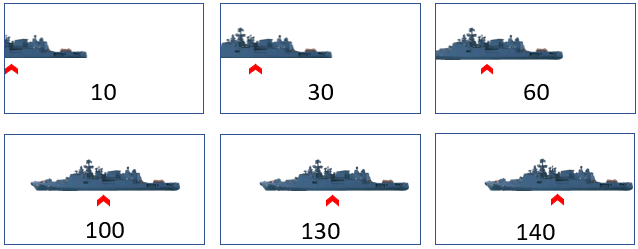

When the AI is given our camera image as an input, it returns a pixel offset of where the target ship is with respect to the camera, e.g., below.

Using the value returned, it decides how much we need to rotate the drone.

- “100” requires travelling head-on

- “10” requires hard steering to the left.

The code to do this is fairly simple:

    /// <summary>
    /// Steers the boat using AI.
    /// </summary>
    internal override void Move()
    {
        droneBrainIsAttachedTo.CameraAtFrontOfDrone.TakeSnapShot();

        Bitmap? cameraOutput = droneBrainIsAttachedTo.CameraAtFrontOfDrone.ImageFromCamera;

        if (cameraOutput != null)
        {
            locationCentreOfTargetWithRespectToCamera = (int)Math.Round(brain.AIMapCameraImageIntoTargetX(CameraToTargetBrain.CameraPixelsToDoubleArray(Edges(cameraOutput))));

            // left of centre requires us to INCREASE the angle, right requires us to DECREASE
            float angleToTurn = -(locationCentreOfTargetWithRespectToCamera - 100f) / 100f * (float)Camera.c_FOVAngleDegrees;

            if (!hasLocked && Math.Abs(angleToTurn) < 10)
            {
                hasLocked = true;
                droneBrainIsAttachedTo.worldController.Telemetry($">> LOCK ON @ {angleToTurn}");
                targetAngle = angleToTurn;
            }

            // avoid issues as we get very close, just keep on course.
            if (hasLocked && Math.Abs(angleToTurn - lastAngleToTurn) > 15f)
            {
                angleToTurn = targetAngle;
            }

            lastAngleToTurn = angleToTurn;

            // limit the change in heading to +/- 5 degrees per move
            droneBrainIsAttachedTo.AngleInDegrees = (droneBrainIsAttachedTo.AngleInDegrees + angleToTurn).Clamp(droneBrainIsAttachedTo.AngleInDegrees - 5, droneBrainIsAttachedTo.AngleInDegrees + 5);
        }
    }

Primarily this next line of code performs the magic. It converts the camera image to a double array of pixels and then asks the “brain” where it sees the Makarov.

    locationCentreOfTargetWithRespectToCamera = (int)Math.Round(brain.AIMapCameraImageIntoTargetX(CameraToTargetBrain.CameraPixelsToDoubleArray(Edges(cameraOutput))));

There is a non-obvious optimisation – the concept of “lock on” enabling us to fill in for poor AI directions. If the angle deviates more than 15 degrees after lock-on, we ignore the AI and continue on the same path. If it was a “blip”, then we are already on target.

We also use Clamp() to ensure the drone doesn’t turn too much each time (clamped at +/- 5 degrees); after all, boats cannot pivot instantly on the spot, thanks to the laws of physics and drag from the water.
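To see the lock-on and clamp working together, here is a minimal standalone sketch that mirrors the logic of Move() without the drone, camera or brain. The 45 degree field of view is an assumed stand-in for Camera.c_FOVAngleDegrees, and the helpers AngleToTurn and Steer are hypothetical names for this example only.

```csharp
using System;

const float fovDegrees = 45f;      // ASSUMPTION: stand-in for Camera.c_FOVAngleDegrees
const float cameraCentreX = 100f;  // centre column of the 200-pixel-wide camera
const float maxTurnPerMove = 5f;   // the +/- 5 degree clamp

// Convert the AI's pixel estimate into a requested turn in degrees.
// Left of centre gives a positive angle, right of centre a negative one.
float AngleToTurn(float targetX) => -(targetX - cameraCentreX) / cameraCentreX * fovDegrees;

// One steering step; returns the new heading. Lock-on state is passed by ref.
float Steer(float heading, float targetX, ref bool hasLocked, ref float lockedAngle, ref float lastAngle)
{
    float angle = AngleToTurn(targetX);

    // First time the target is nearly dead ahead: remember that course.
    if (!hasLocked && Math.Abs(angle) < 10f)
    {
        hasLocked = true;
        lockedAngle = angle;
    }

    // After lock-on, a jump of more than 15 degrees is treated as a blip and ignored.
    if (hasLocked && Math.Abs(angle - lastAngle) > 15f) angle = lockedAngle;

    lastAngle = angle;
    return heading + Math.Clamp(angle, -maxTurnPerMove, maxTurnPerMove);
}

bool locked = false;
float lockAngle = 0f, previousAngle = 0f, boatHeading = 90f;

boatHeading = Steer(boatHeading, 10f, ref locked, ref lockAngle, ref previousAngle);
Console.WriteLine(boatHeading);  // 95: hard left requested, clamped to +5 degrees
boatHeading = Steer(boatHeading, 100f, ref locked, ref lockAngle, ref previousAngle);
Console.WriteLine(boatHeading);  // 95: target dead ahead, lock-on happens here
boatHeading = Steer(boatHeading, 10f, ref locked, ref lockAngle, ref previousAngle);
Console.WriteLine(boatHeading);  // 95: the sudden "hard left" is ignored as a blip
```

Adding a clamped angle to the heading is equivalent to the original's clamping of the new heading between the old heading minus 5 and plus 5.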

brain.AIMapCameraImageIntoTargetX() is the AI that converts an image into a position.
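For readers who want to picture what such a call does internally, here is a hedged sketch of a forward pass through a tiny inputs-to-hidden-to-output network. This is not the project's NeuralNetwork class: the function name PredictTargetX, the tanh hidden activation and the sigmoid output are all assumptions chosen for illustration.

```csharp
using System;

// Hypothetical forward pass: pixels -> hidden neurons -> single output -> pixel column.
// ASSUMPTION: tanh on the hidden layer, sigmoid on the output (so it lies in 0..1).
double PredictTargetX(double[] pixels, double[][] hiddenWeights, double[] hiddenBias,
                      double[] outputWeights, double outputBias, int cameraWidthPx)
{
    double sum = outputBias;
    for (int h = 0; h < hiddenWeights.Length; h++)
    {
        // Weighted sum of every pixel feeding this hidden neuron.
        double activation = hiddenBias[h];
        for (int i = 0; i < pixels.Length; i++) activation += hiddenWeights[h][i] * pixels[i];
        sum += outputWeights[h] * Math.Tanh(activation);
    }

    double fraction = 1.0 / (1.0 + Math.Exp(-sum)); // 0 = left edge, 1 = right edge
    return fraction * cameraWidthPx;                // scale back to a pixel column
}

// An untrained network with all-zero weights answers "dead centre" for any image.
double[][] zeroWeights = new double[5][];
for (int n = 0; n < zeroWeights.Length; n++) zeroWeights[n] = new double[200 * 80];
Console.WriteLine(PredictTargetX(new double[200 * 80], zeroWeights, new double[5], new double[5], 0.0, 200)); // 100
```

The scaling at the end is the inverse of dividing the training target by the camera width, which is why a single 0..1 output can be read as a pixel position.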

All sounds simple – right? Yes, but we haven’t explained how I trained it…

Creating Training Data

It’ll be no surprise that we need to associate a particular image with a meaningful course correction output.

We first need a ship renderer that draws the ship at any size with a transparent background; well, 3 such renderers in my case:

- A BasicRenderer() draws the ship as a filled polygon – the points for which were obtained using MSPaint cursor positions at specific points on the ship.

- A RealisticRenderer() draws the ship by taking the Makarov photo and turning it to greyscale (it’s night time).

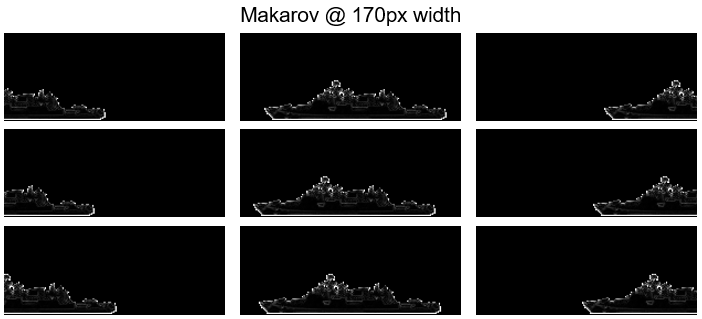

- An EdgesOfRealisticRenderer() draws the ship edges, by applying an “Edge Filter” (Roberts Filter) because we want to train the AI to learn the edges. Why? Because it removes the noise (filled-in regions) and focuses on shape.
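To show what an edge filter of this kind does, here is a minimal sketch of the textbook Roberts cross operator applied to a greyscale image held in a plain 2D array. It is an illustration under my own assumptions (array layout, clamping to 255, the function name RobertsCross) and not the filter code from the project.

```csharp
using System;

// Roberts cross edge magnitude for a greyscale image stored as [row, column], values 0..255.
// The last row and column stay 0 because the 2x2 kernel needs a lower-right neighbour.
double[,] RobertsCross(double[,] grey)
{
    int rows = grey.GetLength(0), cols = grey.GetLength(1);
    double[,] edges = new double[rows, cols];

    for (int y = 0; y < rows - 1; y++)
    {
        for (int x = 0; x < cols - 1; x++)
        {
            double gx = grey[y, x] - grey[y + 1, x + 1];   // difference along one diagonal
            double gy = grey[y, x + 1] - grey[y + 1, x];   // difference along the other diagonal
            edges[y, x] = Math.Min(255.0, Math.Sqrt(gx * gx + gy * gy));
        }
    }

    return edges;
}

// A 4x4 image: dark left half, bright right half.
double[,] image =
{
    { 0, 0, 255, 255 },
    { 0, 0, 255, 255 },
    { 0, 0, 255, 255 },
    { 0, 0, 255, 255 },
};

double[,] result = RobertsCross(image);
Console.WriteLine(result[0, 0]); // 0: flat dark region
Console.WriteLine(result[0, 1]); // 255: the dark-to-bright boundary
Console.WriteLine(result[0, 2]); // 0: flat bright region
```

Filled-in regions, whether dark or bright, come out as 0, and only the boundary survives, which is the "removes the noise and focuses on shape" effect described above.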

The display of the ship is done using the realistic renderer.

Image recognition is done from that “realistic render”, despite being trained on edges! Cool, eh? Sort of: the code applies an edge filter to the camera image first (the Edges() call), so the AI is actually seeing what it was trained on. However, it only has 5 hidden neurons to achieve this. That in itself is pretty amazing.

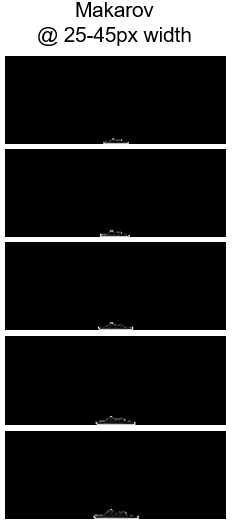

Training data is simply an exercise in creating one image per position of the Makarov, for each size of the Makarov.

    /// <summary>
    /// Create training data mapping image to position.
    /// </summary>
    /// <returns></returns>
    private static List<TrainingDataItemImageToTarget> CreateTrainingData()
    {
        Debug.WriteLine("CREATING TRAINING DATA");

        List<TrainingDataItemImageToTarget> trainingData = new();

        for (float sizeOfBoat = 25; sizeOfBoat < 175; sizeOfBoat += 5)
        {
            for (float xCentreOfBoat = 0; xCentreOfBoat < Camera.CameraWidthPX; xCentreOfBoat++)
            {
                Bitmap boatImage = new(Camera.CameraWidthPX, 80);

                using Graphics graphics = Graphics.FromImage(boatImage);
                graphics.Clear(Color.Black);

                ShipRenderer.EdgesOfRealisticRenderer(graphics, xCentreOfBoat, sizeOfBoat);

                //boatImage.Save($@"c:\temp\sink\images\boat_{sizeOfBoat}_{xCentreOfBoat}.png",ImageFormat.Png);

                trainingData.Add(new(CameraPixelsToDoubleArray(boatImage), xCentreOfBoat, (int)sizeOfBoat));
            }
        }

        Debug.WriteLine("CREATING TRAINING DATA COMPLETE");

        return trainingData;
    }

To picture the training data, uncomment the //boatImage.Save(), and run it without the model. It will then write all 6000 training images to disk. (Remember to create a c:\temp\sink\images\ folder, before doing so.)
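The figure of 6000 follows directly from the loop bounds in CreateTrainingData(). A small sketch to confirm the arithmetic, and to estimate how much memory the pixel arrays need if every item keeps a full array of doubles (the variable names here are mine):

```csharp
using System;

int cameraWidthPx = 200;   // camera width used throughout the post
int cameraHeightPx = 80;   // camera height used throughout the post

// Same bounds as the outer loop: sizes 25, 30, ... 170.
int sizes = 0;
for (float sizeOfBoat = 25; sizeOfBoat < 175; sizeOfBoat += 5) sizes++;

int totalImages = sizes * cameraWidthPx;  // one image per x position, per size
long approxBytes = (long)totalImages * cameraWidthPx * cameraHeightPx * sizeof(double);

Console.WriteLine($"{sizes} sizes x {cameraWidthPx} positions = {totalImages} images");
Console.WriteLine($"approx {approxBytes / 1_000_000} MB of pixel data as doubles");
// prints:
// 30 sizes x 200 positions = 6000 images
// approx 768 MB of pixel data as doubles
```

That memory figure is one more reason to keep the camera resolution low.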

Here’s an example of what the AI is training on. Left/right is as it goes out of camera view.

And in each size…

With images created, the training becomes a simple back-propagation exercise:

    /// <summary>
    /// Training the AI.
    /// </summary>
    /// <param name="training"></param>
    private void TraingNeuralNetworkUsingTrainingData(List<TrainingDataItemImageToTarget> training)
    {
        Debug.WriteLine("TRAINING STARTED");

        int epoch = 0;
        bool trained;

        do
        {
            ++epoch;

            // push ALL the training images through back propagation
            foreach (TrainingDataItemImageToTarget trainingDataItem in training)
            {
                neuralNetworkMappingPixelsToCentreOfShip.BackPropagate(trainingDataItem.pixelsInAIinputForm, new double[] { trainingDataItem.X / (float)Camera.CameraWidthPX });
            }

            trained = true;

            // check every image returns an accurate enough response (within 5 pixels for large, 2 for small)
            foreach (TrainingDataItemImageToTarget trainingDataItem in training)
            {
                double aiTargetPosition = AIMapCameraImageIntoTargetX(trainingDataItem.pixelsInAIinputForm);
                double deviationXofAItoExpected = Math.Abs(aiTargetPosition - trainingDataItem.X);

                if (deviationXofAItoExpected > (trainingDataItem.Size < 40 ? 2 : 5))
                {
                    trained = false;
                    break;
                }
            }

            Debug.WriteLine(epoch);
        } while (!trained);

        Debug.WriteLine($"TRAINING COMPLETE. EPOCH {epoch}");
    }

That leaves us with the question of the network – it has 200×80 inputs (the size the camera “sees”), 5 hidden neurons, and 1 output (the position the boat needs to head towards: 0=left, 1=right).

    readonly NeuralNetwork neuralNetworkMappingPixelsToCentreOfShip = new(new int[] { 200 * 80, 5, 1 });

The rationale for 200×80 is obvious as should be the output. So why pick “5” hidden neurons, why not 1, 10 or any other number?

A lower number of neurons didn’t seem to work, whereas 5 did. Given 200×80 is 16,000 inputs, the fewer neurons in the middle the better: each of the 16,000 inputs connects to every hidden neuron, so each extra hidden neuron adds roughly another 16,000 weights and unsurprisingly it takes longer to train.
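To put numbers on that, here is a small sketch that counts the trainable values in a fully connected network from its layer sizes. CountParameters is a hypothetical helper, and whether the project's NeuralNetwork class uses biases is an assumption I have left switchable.

```csharp
using System;

// Counts weights (and optionally one bias per non-input neuron) for a
// fully connected network described by its layer sizes.
long CountParameters(int[] layerSizes, bool includeBiases)
{
    long total = 0;
    for (int layer = 1; layer < layerSizes.Length; layer++)
    {
        total += (long)layerSizes[layer - 1] * layerSizes[layer]; // weights between adjacent layers
        if (includeBiases) total += layerSizes[layer];            // ASSUMPTION: one bias per neuron
    }
    return total;
}

Console.WriteLine(CountParameters(new[] { 200 * 80, 5, 1 }, includeBiases: false));  // 80005
Console.WriteLine(CountParameters(new[] { 200 * 80, 10, 1 }, includeBiases: false)); // 160010
```

Doubling the hidden neurons from 5 to 10 doubles the weights that every one of the 6000 training images has to update on every epoch.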

Closing comments

I had a lot of fun with this post. The biggest challenges were building out the “environment”, and strangely not the AI.

I wasn’t sure if it was possible when I started, but it demonstrates how a simple neural network, trained on 6000 images, can return the correct corresponding position of the centre of the ship.

There are still questions that I don’t have time to test right now:

- Can it be trained to recognise the boat when it isn’t perpendicular? I would guess it’s harder than might be obvious – identification would require a comprehensive model from all angles.

- Can it be trained to ignore the waves? It is using just the ship on a 200×80 image, not the sea beneath it.

- If you look at the real footage the horizon isn’t always level, the ship leans left and right. Can such a simple network cope?

- How is the neural network working? The images vary widely.

I hope you enjoyed this post; if so, please comment below.

The source code is now uploaded to GitHub.