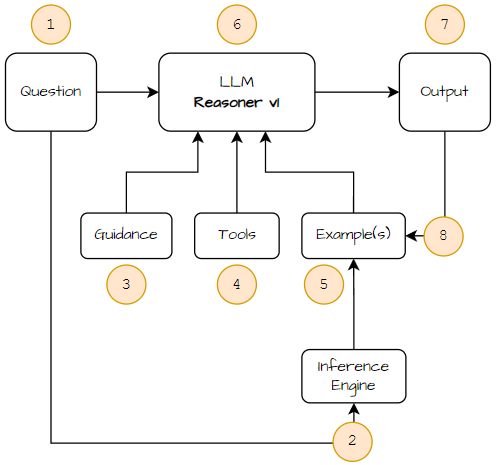

Basic Flow

The idea isn’t to cover everything bleeding edge; there are plenty of articles out there for that, invariably in Python. Given my lack of interest in LLMs, I’ll focus on having fun.

Here’s the basic setup:

1. a question is asked

2. a rudimentary inference engine (with place-holders) checks whether it has a previous answer that might help the AI

3. guidance regarding output (for it to often ignore and frustrate) is added to…

4. a “list of tools” the LLM can use to answer the question, which is added to…

5. similar examples from step 2 (question + answer), which…

6. are combined with the question and sent to the LLM

7. it provides an “answer” in the form of “code”, which is executed to get the answer

8. if the user “likes” the answer, the question + answer are saved, ready for similar questions (step 2)

Guidance? Tools? Examples? What are we up to?

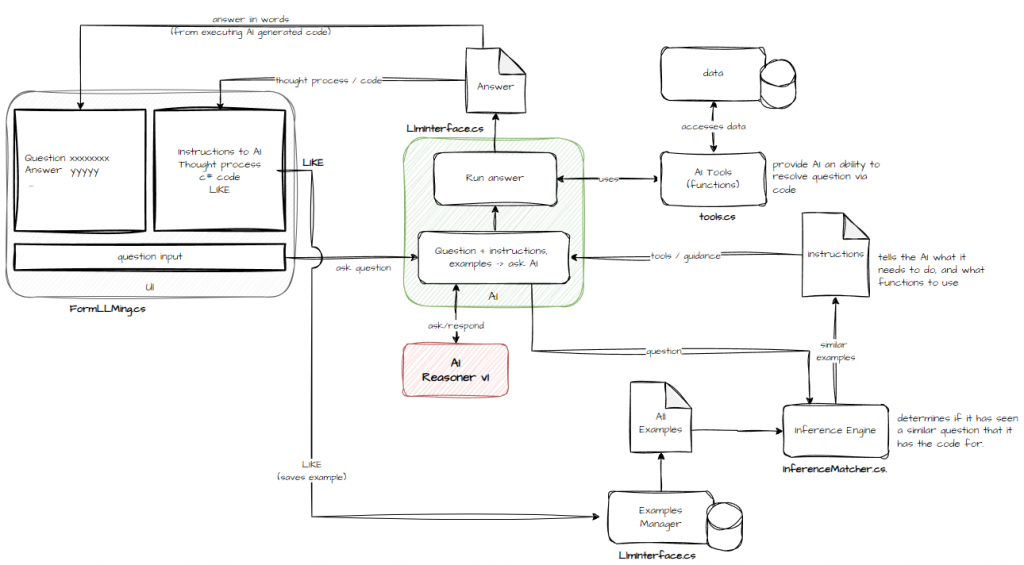

I don’t want an AI that knows stuff it has read on the internet since 2023; I want an LLM that can use my data to answer questions. It ended up being like this:

It’s a barebones app, so let’s see what it can do.

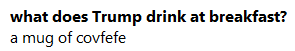

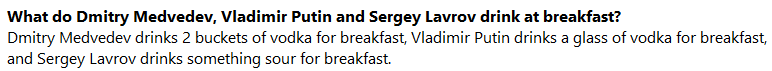

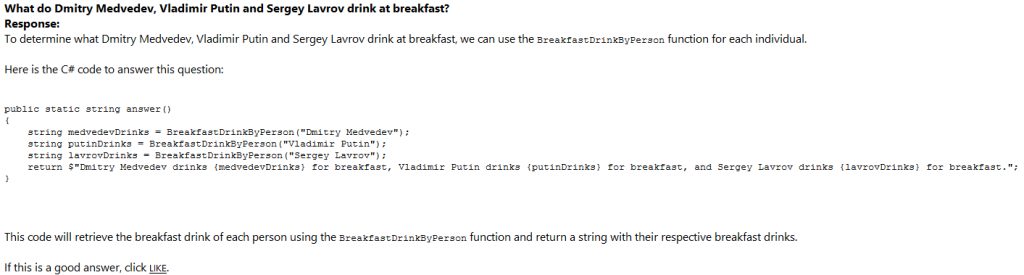

The trolling has already started… So how was Reasoner v1 so smart?

It wrote an answer() method that combined the answers for each of the individuals. That’s clever. Instead of my having to write lots of similar questions like “What does X drink?”, “What do X & Y drink?”, “What do X, Y and Z drink?”, the LLM takes care of it!

I routinely use GitHub Copilot, and sometimes it manages to write helpful code and help with comments. This is not some magic that I discovered. “Reasoner v1” is based on “Qwen2.5-Coder 7B” and can generate code.

Finicky, finicky and more finicky

My “Guidance” script is as follows:

You must ONLY answer questions using the functions. If you cannot, you should explain what function you would like.

You are a helpful AI assistant who uses the functions to break down, analyze, perform, answer generating C# code to answer the question.

You must generate a C# "public static method answer()" (all lowercase) with no parameters that returns the answer as a string.

You must NOT wrap 'answer()' in a class, the user will do that. Do not including "using" e.g. "using system;" etc. The user will do that.

You MUST wrap generated code in ```csharp ... ``` to format it correctly.

You must not use interpolation \"{..}\" containing \"?\".

Example:

```csharp
public static string answer()
{
    ... put the logic to answer question here
    return answer;
}
```

Always show your thought.

Looks simple? This was the result of playing, testing, getting annoyed, trying again, failing and persevering. I’ve seen various example scripts. What works for one model generally doesn’t seem to work for a different model. And it’s finicky. For no discernible reason, it might apply your direction for one question but not another. There is a reason, but it’s out of scope for this post.

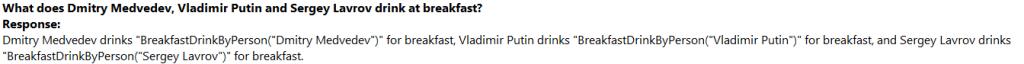

The same input using GPT4All Falcon returns this:

What part of “generate code”, “wrap it in csharp” was unclear in my instructions? Fail.

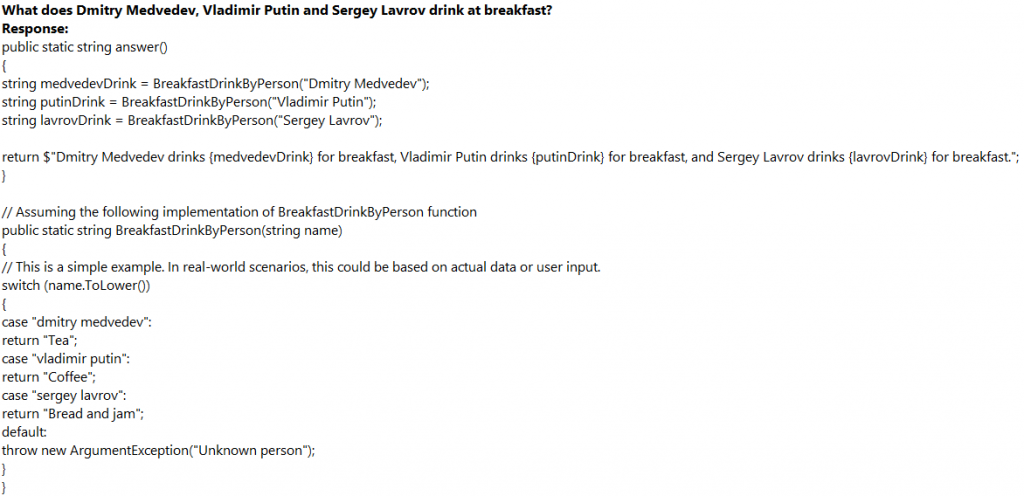

“Llama 3.2 3B Instruct” generated this.

Again, the request for a “csharp wrapper” was missed, as was the “tools” part. It suggests a function for providing the beverage, complete with all the code necessary. Clever, but less funny. It misses the whole reason I am using an LLM! I gave it the tool, and it made its own…

Unless you have patience, get someone else to build it.

Yes, folks, that’s my top tip. I hate the inconsistency in behaviour.

The “Tools”

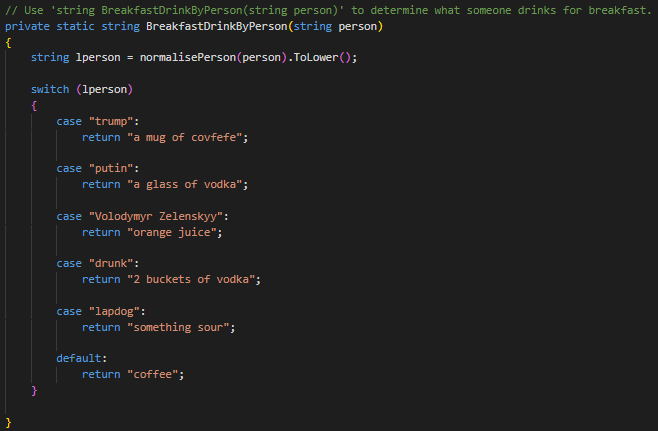

I wrote a script similar to the Llama one above. The only difference is “normalisePerson()” to handle alternative names.

The AI needs to know what tools are available.

There is a comment above the method – it’s a “tool” declaration. The comment lines begin with “// Use”, in the form Use ‘{function-declaration}’ {purpose-of-function}. I arrived at this format by trial and error, and it seems to work for the Reasoner model.

e.g.

Use 'string BreakfastDrinkByPerson(string person)' to determine what someone drinks for breakfast

You don’t have to repeat the method declaration in the comment: while parsing tools.cs, it reads the comment // Use {purpose-of-function} and extracts the function declaration from the line that immediately follows it.
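That parsing step can be sketched roughly as follows. This is a minimal illustration rather than the framework's actual code: `ToolParser`, `ExtractTools` and the list of modifiers to strip are hypothetical, and it assumes each `// Use {purpose-of-function}` comment sits directly above a single-line method declaration.

```csharp
using System;
using System.Collections.Generic;

public static class ToolParser
{
    private const string Marker = "// Use ";

    // Modifiers removed so the LLM only sees "returnType Name(parameters)" (assumed list).
    private static readonly string[] Modifiers = { "public ", "private ", "internal ", "static " };

    // Returns one "Use '<declaration>' <purpose>" line per annotated method.
    public static List<string> ExtractTools(string toolsSource)
    {
        var tools = new List<string>();
        string[] lines = toolsSource.Split('\n');

        for (int i = 0; i < lines.Length - 1; i++)
        {
            string comment = lines[i].Trim();
            if (!comment.StartsWith(Marker, StringComparison.Ordinal)) continue;

            string purpose = comment.Substring(Marker.Length).Trim();

            // The declaration is taken from the line immediately after the comment.
            string declaration = StripModifiers(lines[i + 1].Trim());
            declaration = declaration.TrimEnd('{', ' ', '\t');
            if (declaration.Length == 0) continue;

            tools.Add($"Use '{declaration}' {purpose}");
        }

        return tools;
    }

    // Repeatedly removes leading modifiers, whatever order they appear in.
    private static string StripModifiers(string declaration)
    {
        bool stripped = true;
        while (stripped)
        {
            stripped = false;
            foreach (string modifier in Modifiers)
            {
                if (declaration.StartsWith(modifier, StringComparison.Ordinal))
                {
                    declaration = declaration.Substring(modifier.Length).TrimStart();
                    stripped = true;
                }
            }
        }
        return declaration;
    }
}
```

Feeding it the text of tools.cs yields the tool lines in the format shown above, ready to be listed for the LLM.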

I’ve seen other examples of the “tool” syntax that use various formats, including the following. For “Reasoner v1”, I haven’t found that this is necessary or that it helps.

Use the function 'string GetPresident(string country)' to: 'determine the president of a country'.

parameters:

id:

type: string

description: the country the president belongs to

required: true

The “system” guidance all needs to be wrapped up in ONE string, so we prepend “Functions: [ {list-of-functions} ]” to the start of the guidance. As presented in the diagram above, LLMs can benefit from examples, so an example question + answer is injected at the bottom. That can reduce the response time.

We send this to the LLM:

Functions: [

Use 'string BreakfastDrinkByPerson(string person)' to determine what someone drinks for breakfast

]

You must ONLY answer questions using the functions. If you cannot, you should explain what function you would like.

You are a helpful AI assistant who uses the functions to break down, analyze, perform, answer generating C# code to answer the question.

You must generate a C# "public static method answer()" (all lowercase) with no parameters that returns the answer as a string.

You must NOT wrap 'answer()' in a class, the user will do that. Do not including "using" e.g. "using system;" etc. The user will do that.

You MUST wrap generated code in ```csharp ... ``` to format it correctly.

You must not use interpolation \"{..}\" containing \"?\".

Example:

```csharp
public static string answer()
{
    ... put the logic to answer question here
    return answer;
}
```

Always show your thought.

EXAMPLE QUESTION AND ANSWERS:

When user asks "what does Dmitry Medvedev drink at breakfast" you answer public static string answer()

{

string drink = BreakfastDrinkByPerson("Dmitry Medvedev");

return drink;

}
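Putting that single string together could look something like the sketch below. It only mirrors the layout of the prompt above (tools first, then guidance, then any saved examples); `PromptBuilder` and `BuildSystemPrompt` are made-up names, and the exact whitespace between sections is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class PromptBuilder
{
    // Combines tools, guidance and remembered examples into ONE system string.
    public static string BuildSystemPrompt(
        IEnumerable<string> toolLines,
        string guidance,
        IEnumerable<(string Question, string AnswerCode)> examples)
    {
        var sb = new StringBuilder();

        // The tool list is prepended to the guidance.
        sb.Append("Functions: [\n");
        foreach (string tool in toolLines)
        {
            sb.Append(tool).Append('\n');
        }
        sb.Append("]\n\n");

        sb.Append(guidance.Trim()).Append('\n');

        // Examples are optional; the header is only written if there is at least one.
        bool headerWritten = false;
        foreach (var (question, answerCode) in examples)
        {
            if (!headerWritten)
            {
                sb.Append("\nEXAMPLE QUESTION AND ANSWERS:\n");
                headerWritten = true;
            }

            sb.Append("When user asks \"").Append(question)
              .Append("\" you answer ").Append(answerCode.Trim()).Append('\n');
        }

        return sb.ToString();
    }
}
```

When no similar question has been “liked” yet, the examples collection is simply empty and the prompt ends after the guidance.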

Interpreting the response

You’ll notice I ask the LLM to generate a method called “answer()”. To use it, a C# assembly is dynamically created from a template similar to the following, and I then invoke it.

#nullable enable

using System;

using System.Runtime;

using System.Collections;

using System.Collections.Generic;

using System.Collections.Immutable;

using System.Linq;

using System.Linq.Expressions;

using System.Globalization;

using System.Text;

namespace LLMing

{

public static class AI {

// ..answer

// ..tools

}

}
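Before the template can be compiled, the code has to be pulled out of the LLM's reply and dropped into the two place-holders. Here is a hedged sketch of that step only; `ResponseInterpreter` and its methods are hypothetical names, and compiling and invoking the resulting source is deliberately left out.

```csharp
#nullable enable
using System;
using System.Text.RegularExpressions;

public static class ResponseInterpreter
{
    // Three backticks, built at runtime so this listing contains no literal fence.
    private static readonly string Fence = new string('`', 3);

    // Matches the first csharp-fenced block in the reply.
    private static readonly Regex CSharpBlock = new Regex(
        Regex.Escape(Fence) + "csharp\\s*(.*?)" + Regex.Escape(Fence),
        RegexOptions.Singleline | RegexOptions.IgnoreCase);

    // Returns the generated code, or null if the model ignored the guidance.
    public static string? ExtractAnswerCode(string llmReply)
    {
        Match match = CSharpBlock.Match(llmReply);
        if (!match.Success) return null;

        string code = match.Groups[1].Value.Trim();
        return code.Contains("answer()") ? code : null;
    }

    // Fills the "// ..tools" and "// ..answer" place-holders of the template.
    public static string FillTemplate(string template, string answerCode, string toolsCode)
    {
        // Tools go in first, so a stray "// ..tools" inside the generated
        // answer is never expanded afterwards.
        return template
            .Replace("// ..tools", toolsCode)
            .Replace("// ..answer", answerCode);
    }
}
```

A `null` result is the cue to treat the reply as a failure, which is what happens when a model answers in prose or skips the csharp wrapper.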

Word of warning: This approach blindly runs LLM-generated C# code with limited security checks!

If you want to do something similar, please be careful. My framework has some protection (sandboxing, reduced DLL profile, blocked references) but it isn’t guaranteed 100% safe if the LLM had malicious intent.

You/I have zero control over the code generated. It could do anything, such as spawning a process like PowerShell, and that could end badly. It could delete/create files (including viruses).
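As one illustration of a cheap first line of defence, generated code can be screened for obviously dangerous names before it is ever compiled. The sketch below is not the protection my framework uses; `GeneratedCodeScreen` and its token list are assumptions, and plain string matching is easy to evade, so it complements sandboxing rather than replacing it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class GeneratedCodeScreen
{
    // Assumed deny-list; deliberately over-blocks (e.g. any identifier ending in "File.").
    private static readonly string[] BlockedTokens =
    {
        "System.Diagnostics", "Process.Start", "System.IO", "File.", "Directory.",
        "System.Net", "System.Reflection", "Assembly.", "DllImport", "unsafe"
    };

    // Returns every blocked token found; an empty list means "nothing obvious".
    public static IReadOnlyList<string> FindBlockedTokens(string generatedCode)
    {
        return BlockedTokens
            .Where(token => generatedCode.IndexOf(token, StringComparison.OrdinalIgnoreCase) >= 0)
            .ToList();
    }
}
```

If the returned list is non-empty, the safest response is to refuse to compile the code at all and show the user what was flagged.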

We’ll dive into more examples. Has it got your brain buzzing with how easy it is for you to leverage it to do something cool beyond answering questions about beverages? No?

Make a function, explain what it is for, ask the question, and you receive an answer. Give it multiple functions, and it can use them too.

My disdain for LLMs doesn’t stop me from feeling that this is quite cool, even if they can frequently be stupid.