In my previous blog post, I showed a neural network that takes 9 inputs, each 0 or 1, and drives a seven-segment display showing how many of them are 1. For a little more of a challenge, it was trained to return the individual segments to light.

This one is fundamentally different; it started as a thought experiment extending the last one.

Electronic circuits can count and store amounts, and have done so for decades. One approach uses JK flip-flops in a 4-bit binary synchronous up/down counter. Given that scientists think neurons function primarily via electrical signals (in addition to chemicals), why can brains not use a similar technique?

The fundamental question was: can I create the required components using just neural networks?

This post attempts to answer that. Before anybody comments – yes, I have heard of LSTMs and their successor, transformers.

As my “brain” framework isn’t ready to be published, I have stitched the neural circuits together in code. Conceptually the network can have them all tied together without additional code, but today the network I have used doesn’t have any interconnecting class joining individual “parts” of the “brain” together.

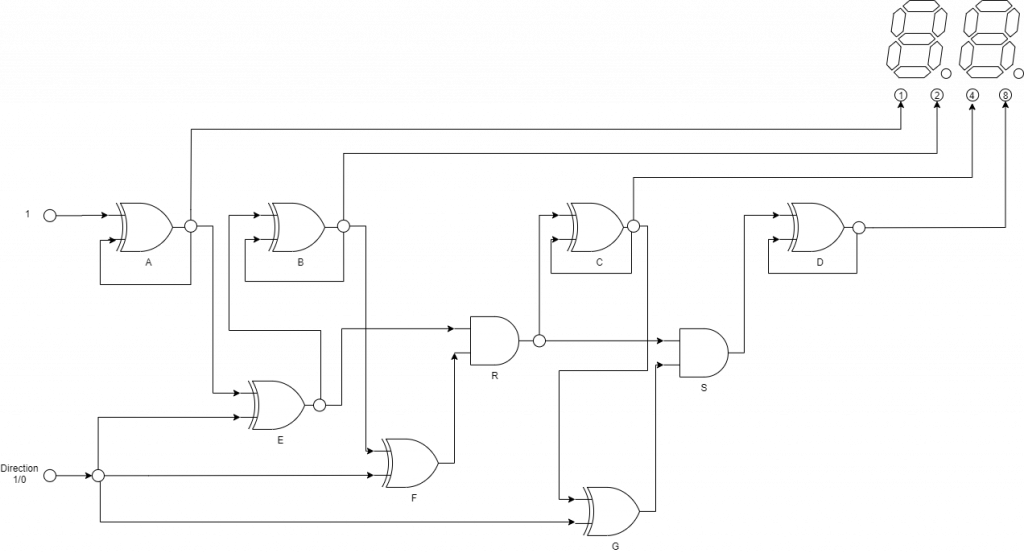

The circuit diagram (if I’ve drawn it correctly) shows the principles. I am not claiming to be a genius, and some might consider this cheating. To those who do: if the brain works on electricity, don’t you think the neurons might contain circuitry and connect like circuits? By the infinite monkey theorem, there is no reason why neurons cannot connect themselves to create such a setup. I am, however, unlikely to live long enough to see it happen at random via genetic mutation!

I created XOR perceptron networks for gates A-G, and AND perceptron networks for R and S. Making gates used to be challenging before back-propagation. Since then, there has been no fun in them…

Instead of one network, we have multiple interconnected networks, each specialised (XOR/AND). I would not be at all surprised if there are basic neural building blocks that are used repeatedly throughout a brain; we already know the brain is made up of multiple neural circuits, as science has mapped the purpose behind some of them.

The “design” for such a network would be encoded, as the DNA encodes the brain today. I also wonder (digressing slightly) whether the “junk” DNA in some way is part of the boot-strap code for the brain. Given we mostly have similar regions in all our brains despite being different people, there has to be a blueprint somewhere.

Below, we make “components” as per the labels above.

    internal Counter()
    {
        _ = new XOR(
            name: "A",
            inputs: new string[] { "JA", "QA" },
            outputs: new string[] { "QA" });

        _ = new XOR(
            name: "B",
            inputs: new string[] { "JB", "QB" },
            outputs: new string[] { "QB" });

        _ = new XOR(
            name: "C",
            inputs: new string[] { "JC", "QC" },
            outputs: new string[] { "QC" });

        _ = new XOR(
            name: "D",
            inputs: new string[] { "JD", "QD" },
            outputs: new string[] { "QD" });

        _ = new AND(
            name: "R",
            inputs: new string[] { "1", "2" },
            outputs: new string[] { "OUTPUT" });

        _ = new AND(
            name: "S",
            inputs: new string[] { "1", "2" },
            outputs: new string[] { "OUTPUT" });

        _ = new XOR(
            name: "E",
            inputs: new string[] { "QA", "DIRECTION" },
            outputs: new string[] { "OUTPUT" });

        _ = new XOR(
            name: "F",
            inputs: new string[] { "QB", "DIRECTION" },
            outputs: new string[] { "OUTPUT" });

        _ = new XOR(
            name: "G",
            inputs: new string[] { "QC", "DIRECTION" },
            outputs: new string[] { "OUTPUT" });
    }

When I get around to sharing my brain creator, we won’t need to do it in code. I’ve adapted my “usual” neural network code to enable referencing neurons by name. The order of the steps matters because each one depends on the previous: the output of one network feeds into the next.

    /// <summary>
    /// Updates our neural network.
    /// The effect is rippled down through each network.
    /// </summary>
    /// <param name="direction">Direction flag (0 or 1) fed into XOR gates E, F and G.</param>
    /// <returns>The counter value (0-15) after this step.</returns>
    internal int Feedback(int direction)
    {
        // A: J is held at 1, so this bit toggles on every call
        NeuralNetwork.SetValue("A:INPUT:JA", 1);
        NeuralNetwork.SetValue("A:INPUT:QA", NeuralNetwork.GetValue("A:OUTPUT:QA"));
        NeuralNetwork.networks["A"].FeedForward();

        // E: combines A's new output with the direction
        NeuralNetwork.SetValue("E:INPUT:QA", NeuralNetwork.GetValue("A:OUTPUT:QA"));
        NeuralNetwork.SetValue("E:INPUT:DIRECTION", direction);
        NeuralNetwork.networks["E"].FeedForward();

        // B
        NeuralNetwork.SetValue("B:INPUT:JB", NeuralNetwork.GetValue("E:OUTPUT:OUTPUT"));
        NeuralNetwork.SetValue("B:INPUT:QB", NeuralNetwork.GetValue("B:OUTPUT:QB"));
        NeuralNetwork.networks["B"].FeedForward();

        // F: combines B's new output with the direction
        NeuralNetwork.SetValue("F:INPUT:QB", NeuralNetwork.GetValue("B:OUTPUT:QB"));
        NeuralNetwork.SetValue("F:INPUT:DIRECTION", direction);
        NeuralNetwork.networks["F"].FeedForward();

        // R
        NeuralNetwork.SetValue("R:INPUT:1", NeuralNetwork.GetValue("E:OUTPUT:OUTPUT"));
        NeuralNetwork.SetValue("R:INPUT:2", NeuralNetwork.GetValue("F:OUTPUT:OUTPUT"));
        NeuralNetwork.networks["R"].FeedForward();

        // C
        NeuralNetwork.SetValue("C:INPUT:JC", NeuralNetwork.GetValue("R:OUTPUT:OUTPUT"));
        NeuralNetwork.SetValue("C:INPUT:QC", NeuralNetwork.GetValue("C:OUTPUT:QC"));
        NeuralNetwork.networks["C"].FeedForward();

        // G: combines C's new output with the direction
        NeuralNetwork.SetValue("G:INPUT:QC", NeuralNetwork.GetValue("C:OUTPUT:QC"));
        NeuralNetwork.SetValue("G:INPUT:DIRECTION", direction);
        NeuralNetwork.networks["G"].FeedForward();

        // S
        NeuralNetwork.SetValue("S:INPUT:1", NeuralNetwork.GetValue("R:OUTPUT:OUTPUT"));
        NeuralNetwork.SetValue("S:INPUT:2", NeuralNetwork.GetValue("G:OUTPUT:OUTPUT"));
        NeuralNetwork.networks["S"].FeedForward();

        // D
        NeuralNetwork.SetValue("D:INPUT:JD", NeuralNetwork.GetValue("S:OUTPUT:OUTPUT"));
        NeuralNetwork.SetValue("D:INPUT:QD", NeuralNetwork.GetValue("D:OUTPUT:QD"));
        NeuralNetwork.networks["D"].FeedForward();

        // Read the four bits, most significant (D) first
        double[] output = new double[] {
            NeuralNetwork.GetValue("D:OUTPUT:QD"),
            NeuralNetwork.GetValue("C:OUTPUT:QC"),
            NeuralNetwork.GetValue("B:OUTPUT:QB"),
            NeuralNetwork.GetValue("A:OUTPUT:QA")};

        lastValue = BinaryToInt(output);

        return lastValue;
    }

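SetValue() pushes a value into a named “input” neuron, and GetValue() reads the current value of a named neuron, here the output of a network after its last FeedForward(). Names follow the pattern network:INPUT/OUTPUT:neuron, e.g. "A:OUTPUT:QA".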
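
To make the wiring easier to follow, here is a minimal sketch that replaces each trained network with plain boolean logic and evaluates the gates in the same order as Feedback(). The helper names (`Xor`, `And`, `Step`) are hypothetical, the body of `BinaryToInt` is my guess at what such a helper might do, and starting every flip-flop at 0 is an assumption. In this sketch a direction of 1 counts up and 0 counts down; that polarity falls out of the plain-logic stand-ins and is not a claim about how the trained networks behave.

```csharp
using System;

// Plain boolean stand-ins for the trained XOR and AND networks (hypothetical helpers).
int Xor(int a, int b) => a ^ b;
int And(int a, int b) => a & b;

// Guess at a BinaryToInt helper: rounds each output to a bit, most significant first.
int BinaryToInt(double[] bits)
{
    int value = 0;
    foreach (double bit in bits)
        value = (value << 1) | (bit >= 0.5 ? 1 : 0);
    return value;
}

// Flip-flop outputs QA..QD, all starting at 0 (an assumption).
int qa = 0, qb = 0, qc = 0, qd = 0;

// One counter step, evaluating the gates in the same order as Feedback().
int Step(int direction)
{
    qa = Xor(1, qa);             // A: J held at 1, toggles every step
    int e = Xor(qa, direction);  // E
    qb = Xor(e, qb);             // B
    int f = Xor(qb, direction);  // F
    int r = And(e, f);           // R
    qc = Xor(r, qc);             // C
    int g = Xor(qc, direction);  // G
    int s = And(r, g);           // S
    qd = Xor(s, qd);             // D
    return BinaryToInt(new double[] { qd, qc, qb, qa });
}

// Three steps up, then one step down.
for (int i = 0; i < 3; i++) Console.Write(Step(1) + " ");
Console.WriteLine(Step(0)); // prints "1 2 3 2"
```

Because gates E, F and G read the flip-flop outputs after they have been updated, the direction input effectively chooses between "toggle the next bit on a carry" and "toggle the next bit on a borrow".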

The “direction” controls whether each call to Feedback() increments or decrements the counter. It’s invoked as follows. I have re-used my 7-segment display class to draw the digits. When the value reaches 15 or 0, we flip our increment flag (so it goes 0, 1, …, 15, 14, 13, …, 0, and repeats).

    counter.Feedback(increment ? 0 : 1);

    // display digit (0/1) representing 10s
    pictureBox7SegmentDisplayDigits10to15.Image?.Dispose();
    pictureBox7SegmentDisplayDigits10to15.Image = SevenSegmentDisplay.Output(counter.Value / 10);

    // display digit (0-9)
    pictureBox7SegmentDisplayDigits0to9.Image?.Dispose();
    pictureBox7SegmentDisplayDigits0to9.Image = SevenSegmentDisplay.Output(counter.Value % 10);

    // switch direction, otherwise the counter will wrap around.
    if (counter.Value == 15 || counter.Value == 0) increment = !increment;
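
The bounce between 0 and 15 and the split into two display digits can be tried without the UI or the networks. The sketch below uses a plain integer as a stand-in for the neural counter; the variable names and the 30-tick loop are assumptions made for illustration only.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the neural counter: a plain integer stepped up or down (hypothetical).
int value = 0;
bool increment = true;
var shown = new List<string>();

// 30 ticks is one full cycle: 15 steps up to 15, then 15 steps back down to 0.
for (int tick = 0; tick < 30; tick++)
{
    value += increment ? 1 : -1;

    int tens = value / 10;   // left-hand digit: 0 or 1
    int units = value % 10;  // right-hand digit: 0-9
    shown.Add($"{tens}{units}");

    // Flip direction at either end, otherwise the counter would wrap around.
    if (value == 15 || value == 0) increment = !increment;
}

Console.WriteLine(string.Join(" ", shown));
```

The printed line runs from 01 up to 15 and back down to 00, which is the pattern the two picture boxes display.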

The XOR and AND classes each have 2 input neurons, 2 hidden neurons and 1 output neuron, all using TANH. Training simply pushes through the 4 permutations of input, each with its desired output (1/0).
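
To see that a 2-2-1 TANH network is big enough for both gates, here is a small sketch with hand-picked weights instead of trained ones. The `Forward` function, the weight layout and the numbers themselves are all assumptions chosen for illustration; back-propagation would arrive at different values, and the author's classes may be structured differently.

```csharp
using System;

// Forward pass of a 2-2-1 network with tanh on the hidden and output neurons.
// Layout of w: hidden1 (w1, w2, bias), hidden2 (w1, w2, bias), output (w1, w2, bias).
double Forward(double[] w, double a, double b)
{
    double h1 = Math.Tanh(w[0] * a + w[1] * b + w[2]);
    double h2 = Math.Tanh(w[3] * a + w[4] * b + w[5]);
    return Math.Tanh(w[6] * h1 + w[7] * h2 + w[8]);
}

// Hand-picked weights (illustrative assumptions, not trained values).
// XOR: hidden1 behaves like OR, hidden2 like AND; the output fires when they disagree.
double[] xorWeights = { 10, 10, -5, 10, 10, -15, 5, -5, 0 };
// AND: both hidden neurons behave like AND; the output bias lifts "false" from -1 to about 0.
double[] andWeights = { 10, 10, -15, 10, 10, -15, 5, 5, 10 };

// Walk the 4 input permutations and round each output to 0 or 1.
foreach (var (a, b) in new[] { (0, 0), (0, 1), (1, 0), (1, 1) })
{
    int xorOut = (int)Math.Round(Forward(xorWeights, a, b));
    int andOut = (int)Math.Round(Forward(andWeights, a, b));
    Console.WriteLine($"{a} {b} -> XOR {xorOut}, AND {andOut}");
}
```

The raw outputs are only close to 0 or 1, never exactly equal, so anything consuming them (such as a binary-to-integer conversion) needs to round or threshold.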

What one gains from this experiment is still TBD. Brains have short-term memory: if someone shouts out 2 numbers and asks you to add them, it wouldn’t work without some form of memory. Forty-five neurons (9 networks of 5 neurons each) seems a lot just to increment and decrement, but each extra bit needs only 3 more networks (one flip-flop XOR, one direction XOR and one AND, so 15 neurons) and doubles the range the counter can cover. Borrowing from patterns used in electronics, one can make neurons achieve multiplication, addition and more.

The code is available on GitHub.

Has it given you any new ideas? Please let me know in the comments.