After watching in awe as Falcon 9 landed upright, I thought it sounded like a fun challenge. I don’t yet have the big bucks to buy the real thing, so I settled for something more virtual.

I have nothing but respect for the Falcon 9 engineers, and for the guy whose DIY version I watched recently.

The Goal

Have ML learn to rotate a rocket that starts horizontal into a vertical attitude, use thrusters to reach its designated landing point, and then land gently without running out of fuel. Let’s do it in style!

If you’re too excited to wait, as always the code is on GitHub.

De-orbit rotate

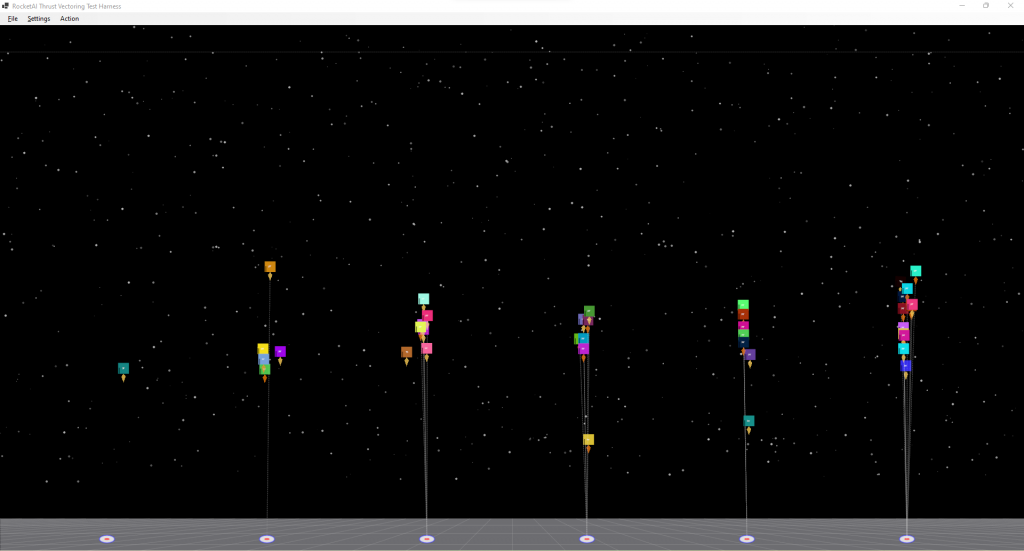

They are all trained to prefer being upright, so when placed horizontally they right themselves using thrust vectoring. You’ll notice the size of the flames as they arrest their motion. Some drop quicker than others. They turn into ghost blocks if they violate the rules (too much lateral speed, too much vertical speed, going upwards).

In their early generations they perform poorly (the image above is after some training): none will land at that level of training, and some even choose to go into orbit. Generations later they settle on a neuron configuration that enables the rockets to lower themselves gently, eventually landing, and generations after that, landing on target.

Here the rockets are beginning to line up neatly. The dotted lines from target to rocket are shown while a rocket is above a certain altitude, to indicate where it is supposed to land.

Below is their slow descent, with not-quite-perfect horizontal accuracy, but at least it’s on the actual target. The “X” indicates a rocket landed safely without killing the occupants. I might one day make the ones that break the rules explode. Generations later they learn to perfect their positioning.

Disclaimer: The Laws of Physics

This is a simulation to challenge the ML; it isn’t the real world. It’s two-dimensional for a start (ignoring time), and unless I’m mistaken it’s therefore lacking at least one dimension.

Despite a further education in Physics plus Pure & Applied Mathematics, I do not claim that it uses accurate physics; it was a “few” years ago. Unless you are working on a real rocket, it’s not important. This is about proving we can teach it to do the task in the simulated world.

If you wish to perfect the physics model, please do so, and post a comment. I’d be happy to post your experience and give you credit.

Drawing Rockets and The World

You’re here for the AI/ML, but if you want to understand how we animate and draw the rocket, look at this post.

Configuring the neural network (Perceptron FeedForward, Genetic Mutation)

Inputs: (7)

- lateral acceleration

- vertical acceleration

- angle of rocket

- lateral velocity

- vertical velocity

- altitude

- landing zone offset indicator

Hidden layer: (7)

- 7 neurons; 1 per input

Outputs: (2)

- burn force (how much thrust to fire)

- angle of thrust gimbal (min/max: +/-45 degrees)
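As a rough illustration of that 7-7-2 layout, here is a minimal feed-forward pass in C#. It is a sketch, not the app’s NeuralNetwork class: the tanh activation, the bias terms, the initial weight range, and the mapping of the two outputs onto a 0..1 burn and a ±45° gimbal are all my assumptions, and the TinyFeedForward name is hypothetical.

```csharp
using System;

// Hypothetical 7-7-2 perceptron; not the app's actual NeuralNetwork class.
public sealed class TinyFeedForward
{
    private readonly double[,] _w1 = new double[7, 7]; // input -> hidden weights
    private readonly double[] _b1 = new double[7];     // hidden biases
    private readonly double[,] _w2 = new double[2, 7]; // hidden -> output weights
    private readonly double[] _b2 = new double[2];     // output biases

    public TinyFeedForward(Random rng)
    {
        // Random initial weightings in -0.5..0.5 (range assumed).
        for (int h = 0; h < 7; h++)
        {
            _b1[h] = rng.NextDouble() - 0.5;
            for (int i = 0; i < 7; i++) _w1[h, i] = rng.NextDouble() - 0.5;
        }
        for (int o = 0; o < 2; o++)
        {
            _b2[o] = rng.NextDouble() - 0.5;
            for (int h = 0; h < 7; h++) _w2[o, h] = rng.NextDouble() - 0.5;
        }
    }

    // Returns { burn force 0..1, gimbal angle -45..+45 degrees }.
    public double[] FeedForward(double[] inputs)
    {
        if (inputs.Length != 7) throw new ArgumentException("expected 7 inputs", nameof(inputs));

        var hidden = new double[7];
        for (int h = 0; h < 7; h++)
        {
            double sum = _b1[h];
            for (int i = 0; i < 7; i++) sum += _w1[h, i] * inputs[i];
            hidden[h] = Math.Tanh(sum); // squashes to -1..1
        }

        var raw = new double[2];
        for (int o = 0; o < 2; o++)
        {
            double sum = _b2[o];
            for (int h = 0; h < 7; h++) sum += _w2[o, h] * hidden[h];
            raw[o] = Math.Tanh(sum);
        }

        double burn = (raw[0] + 1) / 2; // -1..1 -> 0..1
        double gimbal = raw[1] * 45;    // -1..1 -> -45..+45 degrees
        return new[] { burn, gimbal };
    }
}
```

In a genetic-mutation setup like this one, nothing adjusts these weights by gradient descent; they are only ever randomised, copied and mutated between generations.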

Using the neural network

Given the initial random weightings in the network, the first generation is very unlikely to do anything except make you laugh. So the app starts in “quiet” mode (toggle it using the “Q” key) for the first 100 generations. Even after 100, it’s unlikely to be anywhere close to landing; sometimes it takes 200+ generations (which is seconds on my computer).

If you want to zoom ahead with training, press Q. As the rockets get better, the generation count will climb more slowly; this is a sign they are surviving longer before being discarded. Press Q again to get the visuals back. Repeat as necessary.

Occasionally the initial weightings are so poor that the rockets never seem to land. In that event, re-run the app.

Using virtual “sensors”, the rocket decides how hard to fire the thruster and at what angle, thereby lining up with the landing target and slowing its descent to avoid overspeed. What we don’t tell the ML is how much fuel remains, even though the rocket gets lighter as it burns fuel.
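To make the “gets lighter as it burns fuel” point concrete, here is a minimal 2D Euler integration step. It is a hypothetical sketch, not the app’s physics (which lives in the drawing post): the masses, maximum thrust, fuel-burn rate and 9.81 m/s² gravity are assumed values, 0° means pointing up with positive angles tilting right, and it ignores the torque a gimballed thruster would apply to the rocket.

```csharp
using System;

// Hypothetical rocket state; field names are illustrative only.
public sealed class RocketState
{
    public double X, Altitude;                       // position (m)
    public double LateralVelocity, VerticalVelocity; // m/s, up is positive
    public double DryMass = 1000, Fuel = 500;        // kg (assumed)
    public double AngleDegrees;                      // 0 = pointing up

    public double Mass => DryMass + Fuel;
}

public static class Physics
{
    public const double Gravity = 9.81;             // m/s^2
    public const double MaxThrust = 30000;          // N, assumed
    public const double FuelPerNewtonSecond = 1e-4; // kg of fuel per N*s, assumed

    // burn: 0..1, gimbalDegrees: -45..+45 relative to the rocket axis.
    public static void Step(RocketState r, double burn, double gimbalDegrees, double dt)
    {
        double thrust = r.Fuel > 0 ? Math.Clamp(burn, 0.0, 1.0) * MaxThrust : 0;
        double direction = (r.AngleDegrees + gimbalDegrees) * Math.PI / 180;

        // a = F / m: the same thrust accelerates a lighter rocket harder.
        double ax = thrust * Math.Sin(direction) / r.Mass;
        double ay = thrust * Math.Cos(direction) / r.Mass - Gravity;

        r.LateralVelocity += ax * dt;
        r.VerticalVelocity += ay * dt;
        r.X += r.LateralVelocity * dt;
        r.Altitude += r.VerticalVelocity * dt;

        // Burning fuel reduces the mass used in the next step.
        r.Fuel = Math.Max(0, r.Fuel - thrust * dt * FuelPerNewtonSecond);
    }
}
```

Because acceleration is thrust divided by the current mass, the same burn command pushes harder as the tank empties, which is the drift the network has to cope with without being told the fuel level.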

This is where, as a designer of control systems, the important question is: what data would I need to do the job successfully myself? Before you shout it out, a viewing window at the bottom isn’t the right answer; the fire from the thruster tends to obscure your view and melt the glass. A cup half filled with coloured liquid (as an artificial horizon) sitting next to the controls would work after the de-orbit rotation, but by that point you’ve tipped the contents over your hopefully waterproof controls. I mean: think about what data is relevant to the task.

Velocity and acceleration are things humans believe they can sense reliably. Sure, being pushed back in your seat in a fast car, or having your face slammed sideways into a window, can be felt. But disorientation occurs, and numerous pilots have died from incorrect actions taken because of a lack of visual cues.

I don’t think landing rockets vertically is a job for a human without a computer doing most of the work – we just don’t react fast enough for the variable conditions (like a wind that can topple a tall rocket).

Building a control system for a rocket is possibly easier without ML. For example, if the rate of descent is too rapid, ensure the rotation is correct and fire the thruster. If you’re off course, rotate the gimbal to steer back towards the target and give it a small burst. Want to avoid going upwards? Reduce your thrust so the vertical speed changes to downwards, and be prepared to increase your thrust again to stay at the optimum speed.
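For comparison, those hand-written rules might look something like this as a non-ML controller. It is a sketch only: the thresholds and gains (a -2 m/s target descent rate, a ±2° tilt tolerance, a ±20 m “on course” window) and the gimbal sign convention are made up, and RuleBasedController is a hypothetical name, not code from the app.

```csharp
using System;

// Hypothetical rule-based lander; every threshold and gain is an assumption.
public static class RuleBasedController
{
    public const double TargetDescentRate = -2.0; // m/s (negative = down)

    // Returns (burn 0..1, gimbal -45..+45 degrees).
    public static (double Burn, double Gimbal) Decide(
        double angleDegrees, double verticalVelocity, double offsetToTarget, double currentBurn)
    {
        double burn = currentBurn;
        double gimbal = 0;

        // 1. Ensure the rotation is correct first: gimbal opposite to the tilt
        //    (sign convention assumed).
        if (Math.Abs(angleDegrees) > 2)
            gimbal = Math.Clamp(-angleDegrees * 3, -45.0, 45.0);
        // 2. Off course: gimbal towards the target side and give it a small burst.
        else if (Math.Abs(offsetToTarget) > 20)
        {
            gimbal = Math.Sign(offsetToTarget) * 10;
            burn += 0.05;
        }

        // 3. Descending too fast: more thrust. Going up: cut thrust.
        //    Descending slower than the target rate: ease off a little.
        if (verticalVelocity < TargetDescentRate) burn += 0.1;
        else if (verticalVelocity > 0) burn -= 0.2;
        else if (verticalVelocity > TargetDescentRate) burn -= 0.05;

        return (Math.Clamp(burn, 0.0, 1.0), gimbal);
    }
}
```

Tuning those magic numbers by hand is the job the genetic training does for the network’s weights.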

Alas, if I did that it wouldn’t be a neural network. So here are my “inputs”, designed to encourage the required behaviour from the rocket.

private double[] GetInputsToLandRocketSafely()
{
    // 0 degrees is UP, we want it facing UP. If we don't do this, the rockets tumble.
    // 0...4, representing +8 to -8 degrees (2 = upright)
    double controlFeedAngleOfRocket = 2 - anglePointingDegrees.Clamp(-8, 8) / 4;

    // inform it of the altitude: 2 at ground level, 1 at s_maxHeight
    double controlFeedHeight = 2 - (Altitude / RocketSettings.s_maxHeight);

    // only negative accelerations are fed through: 2...4
    double controlLateralAcceleration = 2 - lateralAcceleration.Clamp(-10, 0) / 5;
    double controlVerticalAcceleration = 2 - verticalAcceleration.Clamp(-6, 0) / 3;

    // 0...4, representing +30 to -30
    double controlLateralVelocity = 2 - lateralVelocity.Clamp(-30, 30) / 15;

    // 2 when falling at 3 or faster, about -0.67 when climbing at 1 or faster
    double controlVerticalVelocity = -2 * verticalVelocity.Clamp(-3, 1) / 3;

    // make it aim for the base: 1...3, or 0 when bases aren't fixed
    double controlMoveToBase = AISettings.s_fixedBases ? 2 - (targetBase - location.X).Clamp(-20, 20) / 20 : 0;

    double[] neuralNetworkInput = { controlLateralAcceleration,
                                    controlVerticalAcceleration,
                                    controlFeedAngleOfRocket,
                                    controlLateralVelocity,
                                    controlVerticalVelocity,
                                    controlFeedHeight,
                                    controlMoveToBase };

    return neuralNetworkInput;
}

Fitness

With genetic mutation, we need a “scoring” mechanism. I didn’t arrive at this on my first attempt; it took several iterations, and there may well be a better approach. If you come up with something, please let me know in the comments.

A larger (more positive) score indicates the rocket performed better.

If it landed safely (reached the floor at < 5 m/s), we give it a large score (to ensure it ranks higher than others that didn’t land), but we also give it bonus points based on how close it landed to the target landing spot. This encourages accuracy.

If it failed due to going upwards (which is not usually where the ground is found unless upside down), we punish it with the maximum fail value.

We then look for bad behaviour: killing or sickening the occupants, running out of fuel, etc. That too is punished with a max fail.

Assuming it didn’t fail, if it is near the ground we score it harshly for too much final-approach velocity and for an un-survivable g-force, and lastly knock off points for not being on target; after all, who wants to walk to the spaceport?

With “climate change” I probably ought to favour ones that use less fuel, feel free to add logic if that matters to you.

internal void UpdateFitness()
{
    if (HasLandedSafely)
    {
        AISettings.s_mutate50pct = false;

        // 0 degrees = top points, slower landing better
        NeuralNetwork.s_networks[id].Fitness = 1000000F + (10F + (float)verticalVelocity)
                                               - Math.Abs((float)anglePointingDegrees)
                                               + 20 - Math.Abs(targetBase - location.X);
        return;
    }

    // up it goes into space...
    if (Altitude > RocketSettings.s_maxHeight)
    {
        NeuralNetwork.s_networks[id].Fitness = -int.MaxValue; // upwards!
        return;
    }

    float x = Math.Abs((float)verticalVelocity);
    if (x > 50) x = 50;

    // Max fail for bad behaviour (verticalVelocity > -0.5 also covers going upwards).
    if (Math.Abs(lateralVelocity) > 20
        || verticalVelocity > -0.5
        || EliminatedBecauseOf == "offs"
        || EliminatedBecauseOf == "up"
        || EliminatedBecauseOf == "fuel"
        || RocketSettings.s_maxHeight - Altitude < 500)
    {
        NeuralNetwork.s_networks[id].Fitness = -int.MaxValue;
        return;
    }

    if (x > 0)
    {
        float altitudePoints = (float)RocketSettings.s_maxHeight - (float)Altitude;

        if (Altitude < 10)
        {
            // punish for too much gforce
            // punish for too much velocity
            // punish for being too far from target base
            NeuralNetwork.s_networks[id].Fitness = 1000000F / (TooMuchLateralGForce() ? 2 : 1) - 1000
                                                   + (10F + (float)verticalVelocity)
                                                   - Math.Abs((float)anglePointingDegrees)
                                                   - Math.Abs(targetBase - location.X) * 100;
        }
        else
        {
            // Altitude descended divided by descent speed (slower is better), halved when
            // falling faster than 20, plus a bonus for being near the target below 1000.
            NeuralNetwork.s_networks[id].Fitness = altitudePoints * 0.5F / x
                                                   * (verticalVelocity < -20 ? 0.5F : 1)
                                                   + (Altitude < 1000 ? 2000 - Math.Abs(targetBase - location.X) : 0);
        }
    }
    else
    {
        NeuralNetwork.s_networks[id].Fitness = -999;
    }
}
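Once every rocket in a generation has a fitness, the genetic side ranks them and breeds the next generation. The app’s exact scheme isn’t shown here (the s_mutate50pct flag hints at mutating half the population), so treat this as a hedged sketch of one common approach with hypothetical names: sort by fitness, keep the better half unchanged, and overwrite the worse half with mutated copies of the better half. The mutation chance and size are assumptions.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical genome: a flat array of network weights plus its score.
public sealed class Genome
{
    public double[] Weights = Array.Empty<double>();
    public float Fitness;
}

public static class Evolution
{
    // Keep the best half; replace the worst half with mutated clones of the best.
    public static void NextGeneration(List<Genome> population, Random rng,
                                      double mutationChance = 0.1, double mutationSize = 0.5)
    {
        population.Sort((a, b) => b.Fitness.CompareTo(a.Fitness)); // best first

        int half = population.Count / 2;
        for (int i = 0; i < half; i++)
        {
            Genome parent = population[i];
            Genome child = population[population.Count - 1 - i];
            child.Weights = (double[])parent.Weights.Clone();

            // Nudge a random subset of weights by up to +/- mutationSize.
            for (int w = 0; w < child.Weights.Length; w++)
                if (rng.NextDouble() < mutationChance)
                    child.Weights[w] += (rng.NextDouble() * 2 - 1) * mutationSize;

            child.Fitness = 0; // must be re-scored next generation
        }
    }
}
```

Keeping the best half untouched (elitism) means a good network can never be lost to an unlucky mutation, which matters while landing rockets are still rare.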

Wind

I get enjoyment from testing how far one can challenge the neural network. Wind adds an extra dimension, but don’t be disappointed: although a simple left-to-right wind does push the rocket around, the network learns to counteract it. It’ll even still land on target; it just “learns” to compensate.

The sick bag would be in demand, and the fear level inside would be off the chart if you were unfortunate enough to be at the mercy of this rocket brain. But please give it a round of applause: it’s taking corrective action and keeping itself upright-ish.

You configure the wind in AISettings.cs as follows. An arrow appears on the left or right depending on whether the strength is positive (left-to-right) or negative (right-to-left). Enabling wind generally means more generations are needed before the rockets land successfully.

/// <summary>
/// When not zero, the wind blows the rockets. Sign indicates direction, -ve is right to left.
/// </summary>
internal static float s_maxWindStrength = 0.3f;

/// <summary>
/// Whether the wind is random or constant.
/// </summary>
internal static bool s_variableWindStrengthAndDirection = false;
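Those two settings could drive the wind along these lines. This is my own sketch, not the code behind AISettings.cs: it assumes the wind acts as an extra lateral acceleration each step, that “variable” means a bounded random walk within ±s_maxWindStrength, and WindModel is a hypothetical name.

```csharp
using System;

// Hypothetical wind model driven by the two AISettings values.
public sealed class WindModel
{
    private readonly float _maxWindStrength; // e.g. AISettings.s_maxWindStrength
    private readonly bool _variable;         // e.g. AISettings.s_variableWindStrengthAndDirection
    private readonly Random _rng;

    // Positive blows left-to-right, negative right-to-left.
    public float Current { get; private set; }

    public WindModel(float maxWindStrength, bool variable, Random rng)
    {
        _maxWindStrength = maxWindStrength;
        _variable = variable;
        _rng = rng;
        Current = maxWindStrength; // start at the configured value; constant wind stays there
    }

    // Called once per simulation step; returns the lateral acceleration to apply.
    public float Step()
    {
        if (_variable)
        {
            float limit = Math.Abs(_maxWindStrength);
            // Random walk of up to 10% of the limit per step, kept within +/- limit,
            // so both strength and direction can change over time.
            float delta = (float)(_rng.NextDouble() * 2 - 1) * 0.1f * limit;
            Current = Math.Clamp(Current + delta, -limit, limit);
        }
        return Current;
    }
}
```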

Afterthoughts

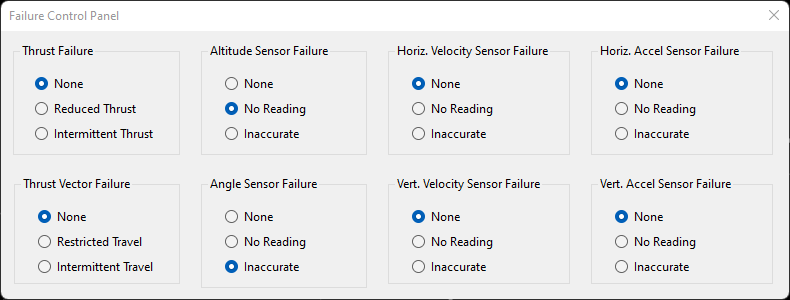

Control systems just do what they have been programmed to do. Most modern jetliners have auto-land, and they don’t peer out of the window; they just react to sensors. All very cool, except when systems fail: a master caution light illuminates, a loud warning sounds, the autopilot disengages (completely or partially), and the human gets the stressful job of preventing everyone from dying.

I mention that because it’s crucial to recognise that, although it destroys (virtually) lots of rockets and their occupants while learning, we’re actually only training for a “happy” path where all the sensors work. Pilots train for most eventualities, but having watched too much “Aircraft Investigation”, I know that sometimes they trained not enough, or not at all, for the ones that killed them. Even so, there have been incredibly skilful moments where they’ve used billions of neurons to improvise, e.g. the incredible Captain Chesley “Sully” Sullenberger after the loss of both engines shortly after take-off. Astronauts (I imagine) train far in excess of a regular pilot, yet not for every eventuality; Apollo 13, for example, depended on ingenious, creative solutions.

What we haven’t tried in all this is to train for all eventualities.

A question for the reader to ponder: how does one train an AI for all eventualities?

The ML gets good at solving the “equation” for the inputs. Loosely, training is fitting a function in multi-dimensional space, much like regression, except that here the fit is found by genetic mutation. But when inputs are lost (sensor failure), the equation changes dramatically.

For example, you might survive a landing with the rocket tilted, as long as the vertical velocity is very low; the compensation is a reduced rate of descent. The problem is that the burn direction depends on the direction the rocket is pointing. You could infer or approximate the angle over time (assuming the failure happens at high altitude) by staring at the lateral and vertical velocity long enough, but that requires more AI than a 7-neuron setup.
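The “stare at the velocities” idea can be made concrete. If the angle sensor fails but gravity and the commanded gimbal angle are known, the change in velocity over one step reveals which way the thrust pushed; subtracting the gimbal angle leaves an estimate of the rocket’s angle. A minimal sketch, assuming no wind or drag, an engine that is burning during the step, and a hypothetical convention of 0° = up, positive = tilted right, with thrust direction = rocket angle + gimbal angle:

```csharp
using System;

public static class AngleEstimator
{
    public const double Gravity = 9.81; // m/s^2, assumed

    // Estimates the rocket angle (degrees, 0 = up, positive = tilted right)
    // from two velocity samples dt seconds apart and the commanded gimbal angle.
    // Only meaningful while the engine is burning; returns null otherwise.
    public static double? EstimateAngleDegrees(
        double vx0, double vy0, double vx1, double vy1, double dt, double gimbalDegrees)
    {
        // Measured acceleration, with gravity added back to isolate the thrust part.
        double thrustAx = (vx1 - vx0) / dt;
        double thrustAy = (vy1 - vy0) / dt + Gravity;

        if (Math.Sqrt(thrustAx * thrustAx + thrustAy * thrustAy) < 1e-6)
            return null; // no thrust, nothing to infer from

        // Atan2(x, y) measures the angle from vertical, positive to the right.
        double thrustDirection = Math.Atan2(thrustAx, thrustAy) * 180 / Math.PI;
        return thrustDirection - gimbalDegrees;
    }
}
```

Real sensors are noisy, so in practice you would average this over many steps, which is where the “long enough” comes in.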

Then there’s thruster failure, such as a gimbal fault that leaves it able to move only asymmetrically (-45 to +1 degrees). Whilst you’d need to be strapped in, technically you can spin the rocket 350 degrees (to rotate 10 degrees the opposite way). Sick bags are provided on rockets, so we have that covered, but please remove your helmet.

Here are intermittent thrusters in action, the easiest failure to compensate for: it just burns more and still lands, albeit chucking the thruster gimbal around to correct for the unreliable vectoring.
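Both thruster faults can be injected by filtering what the network asks for before the physics sees it. This is a hypothetical sketch (the app’s [F] key may work quite differently): the asymmetric gimbal is a lopsided clamp to -45..+1 degrees, and the intermittent thruster drops thrust to zero on a random fraction of steps. ThrusterFaults and its members are illustrative names.

```csharp
using System;

// Hypothetical fault injection between the network's outputs and the physics.
public sealed class ThrusterFaults
{
    public bool AsymmetricGimbal;      // gimbal stuck to -45..+1 degrees
    public double IntermittentDropout; // 0 = healthy, 0.3 = 30% of steps lose thrust
    private readonly Random _rng;

    public ThrusterFaults(Random rng) => _rng = rng;

    public (double Burn, double Gimbal) Apply(double burn, double gimbalDegrees)
    {
        double gimbal = AsymmetricGimbal
            ? Math.Clamp(gimbalDegrees, -45.0, 1.0)
            : Math.Clamp(gimbalDegrees, -45.0, 45.0);

        // An intermittent thruster simply fails to fire on some steps.
        if (_rng.NextDouble() < IntermittentDropout) burn = 0;

        return (burn, gimbal);
    }
}
```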

Lastly, knowing that sensors have failed is an art in itself. If a sensor fails to provide a reading, it’s probably broken. But what if the reading is wrong? Hence an extremely expensive lesson: “don’t re-calibrate if you don’t know the reason for it”.

That’s where you get into questions like: do I employ multiple sensors and a voting system to try to choose the correct ones? (Like they did on the Space Shuttle; in that case, with multiple computers.)
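A common voting scheme for redundant sensors is mid-value selection: with three sensors, take the median, so a single wildly wrong reading is simply outvoted. Here is a minimal sketch; treating NaN or infinity as “no reading”, the tolerance check and the SensorVoting name are my assumptions, not anything from the app or the Shuttle.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class SensorVoting
{
    // Returns the median of the valid readings, or null if none are usable.
    // NaN or infinity is treated as "sensor provided no reading".
    public static double? Vote(IEnumerable<double> readings)
    {
        double[] valid = readings.Where(r => !double.IsNaN(r) && !double.IsInfinity(r))
                                 .OrderBy(r => r)
                                 .ToArray();
        if (valid.Length == 0) return null;

        int mid = valid.Length / 2;
        return valid.Length % 2 == 1 ? valid[mid] : (valid[mid - 1] + valid[mid]) / 2;
    }

    // Flags a sensor whose reading is missing or strays too far from the voted value.
    public static bool IsSuspect(double reading, double voted, double tolerance)
        => double.IsNaN(reading) || Math.Abs(reading - voted) > tolerance;
}
```

With three sensors the median survives one bad reading, but two sensors that agree on the same wrong value will still win the vote, which is why knowing a sensor has failed remains an art.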

Failure isn’t always fatal; sometimes it can be dealt with because of the sheer speed of intervention by the computer (e.g. above), and sometimes it is fatal. This is with “no altitude” and “inaccurate angle sensor”. But here it “learnt” without those sensors, rather than suddenly losing them. That makes a huge difference.

Press [F] and fail something, then watch a working neural network go berserk killing all onboard. It’s ok, they aren’t real people.

I mention this not to put you off creating a space rocket with auto-land (although I will be clear that I take no responsibility if you are crazy enough to do so), but because I think AI is currently incapable of such a feat. AI can help in the right circumstances, but it is not yet smart enough to save you from certain death.

I am tempting fate; someone smart at OpenAI or Google will prove me wrong.

Falcon 9 lands fine, I hear you say? Yes, but I bet it’s not AI; also, it has at times failed to land upright or on target, and that’s without sensor or thruster failure (not to be dismissive; see the opening paragraph). You can simulate a lot with modern powerful cloud computing and GPUs, but as any engineer will tell you, doing it in the real world introduces new, unforeseen challenges! Funnily enough, lots of things function just fine without AI.

I hope you have as much fun playing with this experiment as I did, and that this post inspired you to try new ideas. If so, please let me know in the comments. Maybe I can feature your cool creation in my blog?