I had a lot of fun with this so am sharing it in the hope you find it interesting and educational.

I like to make frameworks to experiment with, and this was one of the first that I created to help solidify my understanding of certain aspects. It provided moments where I sat and wondered before the penny dropped at my ignorance.

There are more experiments, some yet to build, and some I don’t have time to publish right now. They are part of a much bigger concept regarding primitive lifeforms and teaching them, one that factors in more things and a different, more advanced brain (not a plain perceptron).

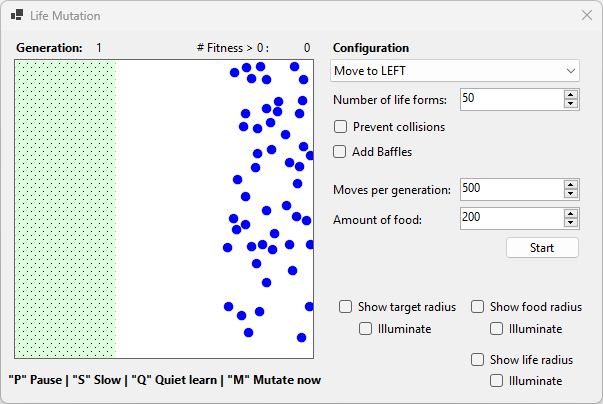

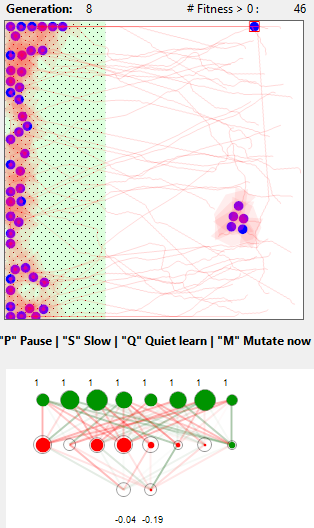

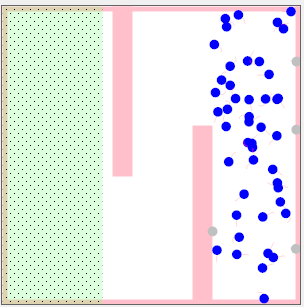

Below is my simple virtual world, with the blue blobs representing my virtual lifeforms.

Game Rules: please all assemble in the green area.

I created a neural network, and they don’t move. Maybe batteries were not included.

If we look at the neural network (which is intentionally small, but the result would be the same with 1000 hidden neurons), the “0” at the top is a concern.

This network has no sensors enabled.

I know you’re probably thinking “Duh, what did you expect?”. Bear with me.

Herein lies the rub: it isn’t a virtual lifeform just because I attached a neural network. The problem with it, hopefully not with you, is the lack of motivation. Without getting into complex sciency stuff, at a basic level the virtual lifeform has no reason to move. It lacks the concept of threat, needs, wants or desires.

All lifeforms do things because of them, be it for self-preservation, avoiding pain, resolving hunger, reproduction, greed etc. Even single-celled organisms with seemingly random movements do so for food.

That segues into the second problem – communicating our desires to get them to do what we want. Rewards don’t help if you have no concept of the benefit of receiving them. Knowing there is a reward at the end of the journey is no use if you don’t know where the “end” is, or what you have to do to get to the “end”.

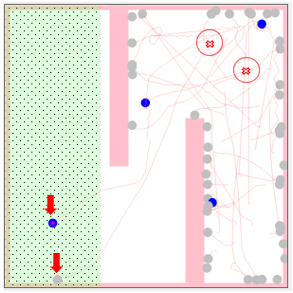

Here they are playing the game. How did I fix it?

Did I magically give them desire? Not really.

The code below is identical to the code the stationary lifeforms ran. But as you saw above, both of their outputs were always 0. They had no desire to move.

```csharp
// ask the AI what to do next? inputs[] => feedforward => outputs[]
// [0] = x offset from current location
// [1] = y offset from current location
DesiredPosition.X = (float)(Location.X + outputFromNeuralNetwork[0] * 300);
DesiredPosition.Y = (float)(Location.Y + outputFromNeuralNetwork[1] * 300);

float angleInDegrees = (float)Utils.RadiansInDegrees((float)Math.Atan2(DesiredPosition.Y - Location.Y, DesiredPosition.X - Location.X));

float deltaAngle = Math.Abs(angleInDegrees - AngleInDegrees).Clamp(0, 30);

// quickest way to get from current angle to new angle turning the optimal direction
float angleInOptimalDirection = (angleInDegrees - AngleInDegrees + 540f) % 360 - 180f;

// limit max of 30 degrees
AngleInDegrees = Utils.Clamp360(AngleInDegrees + deltaAngle * Math.Sign(angleInOptimalDirection));

// close the distance as quickly as possible but without the dog going faster than it should
speed = Utils.DistanceBetweenTwoPoints(Location, DesiredPosition).Clamp(-2, 2);
```
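The `+ 540f) % 360 - 180f` expression is the least obvious part of that code, so here is a minimal standalone sketch of what it does. `ShortestTurn` and `StepTowards` are hypothetical helper names, not part of the original project, and the sketch assumes both angles are already in the 0..360 range. It also clamps the wrapped difference rather than the raw absolute difference, which is a variation of my own and not necessarily how the original behaves.

```csharp
using System;

// Signed shortest rotation from `current` to `target`, in the range [-180, 180).
// Assumes both angles are in 0..360, so the value before `%` is never negative.
static float ShortestTurn(float current, float target) =>
    (target - current + 540f) % 360f - 180f;

// Hypothetical helper: rotate towards `target` by at most `maxStep` degrees.
static float StepTowards(float current, float target, float maxStep)
{
    float turn = ShortestTurn(current, target);
    float step = Math.Clamp(turn, -maxStep, maxStep);
    return ((current + step) % 360f + 360f) % 360f; // wrap back into 0..360
}

Console.WriteLine(ShortestTurn(350f, 10f));     // 20  (through 0, not the long way round)
Console.WriteLine(ShortestTurn(10f, 350f));     // -20
Console.WriteLine(StepTowards(350f, 90f, 30f)); // 20  (wanted +100, limited to +30)
```

Adding 540 (360 + 180) keeps the left-hand side positive before the modulo, and subtracting 180 afterwards re-centres the result around zero, so the sign tells you which way to turn.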

So what’s different? The neural network before received 0 as the input.

Given that perceptron networks compute weight × neuron value + bias, and 0 × anything = 0, every neuron stays at 0. You’re thinking that I am forgetting the bias. No. One of the articles that I read said that for a TanH activation function the optimum initial bias is 0, and that it adjusts with training. Thus weight × 0 + 0 = 0, and TanH(0) is 0 too. Mutation will make the bias non-zero. Starting it at 0 was probably a dumb thing to do for a mutational network.
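To make that arithmetic concrete, here is a small sketch of a single TanH layer. It is not the network from the experiment: `FeedForward`, the weights and the bias values are all made up for illustration. It only shows that zero inputs plus zero biases give zero outputs whatever the weights are, and that a non-zero bias is enough to change that.

```csharp
using System;

// One TanH layer: output[j] = tanh(bias[j] + sum over i of input[i] * weights[j][i]).
static double[] FeedForward(double[] inputs, double[][] weights, double[] biases)
{
    var outputs = new double[biases.Length];
    for (int j = 0; j < biases.Length; j++)
    {
        double sum = biases[j];
        for (int i = 0; i < inputs.Length; i++) sum += inputs[i] * weights[j][i];
        outputs[j] = Math.Tanh(sum);
    }
    return outputs;
}

double[][] demoWeights = { new[] { 0.7, -1.3 }, new[] { 2.1, 0.4 } }; // arbitrary values
double[] zeroInputs = { 0.0, 0.0 };

// Zero inputs and zero biases: every weight is multiplied away, and tanh(0) = 0.
double[] stuck = FeedForward(zeroInputs, demoWeights, new[] { 0.0, 0.0 });

// Same zero inputs, but now a non-zero bias reaches the output.
double[] moving = FeedForward(zeroInputs, demoWeights, new[] { -0.5, 0.1 });

Console.WriteLine($"{stuck[0]}, {stuck[1]}");         // 0, 0
Console.WriteLine($"{moving[0]:F2}, {moving[1]:F2}"); // both non-zero
```

Stacking more layers does not help: a layer that outputs all zeros just hands zero inputs to the next one.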

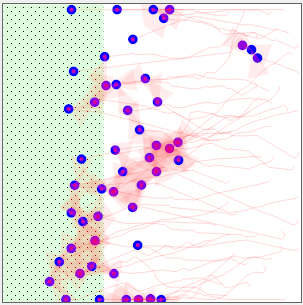

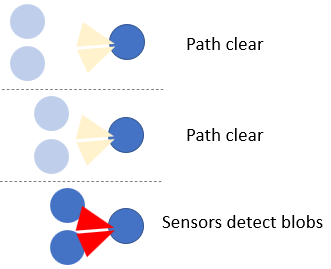

What has made the outputs non-zero is the enabling of the collision sensors as seen below. They are simple triangles in all directions. A red triangle means it sensed another lifeform.

You can barely make out the sensor coverage (very subtle, polygon surrounding them). It’s visible enough to know it works.

If we click on one of the lifeforms (top right) with no close neighbours, the neural network has “1” indicating safe to move (in each direction).

And the next one has detected lifeforms. Think of the sensor 1/0 as:

- 1 = ok to head in this direction
- 0 = blocked from moving in this direction

At this point, you’re probably thinking how does a proximity sensor make it move to where we want when they are unrelated? I am glad you wondered, it means you’re paying attention.

You’re right, the collision sensor has nothing to do with the tendency to go to the left.

The movement left happens because of scoring and mutation preferring those that go left. We score the neural networks as follows: if X of the lifeform is 0 it scores 200 points, X of 1 scores 199 points, etc., and if X of the lifeform is > 199 it gets 0. The sensor helps only because it gives the neural network non-zero inputs, so the weights have something to multiply.

```csharp
switch (Game)
{
    ..
    case Games.reachLeftSide: // 0..200 gives score (0 most), 201..300 = 0 (centre is at 150px)
        fitness = lifeForm.Location.X < 200 ? 200 - lifeForm.Location.X : 0;
        break;
    ...
```

Lifeforms that get the furthest left are preserved, and those that don’t are discarded. True to life, some perfectly good lifeforms are discarded when they get blocked by a cluster of other lifeforms who refuse to move.

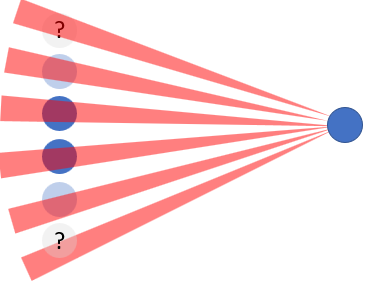

If you’re wondering why they don’t avoid getting blocked, the answer is that avoidance requires luck and route planning. It also depends on how far you can see. In the next example, the lifeform is moving from left to right. It doesn’t see the obstruction ahead.

With a small detection range, what should it do in this instance? Should it go up or down? It could go upwards and find itself unable to find a path because of yet more obstructions. The same could happen in a downward direction. But wait, it gets worse, it now needs to decide between two equal options.

Humans in this quandary would weigh up the directions. When confronted by two equally good choices, they pick one seemingly at random (e.g. heads-n-tails). With two equally bad choices, they will put off the decision until they are left with no choice but to gamble with their gut feeling. The latter isn’t dumb, there are neurons in the gut.

In this particular case, rotating isn’t going to help solve the problem due to the sensor’s range.

You could absolutely increase the sensor range. That would help you spot the gap and deviate sooner. What you’re then up against is predicting the actions of other lifeforms, and they are the cause of our blockage. They may be heading across your path or into the same gap.

I am not trying to discourage anyone from trying, actually the opposite – play and try to make an AI that is smart enough to do this.

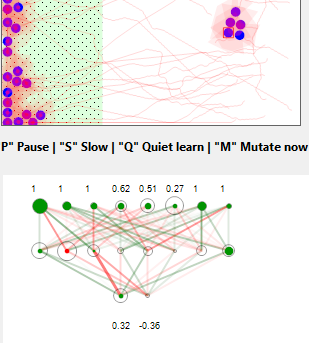

If we stop using “0” as the bias, here’s the same experiment with no sensors, just a single non-functioning input. It works fine. Notice they don’t avoid each other. That’s because we turned off the sensor.

The bias almost always ensures a non-zero output.

If you remember from the code, the two outputs are the “offset” of X and Y. i.e. how much to move the lifeform. The one with a red box moves left and up a little. That’s because each time it’s adding -0.44 to X, and -0.04 to Y. When it reaches the left edge it goes up, because Y still gets -0.04 added (and the X part is ignored).

From mutation, we’ve picked neural networks that always provide a minus X (which happens from the bias). Not magic.

Now, let’s up the ante somewhat by adding an obstruction.

Game Rules: please all assemble on the left, walls are electrified and will kill you.

What do you think will happen?

Maybe worth reiterating, for this to work we have to use wall sensors.

Rarely do any end up in the goal area. Some make it. They tend to be ones that go down and left.

None of them wants to go up, then left, then down, then left.

So what’s going on?

The problem is that they move based on sensors and not because of a reward.

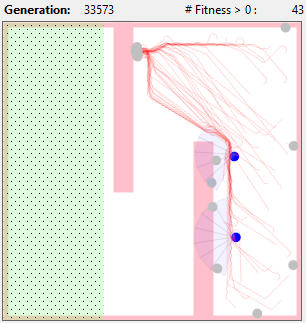

For me, this is the crux. How do I tell the lifeforms what I want them to achieve? After 33,500 generations it has neater, more consistent lines (from the cloning that mutation does), but makes no effort to go down.

We’re rewarding it for failure – it gets points from 200px (50px right of centre) to encourage direction. We’re not punishing it for crashing into the pink areas (we could give it a 0). What happens if we reward it with more points for going downward (as an incentive)?

Nothing. It does the same dumb thing.

My observations are:

- being able to see a large distance makes it less likely to dodge walls.

- they all start in different locations. It’s easier starting nearer the top and heading diagonally to the goal. We should focus on the ones that need to learn both problems.

After repeated attempts, I chose to switch the outputs to speed and rotation. Would it have ever worked without doing that? tbh I don’t know. Anyway, I changed the steering (the same idea as in many of my apps).

So it’s configured/works like this:

```csharp
speed = (float)outputFromNeuralNetwork[c_outputSpeedNeuron] * SpeedAmplifier;
speed = speed.Clamp(0, 3);

AngleInDegrees = (float)outputFromNeuralNetwork[c_outputRotateNeuron] * 360; // 360 = full circle, output of neuron is 0..1
```

Fitness:

```csharp
case Games.reachLeftSide:
    fitness = (180 - lifeForm.Location.X) * 1000;
    if (lifeForm.Location.X < 100) fitness += 1000000;
    fitness += lifeForm.Location.X < 180 ? 290 - lifeForm.Location.Y : 0;
    break;
```
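To get a feel for how those three terms compare, here is the same rule pulled out into a standalone function and applied to a few hand-picked positions. The function name `Fitness`, the `float` parameters and the sample coordinates are assumptions for illustration only.

```csharp
using System;

// The scoring rule above as a standalone function (hypothetical name and signature).
static float Fitness(float x, float y)
{
    float fitness = (180 - x) * 1000;  // dominant term: every pixel further left is worth 1000
    if (x < 100) fitness += 1000000;   // large bonus once X drops below 100
    fitness += x < 180 ? 290 - y : 0;  // small tie-breaker: a smaller Y scores slightly more
    return fitness;
}

Console.WriteLine(Fitness(250, 200)); // -70000   (still right of X = 180, so negative)
Console.WriteLine(Fitness(150, 50));  // 30240    (30000 for X, plus 240 for Y)
Console.WriteLine(Fitness(50, 50));   // 1130240  (130000 for X, 1000000 bonus, 240 for Y)
```

The Y term can never add more than 290 points, so it only separates lifeforms that got equally far left, while the bonus dwarfs everything else.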

Fix the starting position to go roughly bottom right:

```csharp
case Scoring.Games.reachLeftSide: // rectangle on left 1/3
    bounds = new(200, 155, 285-195, 285-160);
    break;
```

Set the sensor to be quite small.

```csharp
double DepthOfVisionInPixels = 20;
```

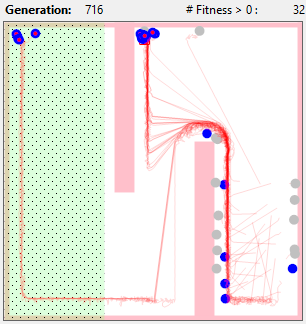

Yay, it did it! tbh I am as surprised as you. Here it is in action to prove I didn’t just Photoshop it:

Over time, because we are scoring the top left higher, the ones in the middle will decrease, and the ones in the top left will replace them. It does that because of mutation and scoring: reaching the left gives significantly more points.

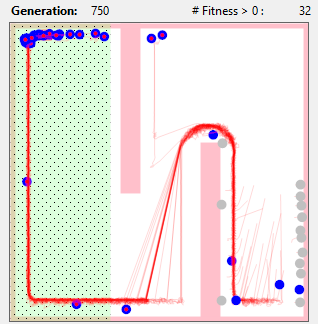

27 generations later, you can see that happen:

How big is the neural network then?

n sensors + 2 outputs. Oh, that’s linear.

It’s worth being mindful that with mutation we are copying the top 50% over the bottom 50% and mutating the latter. Some of the copies will not work better, and will actually work worse. That’s not a flaw, but a reality of mutation.
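That "copy the top half over the bottom half, then mutate the copies" step can be sketched roughly as follows. This is a guess at the general shape, not the code from the experiment: a brain is reduced to a plain weight array, and `NextGeneration`, the single-weight nudge and the `mutationSize` parameter are all assumptions.

```csharp
using System;

// Hypothetical sketch: a "brain" is reduced to a weight array plus its fitness score.
static void NextGeneration((double[] Weights, double Fitness)[] brains, Random rng, double mutationSize)
{
    // Best first, so the survivors occupy the first half of the array.
    Array.Sort(brains, (a, b) => b.Fitness.CompareTo(a.Fitness));
    int half = brains.Length / 2;

    for (int i = 0; i < half; i++)
    {
        // Overwrite a discarded brain with a copy of a survivor...
        double[] child = (double[])brains[i].Weights.Clone();

        // ...then nudge one randomly chosen weight. The nudge may help or hurt.
        int index = rng.Next(child.Length);
        child[index] += (rng.NextDouble() * 2 - 1) * mutationSize;

        brains[half + i] = (child, 0.0); // fitness is re-scored in the next generation
    }
}

var population = new (double[] Weights, double Fitness)[]
{
    (new[] { 0.1, 0.2 }, 10.0),
    (new[] { 0.3, 0.4 }, 200.0),
    (new[] { 0.5, 0.6 }, 0.0),
    (new[] { 0.7, 0.8 }, 150.0),
};

NextGeneration(population, new Random(1), 0.1);

// The two best brains (fitness 200 and 150) survive unchanged in slots 0 and 1.
// Slots 2 and 3 now hold mutated copies of them.
foreach (var (brainWeights, brainFitness) in population)
    Console.WriteLine($"{brainFitness}: [{string.Join(", ", brainWeights)}]");
```

Because the survivors are left untouched, a bad mutation costs nothing permanent: the worse copy simply lands in the bottom half next time and gets overwritten.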

Watch the one with a square as it reaches the top. It’s thumping out GREEN in output neuron 1 (speed). Then the front sensors turn green and the speed output changes to a huge RED. That’s it hitting the brakes in real-time!

Given enough time, and the right scoring/network it achieves success at the “game”.

The major difference is the control mechanism. At all times it is tracking the edge. That’s why it’s successful.

Could I have built something to solve this particular problem without AI? Absolutely, with far less effort. But it was fun!

On page 2 we try a different game.