Game Rules: all life forms, eat as much as you want.

This one caused me immense frustration and a lot of head-scratching; it took several weeks to get working.

For this, make sure you set the following in Config.cs:

    internal static bool MultipleDirectionOutputNeurons = false;
    internal static bool MoveToDesiredPoint = true;

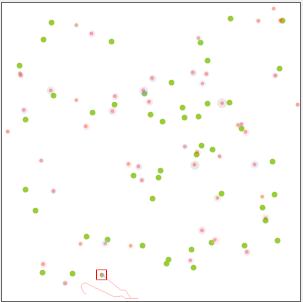

The idea is simple. You have blobs, and they wander around eating as many food dots (green) as they can. Below, the one with a square is the blob I am “watching”. UI-wise, the first hurdle is how you represent competing lifeform blobs. They all start in a random place, to ensure they are not just learning a route from a specific location (e.g. always heading upwards). With each gobbling its own food, how can I visualise what one particular blob did?

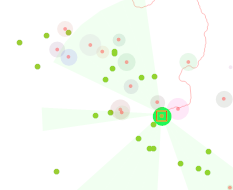

I came up with what I think works well. You click on a lifeform, and it shows that lifeform along with its path and the food disappearing as it gobbles.

Green = food. Blobs start at 4px and grow as they eat. All blobs are visible, but the one being watched has a red square and is more prominent.

Here it is in action; as you can see, it is hoovering up the food. It stops after 500 moves (because I set that limit). I was so pleased when I realised this actually works. The initial build was to have lifeforms competing. Whilst that was my goal, the problem was that it was hard to be sure it worked.

Why is this cool? It’s sensing multiple food items and heading towards them. Given the wiggly lines, it isn’t taking the shortest route, but nonetheless, it is doing something clever. What’s more, this neural network is Input > Output (linear) with NO hidden neurons!
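To make the “no hidden neurons” point concrete, here is a minimal sketch of what an input-to-output network boils down to: each output is just a weighted sum of the inputs plus a bias. This is not the code from the repository; the names `LinearBrain` and `FeedForward`, the weight layout, and the absence of an activation function are all assumptions made for illustration.

```csharp
using System;

// Hypothetical sketch: with no hidden layer, each output neuron is
// simply a weighted sum of every input, plus a bias.
public static class LinearBrain
{
    // weights[o, i] is the weight from input i to output o (assumed layout).
    // biases[o] is added to output o.
    public static double[] FeedForward(double[,] weights, double[] biases, double[] inputs)
    {
        int outputCount = weights.GetLength(0);
        int inputCount = weights.GetLength(1);

        if (inputs.Length != inputCount || biases.Length != outputCount)
            throw new ArgumentException("Dimensions of weights, biases and inputs must agree.");

        var outputs = new double[outputCount];

        for (int o = 0; o < outputCount; o++)
        {
            double sum = biases[o];

            for (int i = 0; i < inputCount; i++)
                sum += weights[o, i] * inputs[i];

            // No activation function is applied here; that is an assumption of this sketch.
            outputs[o] = sum;
        }

        return outputs;
    }
}
```

With `MoveToDesiredPoint = true`, one plausible reading is that the two outputs are treated as the point the blob wants to move to, but how the real project interprets its outputs is not shown here.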

The code for this is on GitHub.

It’s important to realise that as complexity in the environment comes into play, basic algorithms fall apart (AI or not). I don’t think an AI can be a single neural network; rather, it needs to be split into multiple task-specific networks managed by a higher-functioning network, in the same way the brain has areas with very specific functions, all connected.

For example, if we changed the food task to have 2 competing priorities by introducing a predator, we have functions for avoidance and finding food. It’s no good finding food if you are promptly eaten!

In other words, you weigh up the rewards (or consequences) of your actions, whilst at the same time trying to second-guess free-thinking lifeforms within your immediate environment. Some of those are dangerous; some cooperate or at least leave you alone.
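As a rough illustration of the food-versus-predator trade-off above, here is a sketch of the simplest possible “manager”: it blends a direction towards food with a direction away from a predator, weighted by how much danger the blob perceives. Nothing like this exists in the project yet; `PriorityArbiter`, `Blend`, and the 0 to 1 danger scale are hypothetical, and a real higher-level network would presumably learn this weighting rather than have it hard-coded.

```csharp
using System;

// Hypothetical sketch: arbitrates between two task-specific outputs.
public static class PriorityArbiter
{
    // danger is assumed to be in the range 0..1:
    // 0 = no predator nearby (follow the food), 1 = imminent threat (flee).
    public static (double X, double Y) Blend(
        (double X, double Y) towardFood,
        (double X, double Y) awayFromPredator,
        double danger)
    {
        // Clamp so out-of-range values cannot over-weight either behaviour.
        double d = Math.Clamp(danger, 0.0, 1.0);

        return (
            (1 - d) * towardFood.X + d * awayFromPredator.X,
            (1 - d) * towardFood.Y + d * awayFromPredator.Y);
    }
}
```

A linear blend is only one option; a hard switch (“flee if danger exceeds a threshold”) would be another, and which works better is an open question.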

I guess this is where the idea for reinforcement learning (RL) came from – life is about rewards vs. consequences vs. nothing.

Life can be distilled into sequential decisions that cannot be rewound to try alternative paths. Some are serious (like your death or that of others), so real-life learning needs to be extremely efficient and accurate. Many decisions are too complex to predict accurately very far ahead.

Reinforcement learning is an amazing idea when used correctly, but every decision that has an impact multiplies the possibilities. If you can restrict the possibilities, it works well. I am in awe of some of the things I’ve seen it used for.

I just don’t think RL is the way forward. It’s too inefficient a way to learn.

That being said, if someone wants to replace my dumb lifeforms with RL and it works much better, please let me know.

I hope you enjoyed this post and that it has sparked your curiosity. Please let me know in the comments. If folks show enough interest, I’ll add predators and avoidance.