If you have read my other posts, you’ll know I generally use one of two approaches to learning. It’s not that I couldn’t expand; my goal is to have fun and make sure I deeply understand something before moving on to the next level (or, as it often turns out, the next fad).

So we can backpropagate our sheep (no funny jokes, please), or we can mutate them (not turning them green or giving them strange deformities) using bias/weighting mutation.

Having tried both, I was forced to rethink by the disobedience of my sheep. It’s like they have a mind of their own, even though the virtual sheep don’t have a neural network.

This experiment taught me a lot about the limitations (and, quite frankly, the dumbness) of perceptron networks and the unforgiving laws of chance. I didn’t realise how much computational power it would probably need if I wanted perfection. I honestly thought this would work like Pong 3.0: you herd the sheep a few times, and it learns.

Let’s start with the “easier” one: backpropagation.

We first create training data containing inputs and the desired outputs. Then we repeatedly push the inputs and outputs through the neural network via backpropagation until the error between the network’s output and the desired output is sufficiently small for all points.

Ooh, you’re thinking Dave has finally used a “real” neural network that has multiple layers. I know, for once the problem actually seemed to need it.

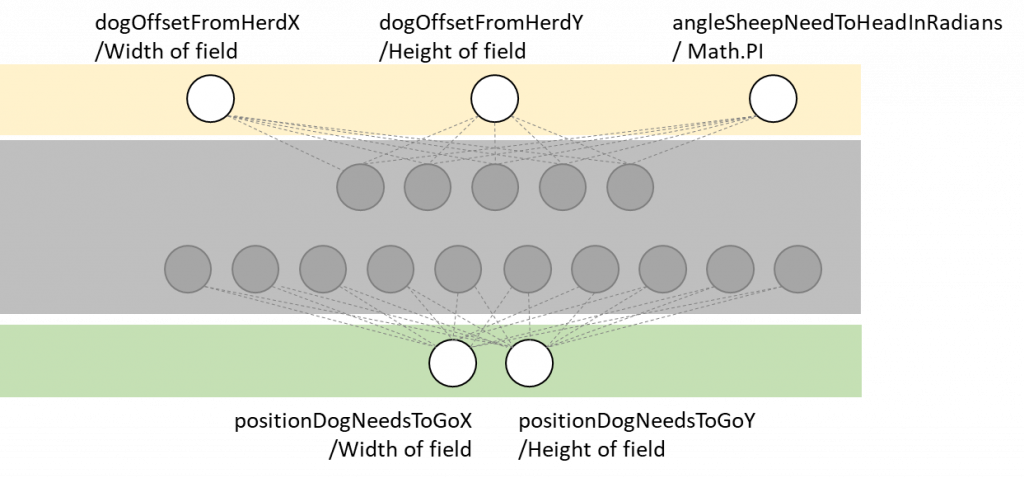

INPUT: 3

HIDDEN: 5, 10 (2 layers)

OUTPUT: 2

I don’t know what the sweet spot might be in terms of hidden neurons, and training time plays just as big a part in that. I don’t have the time to spend hours trying different combinations.
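To make the training procedure concrete, here is a minimal, self-contained C# sketch of a 3-5-10-2 network trained by backpropagation until the total error drops below a threshold. It is not the author’s network code: the tanh activations, per-row gradient descent, the 0.1 learning rate, the 0.001 threshold and the four training rows are all assumptions chosen for illustration.

```csharp
using System;

// Sketch only (not the author's network code): a 3-5-10-2 perceptron trained by backpropagation.
// Assumed: tanh on every layer, per-row gradient descent, and four made-up training rows.
int[] layers = { 3, 5, 10, 2 };
var rng = new Random(1);
var weights = new double[layers.Length - 1][][]; // weights[l][j][i]: neuron i in layer l -> neuron j in layer l+1
var biases = new double[layers.Length - 1][];
int paramCount = 0;
for (int l = 0; l < layers.Length - 1; l++)
{
    weights[l] = new double[layers[l + 1]][];
    biases[l] = new double[layers[l + 1]];
    paramCount += layers[l + 1] * (layers[l] + 1); // incoming weights plus one bias per neuron
    for (int j = 0; j < layers[l + 1]; j++)
    {
        weights[l][j] = new double[layers[l]];
        for (int i = 0; i < layers[l]; i++) weights[l][j][i] = rng.NextDouble() - 0.5;
    }
}

// Made-up rows: (dogOffsetX, dogOffsetY, angle / PI) -> (dogTargetX, dogTargetY).
double[][] inputs = { new[] { 0.1, 0.2, 0.5 }, new[] { -0.2, 0.1, -0.5 }, new[] { 0.3, -0.1, 0.0 }, new[] { -0.1, -0.3, 1.0 } };
double[][] targets = { new[] { 0.1, -0.1 }, new[] { -0.1, 0.1 }, new[] { 0.2, 0.0 }, new[] { -0.2, 0.0 } };

// Forward pass: returns every layer's activations (index 0 is the input).
double[][] Forward(double[] input)
{
    var a = new double[layers.Length][];
    a[0] = input;
    for (int l = 0; l < layers.Length - 1; l++)
    {
        a[l + 1] = new double[layers[l + 1]];
        for (int j = 0; j < layers[l + 1]; j++)
        {
            double sum = biases[l][j];
            for (int i = 0; i < layers[l]; i++) sum += weights[l][j][i] * a[l][i];
            a[l + 1][j] = Math.Tanh(sum);
        }
    }
    return a;
}

// One backpropagation step on a single row: compute deltas from the output backwards, then update.
void Train(double[] input, double[] target, double learningRate)
{
    double[][] a = Forward(input);
    int last = layers.Length - 1;
    var delta = new double[layers.Length][];
    delta[last] = new double[layers[last]];
    for (int j = 0; j < layers[last]; j++)
        delta[last][j] = (a[last][j] - target[j]) * (1 - a[last][j] * a[last][j]); // error * tanh'
    for (int l = last - 1; l >= 1; l--) // push the error back through the hidden layers
    {
        delta[l] = new double[layers[l]];
        for (int i = 0; i < layers[l]; i++)
        {
            double sum = 0;
            for (int j = 0; j < layers[l + 1]; j++) sum += weights[l][j][i] * delta[l + 1][j];
            delta[l][i] = sum * (1 - a[l][i] * a[l][i]);
        }
    }
    for (int l = 0; l < last; l++) // gradient descent on every weight and bias
        for (int j = 0; j < layers[l + 1]; j++)
        {
            for (int i = 0; i < layers[l]; i++) weights[l][j][i] -= learningRate * delta[l + 1][j] * a[l][i];
            biases[l][j] -= learningRate * delta[l + 1][j];
        }
}

// Total squared error over all rows, used as the "sufficiently small" stopping test.
double TotalError()
{
    double total = 0;
    for (int r = 0; r < inputs.Length; r++)
    {
        double[] output = Forward(inputs[r])[layers.Length - 1];
        for (int j = 0; j < output.Length; j++) total += Math.Pow(output[j] - targets[r][j], 2);
    }
    return total;
}

double initialError = TotalError();
const double threshold = 0.001;
int epoch = 0;
while (TotalError() > threshold && epoch < 20000)
{
    for (int r = 0; r < inputs.Length; r++) Train(inputs[r], targets[r], 0.1);
    epoch++;
}
Console.WriteLine($"{paramCount} parameters, {epoch} epochs, error {initialError:F4} -> {TotalError():F6}");
```

With one bias per neuron, the 3-5-10-2 shape has 3×5+5 + 5×10+10 + 10×2+2 = 102 trainable parameters. Tanh is used on the output layer here so the offsets can be negative; a sigmoid output would need the targets shifted into 0..1.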

You may be thinking: if you know exactly the right data, why do you need AI? Well, I don’t; I already demonstrated it working with heuristics. Let’s see how the backpropagation AI works in practice. It managed all but one.

Here’s the full run of another:

OK, it missed a couple of sheep, but pretty cool, right?

Remember, it works because the dog knows where the sheep need to be. No whistling is required; the little waypoint blobs are reminder enough.

Some courses highlight limitations in the algorithm. This one is fine for all but the last bit. It goes wrong because at the bottom of the inner loop it cannot get below the sheep, so it drives through them. That starts a fatal split.

I hope you still like it.

Can I do better? The first time, nope. I made a pig’s ear of it. But the second time, I did “ok”.

Try it. I was relieved that I could do better than my AI. But I still didn’t manage all of them.

This was taught using the algorithm we covered previously.

That translates as follows:

```csharp
private static void ComputePointOnTheSheepCircleThatTheDogNeedsToGoTo(float sheepRadius, float arc, PointF centreOfMass, double desiredAngleInRadians, out PointF backwardsPointForLine, out PointF closest)
{
    // subtracting PI points to the opposite side of the herd from where the sheep should go
    float cosDesiredAngle = (float)Math.Cos(desiredAngleInRadians - Math.PI);
    float sinDesiredAngle = (float)Math.Sin(desiredAngleInRadians - Math.PI);

    // point on the edge of the sheep circle, behind the herd
    PointF desiredPointAtTheEdgeOfSheepCircle = new((float)(centreOfMass.X + cosDesiredAngle * sheepRadius),
                                                    (float)(centreOfMass.Y + sinDesiredAngle * sheepRadius));

    // away from destination thru CoM
    backwardsPointForLine = new PointF((int)(centreOfMass.X + Math.Cos(desiredAngleInRadians - Math.PI) * arc),
                                       (int)(centreOfMass.Y + Math.Sin(desiredAngleInRadians - Math.PI) * arc));

    // closest is the point on the line (from opposite side of CoM) to the dog
    MathUtils.IsOnLine(centreOfMass, backwardsPointForLine, desiredPointAtTheEdgeOfSheepCircle, out closest);
}
```

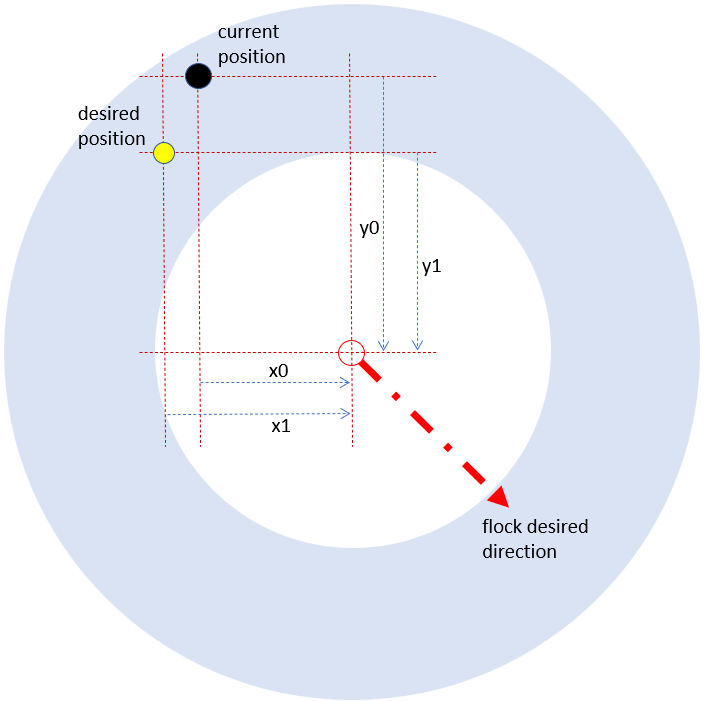
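MathUtils.IsOnLine isn’t shown in this post. A common way to find the closest point on a line segment is to project the point onto the segment and clamp the result to its ends; the sketch below does that with value tuples instead of PointF. ClosestPointOnSegment is a hypothetical name, and the author’s helper may behave differently (for example at the segment ends).

```csharp
using System;

// Hypothetical stand-in for MathUtils.IsOnLine, whose source isn't shown: project point p onto
// the segment a -> b and return the nearest point on that segment.
(double X, double Y) ClosestPointOnSegment((double X, double Y) a, (double X, double Y) b, (double X, double Y) p)
{
    double dx = b.X - a.X, dy = b.Y - a.Y;
    double lengthSquared = dx * dx + dy * dy;
    if (lengthSquared == 0) return a; // degenerate segment: a and b coincide
    // t = how far along a -> b the projection lands (0 at a, 1 at b), clamped to stay on the segment
    double t = Math.Clamp(((p.X - a.X) * dx + (p.Y - a.Y) * dy) / lengthSquared, 0.0, 1.0);
    return (a.X + t * dx, a.Y + t * dy);
}

Console.WriteLine(ClosestPointOnSegment((0, 0), (10, 0), (5, 3)));  // (5, 0)
Console.WriteLine(ClosestPointOnSegment((0, 0), (10, 0), (15, 2))); // (10, 0): clamped to the end b
```

If the point already lies on the segment, the projection returns it unchanged.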

That is turned into the expected output for the neural network using the following, which computes x1,y1 as an offset from the centre of mass. To keep the values small (roughly -1..1, since an offset can be negative), we scale them by the width and height of the sheep field.

```csharp
double[] output = new double[] {
    (closest.X - centreOfMass.X) / LearnToHerd.s_sizeOfPlayingField.Width,
    (closest.Y - centreOfMass.Y) / LearnToHerd.s_sizeOfPlayingField.Height
};
```
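Using the network’s prediction means running that scaling in reverse. The sketch below pairs a Normalise helper that mirrors the expected-output calculation with a Denormalise helper that inverts it; both names and their tuple-based signatures are hypothetical, not part of the original code.

```csharp
using System;

// Hypothetical helpers, not from the original code. Normalise mirrors the expected-output scaling
// (offset from the centre of mass divided by the field size); Denormalise inverts it so a
// network prediction can be turned back into a point in the field.
(double X, double Y) Normalise((double X, double Y) target, (double X, double Y) centreOfMass, double width, double height) =>
    ((target.X - centreOfMass.X) / width, (target.Y - centreOfMass.Y) / height);

(double X, double Y) Denormalise((double X, double Y) output, (double X, double Y) centreOfMass, double width, double height) =>
    (centreOfMass.X + output.X * width, centreOfMass.Y + output.Y * height);

// A 300x300 field (the size PlotDogSheepInteractionDogStatic uses) with the herd in the middle.
var centre = (X: 150.0, Y: 150.0);
var scaled = Normalise((225, 112.5), centre, 300, 300); // (0.25, -0.125): the y offset is negative
var restored = Denormalise(scaled, centre, 300, 300);   // back to (225, 112.5)
Console.WriteLine($"{scaled} -> {restored}");
```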

Great, so we can do the maths easily, but we’re missing something. You guessed it: something that calls ComputePointOnTheSheepCircleThatTheDogNeedsToGoTo().

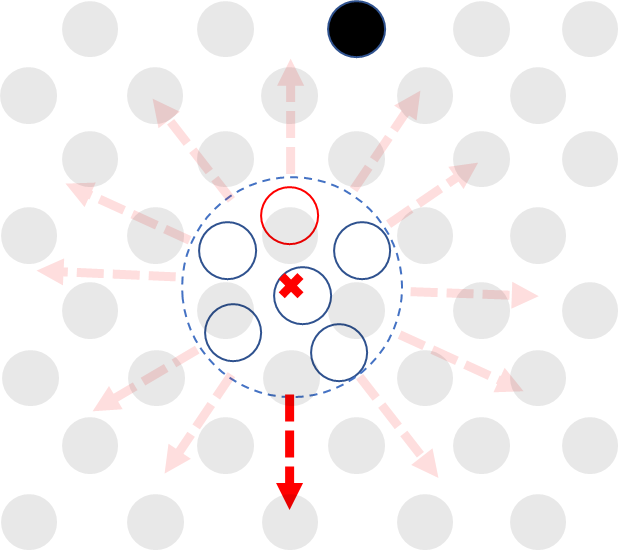

We position the dog at various points around the herd (the x and y loops), and for each position we try every angle in which we might want the sheep to go. In the image, black/grey marks the positions of the dog, and the arrows indicate the directions for the sheep to move.

```csharp
// At the top of the file:
using System;
using System.Collections.Generic;
using System.Drawing;
using System.IO;

// Inside the test class:
public void PlotDogSheepInteractionDogStatic()
{
    LearnToHerd.s_sizeOfPlayingField = new Size(300, 300);

    InitialiseSheep(out float radius);
    radius = Dog.ClosestDogMayIntentionallyGetToSheepMass();

    PointF centreOfMass = LearnToHerd.s_flock[0].TrueCentreOfMass();

    // "using" flushes and closes the file even if something throws part-way through
    using StreamWriter sw = new(@"D:\TEMP\training.dat");

    // for each angle the sheep may want to go, try every position of the dog
    for (int desiredAngle = 0; desiredAngle < 360; desiredAngle++)
    {
        // position the dog around the sheep on a 10-pixel grid
        for (int x = (int)(centreOfMass.X - Config.DogSensorOfSheepVisionDepthOfVisionInPixels + 1); x < (int)(centreOfMass.X + Config.DogSensorOfSheepVisionDepthOfVisionInPixels); x += 10)
        {
            for (int y = (int)(centreOfMass.Y - Config.DogSensorOfSheepVisionDepthOfVisionInPixels + 1); y < (int)(centreOfMass.Y + Config.DogSensorOfSheepVisionDepthOfVisionInPixels); y += 10)
            {
                LearnToHerd.s_flock[0].dog.Position = new PointF(x, y);
                LearnToHerd.s_flock[0].dog.DesiredPosition = LearnToHerd.s_flock[0].dog.Position;

                // skip points outside the distance circle from the CoM (approximation, not for all sheep)
                if (MathUtils.DistanceBetweenTwoPoints(LearnToHerd.s_flock[0].dog.Position, centreOfMass) > Config.DogSensorOfSheepVisionDepthOfVisionInPixels) continue;

                List<double> inputToAI = new()
                {
                    // sheep dogs know where they are in the field, so we give that to the AI
                    (centreOfMass.X - LearnToHerd.s_flock[0].dog.Position.X) / LearnToHerd.s_sizeOfPlayingField.Width,
                    (centreOfMass.Y - LearnToHerd.s_flock[0].dog.Position.Y) / LearnToHerd.s_sizeOfPlayingField.Height
                };

                float arc = (int)Config.DogSensorOfSheepVisionDepthOfVisionInPixels;
                double angleInRads = MathUtils.DegreesInRadians(desiredAngle);
                float xDesiredPosition = (float)(centreOfMass.X + Math.Cos(angleInRads) * arc);
                float yDesiredPosition = (float)(centreOfMass.Y + Math.Sin(angleInRads) * arc);
                LearnToHerd.s_flock[0].DesiredLocation = new PointF(xDesiredPosition, yDesiredPosition);

                double desiredAngleInRadians = Math.Atan2(yDesiredPosition - centreOfMass.Y, xDesiredPosition - centreOfMass.X);
                inputToAI.Add(desiredAngleInRadians / Math.PI); // Atan2 gives -PI..PI, so this is -1..1

                ComputePointOnTheSheepCircleThatTheDogNeedsToGoTo(radius, arc, centreOfMass, desiredAngleInRadians, out PointF bpl, out PointF closest);

                double[] output = new double[] {
                    (closest.X - centreOfMass.X) / LearnToHerd.s_sizeOfPlayingField.Width,
                    (closest.Y - centreOfMass.Y) / LearnToHerd.s_sizeOfPlayingField.Height
                };

                // one CSV row: 3 inputs followed by 2 expected outputs
                sw.WriteLine($"{string.Join(",", inputToAI)},{string.Join(",", output)}");
            }
        }
    }
}
```
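To see where the row count comes from, the hypothetical CountTrainingRows below replays the same loop bounds (a 10-pixel grid, skipping dog positions beyond the vision depth) and multiplies by the 360 angles. It assumes MathUtils.DistanceBetweenTwoPoints is plain Euclidean distance; the centre and depth values in the example are made up.

```csharp
using System;

// Sketch: counts the rows one herd produces, using the same loop bounds as
// PlotDogSheepInteractionDogStatic. CountTrainingRows is a hypothetical name.
int CountTrainingRows(float centreX, float centreY, float depth)
{
    int positions = 0;
    for (int x = (int)(centreX - depth + 1); x < (int)(centreX + depth); x += 10)
        for (int y = (int)(centreY - depth + 1); y < (int)(centreY + depth); y += 10)
        {
            double dx = x - centreX, dy = y - centreY;
            if (Math.Sqrt(dx * dx + dy * dy) <= depth) positions++; // dog is inside the vision circle
        }
    return positions * 360; // one row per desired angle (0..359 degrees) for each dog position
}

Console.WriteLine(CountTrainingRows(150, 150, 10)); // 1080: 3 dog positions x 360 angles
```

The 220,000-row figure quoted further down works out at roughly 611 dog positions per angle (220,000 ÷ 360).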

The training data written to the file is:

dogOffsetFromHerdX,dogOffsetFromHerdY,angleSheepWantToHeadInRadians,positionDogNeedsToGoX,positionDogNeedsToGoY

i.e. where the dog is with respect to the herd and where we need to steer the sheep (the inputs), followed by where the dog needs to head relative to the sheep (the outputs). Note that the angle is written divided by π, so it lies in -1..1 rather than being raw radians.
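Reading a row back is a matter of splitting on commas. This sketch (ParseTrainingRow is a hypothetical name, and the sample row is made up) assumes '.' as the decimal separator. Because the writer formats the doubles with the current culture, a machine that uses ',' for decimals would produce a file that can’t be split this way.

```csharp
using System;
using System.Globalization;
using System.Linq;

// Sketch: split one row of training.dat into the three network inputs and two expected outputs.
(double[] Inputs, double[] Outputs) ParseTrainingRow(string line)
{
    double[] values = line.Split(',').Select(v => double.Parse(v, CultureInfo.InvariantCulture)).ToArray();
    if (values.Length != 5) throw new FormatException($"Expected 5 values, got {values.Length}");
    return (values[..3], values[3..]); // first 3 = inputs, last 2 = expected outputs
}

var (inputs, outputs) = ParseTrainingRow("0.1,-0.05,0.5,0.02,-0.03");
Console.WriteLine($"{inputs.Length} inputs, {outputs.Length} outputs"); // 3 inputs, 2 outputs
```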

The “trained network” is saved to “c:\temp\sheep0-UHA.ai” (UHA=User, Heuristic, AI).

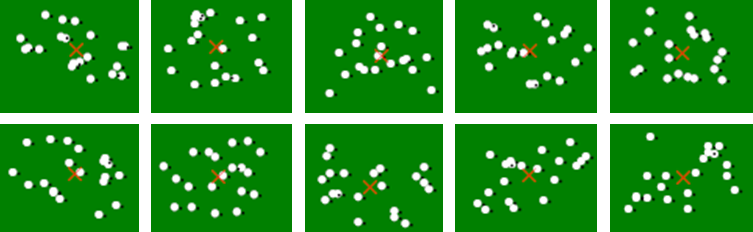

One flaw in this, and here’s where “generalisation” gets messy: InitialiseSheep() is run ONCE, so the training data only reflects one herd, while the positions with respect to the sheep mass vary slightly with different herds. Here is the result of 10 runs; you can see what the AI has to contend with. The sheep starting positions are random, so each time the sheep behave slightly differently.

Depending on the sheep, it may not work as well. This time it lost 3 sheep, and then some ended up going to the left of the pen. The centre of mass is skewed by the stragglers, so the dog doesn’t steer soon enough.

Sheep really don’t like being trained. TBH, would you want to repeat something 200,000 times?

My initial belief was that we could seed the AI with an “ok-ish” model and refine it with manual training (copying the user). That has some fundamental flaws: (1) a human is unlikely to position the dog in the same place as the AI, and there is no guarantee they won’t make a mess of it; (2) a human would get bored long before they provided enough data points.

With generated training data, I wondered at first whether it would ever train via backpropagation; it took that long (hours). I tried to use the error calculated during backpropagation to decide when it was worth testing the output against the expected values. This was necessary because there were 220,000 rows in the training file (360 angles × the x/y dog positions). I even tried training on 10%, 25%, 50% and then 100% of the data, saving the neural network at each point; i.e. it has to solve the first 10%, then we add another 15% and let it find the solution, and so on until we’ve covered all of it.
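The staged approach can be sketched as follows. Only the staging logic is shown: trainUntilConverged and saveNetwork are hypothetical callbacks standing in for the real backpropagation and save code, and StageSizes/TrainInStages are illustrative names.

```csharp
using System;
using System.Collections.Generic;

// Sketch: cumulative stages of 10%, 25%, 50% and 100% of the rows, saving after each stage.
List<int> StageSizes(int totalRows, double[] fractions)
{
    var sizes = new List<int>();
    foreach (double f in fractions) sizes.Add((int)Math.Round(totalRows * f));
    return sizes;
}

void TrainInStages(int totalRows, Action<int> trainUntilConverged, Action<int> saveNetwork)
{
    foreach (int rows in StageSizes(totalRows, new[] { 0.10, 0.25, 0.50, 1.00 }))
    {
        trainUntilConverged(rows); // hypothetical: backpropagate over the first `rows` rows until the error is small
        saveNetwork(rows);         // hypothetical: checkpoint, so a long run isn't lost
    }
}

TrainInStages(220_000,
    rows => Console.WriteLine($"training on the first {rows} rows"),
    rows => Console.WriteLine($"saved network after {rows} rows"));
```

One design point to watch if the stages take rows in file order: the generator writes all dog positions for 0°, then 1°, and so on, so the first 10% would only cover roughly the first 36 angles. Shuffling the rows first may give each stage a more representative sample.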

To create the training data, go into the Test Explorer and run PlotDogSheepInteractionDogAngGenerateTestData(). Uncomment the two #defines, especially the second one (if you want the data!). The first is there because I have learnt my lesson: it draws each training point to a .PNG via Bitmap so you can check the code isn’t flawed. Imagine waiting 2 hours and finding the calculation is nonsense.

```csharp
#define drawingSteps
#define writeTrainingData
```

If we want to train it well enough to handle all flocks, we’d need to create training data for a number of different random flocks. Given it is already 220,000 rows of data for one flock, just 10 flocks (which barely scratches the surface of the randomness) would mean backpropagating 2.2 million rows. If I were to consider that, I think it’s time to use GPU code.

Here’s course 3, which is too difficult because the fences are too close, and damage is caused early on by the unavoidable separation of sheep.

If the sheep get split up too much, it is game over. The centre of mass (the average position of all sheep) ends up in the middle, between the groups, which is useless for steering them.
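The centre of mass is simply the mean of the sheep positions, which is why a few stragglers matter so much. The sketch below uses made-up positions and a hypothetical CentreOfMass helper: two stragglers pull the x coordinate from 100 to 130, even though five of the seven sheep are clustered around (100, 100).

```csharp
using System;
using System.Linq;

// Sketch: centre of mass as the plain average of sheep positions (made-up example values).
(double X, double Y) CentreOfMass((double X, double Y)[] sheep) =>
    (sheep.Average(s => s.X), sheep.Average(s => s.Y));

var flock = new (double X, double Y)[] { (100, 100), (102, 98), (98, 102), (101, 101), (99, 99) };
var withStragglers = flock.Concat(new (double X, double Y)[] { (200, 100), (210, 100) }).ToArray();

Console.WriteLine(CentreOfMass(flock));          // (100, 100)
Console.WriteLine(CentreOfMass(withStragglers)); // (130, 100): dragged towards the stragglers
```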

Course Selection

In Form1, you can choose between 3 courses. If you want to add more, create a class that implements ICourse.

```csharp
/// <summary>
/// Enable different courses.
/// </summary>
readonly ICourse Course = new Course1();
```

Competition?

Just because I could, I have provided all three (user, heuristics and AI), all competing with identically positioned starting flocks.

The code for this is on GitHub.

On page 4, we’ll continue with AI but use mutation to learn.