I promised to explain an alternate way to train the virtual dogs, using mutation.

Until you’ve tried it, it’s easy to think this should be fairly routine: my cars happily whizz around tracks after training by mutation, despite the physics thrown at them. Why can’t AI/ML work out the technique given enough generations?

In reality, I spent weeks getting this right. I had a number of problems, some of my own making, and some venturing into slightly new territory. I also wanted to up the ante a little. Along the way were lots of failures. Never get disheartened when things just don’t seem to work.

Understanding the environment

You could simulate the sheep, the dog and their progress without any UI; after all, behind the scenes it is just a number of calculations and data points. That’s no fun, and we’re here to combine AI/ML with fun.

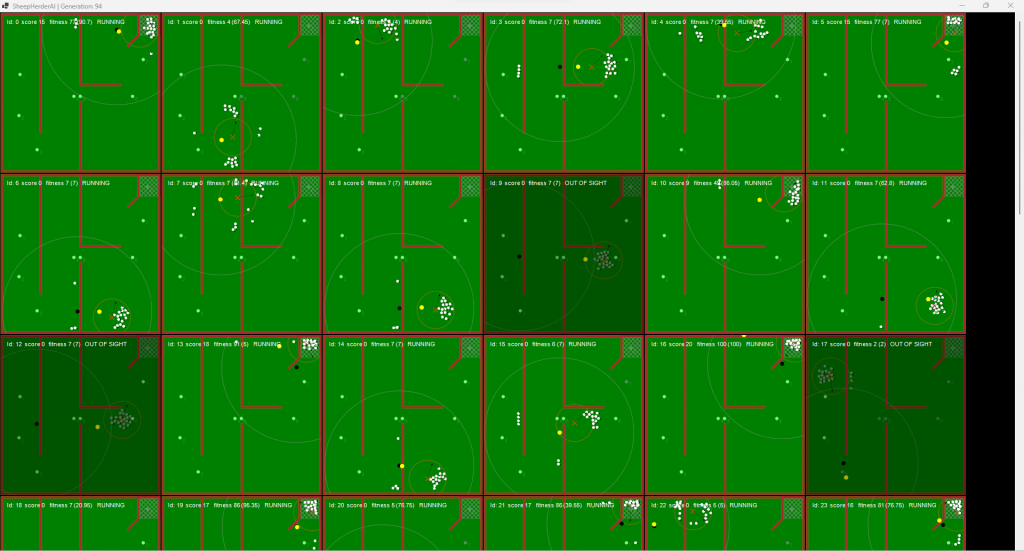

My previous approach of putting all the cars on a single track isn’t practical for flocks of sheep. It would be hugely confusing to have lots of dots for dogs, with their flocks all munged into one image. We could colour the flocks and dogs differently. However, I went for the slower but, I think, better approach: each dog and flock gets its own little minigame.

It’s nothing fancy, just a flow-layout panel containing PictureBoxes. Each flock renders itself, with its associated dog, onto an image and places it in the picture box.

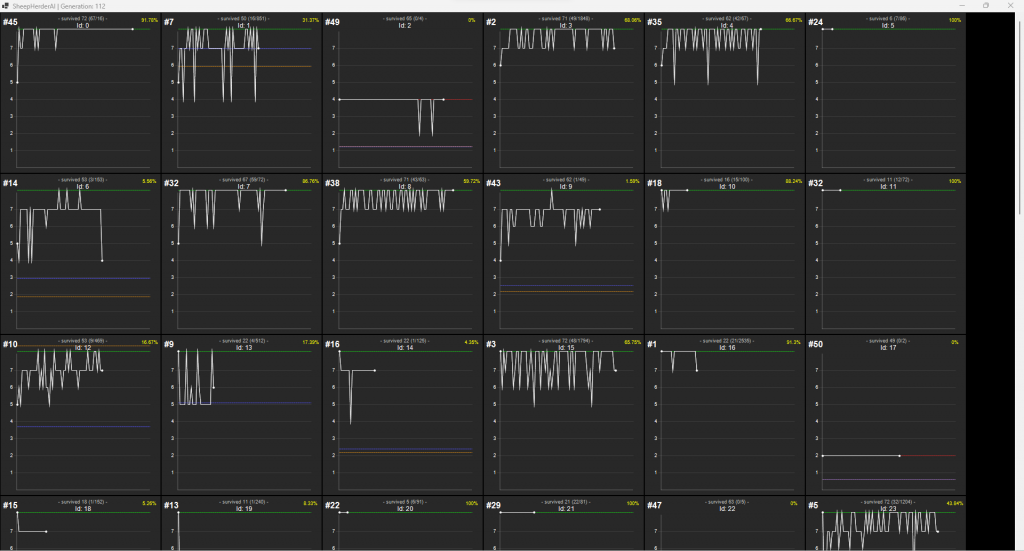

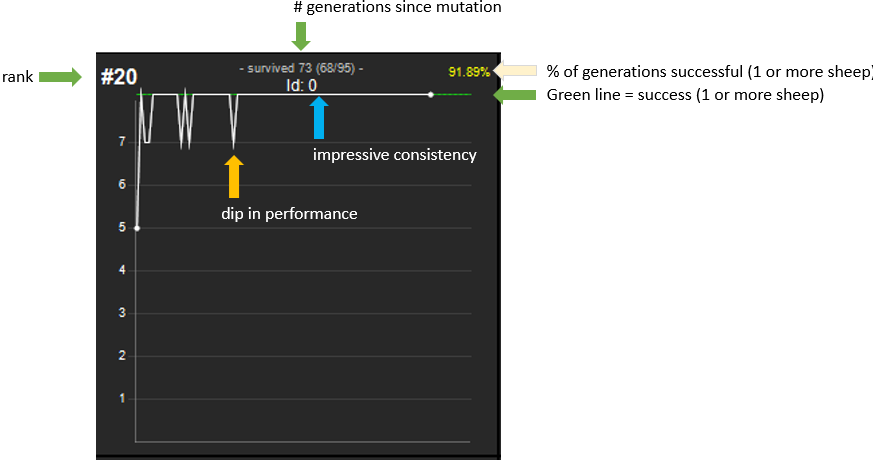

Animating 50 dogs and their flocks is not going to lead to a very performant solution, so as usual the app supports “quiet” learning (press [Q]), in which the only thing it paints is a graph for each dog showing how well it did in each generation. On my laptop, watching the sheep move surprisingly isn’t as painful as I expected.

These are the very same PictureBoxes; each just renders a graph instead.

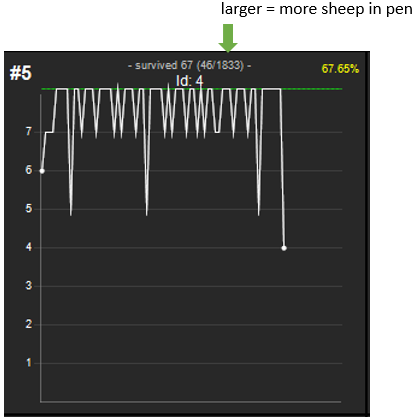

Before we dig into the details, we ought to explain the main parts of the graph:

The one below is more erratic but ranks better, which sounds a tad wrong. The important metric is the one the arrow points to. This particular score indicates a large number of sheep in the pen. In the previous screenshot, the scoring was consistent but not great, i.e. the dog put one or more sheep in the pen, but not very many.

We haven’t discussed collision detection, and there is a lot of it, against everything from other sheep to fences/walls. The sheep case is an easy one, but the fences deserve a little mention.

They are groups of lines, so we iterate over each line and use two methods, IsOnLine() and DistanceBetweenTwoPoints(). The first computes the point on the line that is closest to the point in question. The second gives the distance to that closest point. If it’s within 9 pixels, the point is close to the line. The formula behind IsOnLine is an age-old mathematical one. In the case of the sheep, we back them off when we detect a collision.

```csharp
// collision with any walls?
foreach (PointF[] points in LearnToHerd.s_lines)
{
    for (int i = 0; i < points.Length - 1; i++) // -1, because we're doing line "i" to "i+1"
    {
        PointF point1 = points[i];
        PointF point2 = points[i + 1];

        // touched wall? returns the closest point on the line to the sheep. We check the distance
        if (MathUtils.IsOnLine(point1, point2, new PointF(thisSheep.Position.X + separationVector.X, thisSheep.Position.Y + separationVector.Y), out PointF closest)
            && MathUtils.DistanceBetweenTwoPoints(closest, thisSheep.Position) < 9)
        {
            // yes, need to back off from the wall
            separationVector.X -= 2.5f * (closest.X - thisSheep.Position.X);
            separationVector.Y -= 2.5f * (closest.Y - thisSheep.Position.Y);
        }
    }
}
```
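The two helpers aren’t shown in this post, so here is a minimal sketch of how they could look. The class name `MathUtilsSketch` is made up, and the behaviour of returning false when the closest point falls beyond either end of the segment is an assumption; the real `MathUtils` in the repo may differ.

```csharp
using System;
using System.Drawing;

// Hypothetical stand-in for MathUtils; not the code from the repo.
public static class MathUtilsSketch
{
    /// <summary>
    /// Projects p onto the line through a and b. "closest" receives the projected point.
    /// Returns true only if that point lies between a and b (assumed behaviour).
    /// </summary>
    public static bool IsOnLine(PointF a, PointF b, PointF p, out PointF closest)
    {
        float dx = b.X - a.X;
        float dy = b.Y - a.Y;
        float lengthSquared = dx * dx + dy * dy;

        if (lengthSquared == 0f)
        {
            // a and b are the same point, so that point is the closest one
            closest = a;
            return true;
        }

        // t is how far along a->b the projection falls (0 = at a, 1 = at b)
        float t = ((p.X - a.X) * dx + (p.Y - a.Y) * dy) / lengthSquared;

        closest = new PointF(a.X + t * dx, a.Y + t * dy);
        return t >= 0f && t <= 1f;
    }

    /// <summary>Straight-line (Euclidean) distance between two points.</summary>
    public static float DistanceBetweenTwoPoints(PointF p, PointF q)
    {
        float dx = p.X - q.X;
        float dy = p.Y - q.Y;
        return MathF.Sqrt(dx * dx + dy * dy);
    }
}
```

With a wall from (0,0) to (10,0) and a sheep at (5,3), the closest point is (5,0) and the distance is 3, which is inside the 9 pixel threshold.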

Defining our neural network

It’s a perceptron network identical to the one we used for back-propagation, at least for the above screenshots. Because I knew it roughly worked for the previous post, it seemed a good configuration. It has the same inputs and outputs. To be fair, mutation ends up on par with hours of back-propagation a lot quicker.

This app is a framework designed to let you play with the input parameters, which I actively encourage. All configuration is in “Configuration/Config.cs”.
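For anyone new to training by mutation, here is a minimal sketch of the core step, assuming mutation means copying a network’s weights and nudging a random subset of them. The names `MutationSketch`, `mutationRate` and `strength` are invented for illustration and are not taken from the app.

```csharp
using System;

// Hypothetical illustration of weight mutation; not the code from the repo.
public static class MutationSketch
{
    /// <summary>
    /// Returns a mutated copy of the weights. Each weight is changed with probability
    /// "mutationRate", by a random amount in the range -strength..+strength.
    /// The parent's weights are left untouched.
    /// </summary>
    public static double[] Mutate(double[] weights, double mutationRate, double strength, Random rng)
    {
        double[] child = (double[])weights.Clone();

        for (int i = 0; i < child.Length; i++)
        {
            if (rng.NextDouble() < mutationRate)
            {
                // NextDouble() is 0..1, so (x * 2 - 1) maps it onto -1..1
                child[i] += (rng.NextDouble() * 2 - 1) * strength;
            }
        }

        return child;
    }
}
```

There is no gradient involved: the child is simply scored on the next flock, and it survives only if it herds better than the dogs it competes with.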

| Config Property | Description |
|---|---|
| AIHiddenLayers | Defines the layers of the perceptron network. Each value is the number of neurons in the layer. “0” indicates this will get overridden with the required value (#inputs, #outputs). e.g. { 0, 5, 10, 0 } |
| AIactivationFunctions | This defines what activation functions to use. ONE per layer. e.g. { ActivationFunctions.TanH, ActivationFunctions.TanH, ActivationFunctions.TanH, ActivationFunctions.Identity} |
| AIZeroRelativeAnglesInSensors | The problem to solve becomes more complex if one rotates the sensor (0 being direction headed). The dog can handle and remember what 0 means. true – 0 degrees indicates a specific zone (right), rotating clockwise. false – 0 degrees is the direction the dog is pointing. |
| AIusingWallSensors | true – it includes the wall sensor as a neural network input. false – the dog isn’t aware of walls/fences, it just gets “stopped”. |
| AIBinarySheepSensor | true – sheep sensor number in the quadrant is 1 if sheep are present. false – number of sheep in quadrant / total sheep or if SheepSensorOutputIsDistance==true, then the distance 0..1. |
| AIKnowsAngleRelativeToHerd | true – the AI is given the angle of the dog relative to the herd. |
| AIUseSheepSensor | true – the sheep sensor is used (either binary or distance). false – no sheep sensor is available to AI. |
| AIknowsWhereDogIsOnTheScreen | true – the AI knows its location. false – the AI reacts to sheep and direction, without knowing where it is. |
| AIknowsRelativeDistanceDogToCM | true – the AI knows its location. false – the AI reacts to sheep and direction, without knowing where it is. |
| AIknowsAngleOfDog | true – the AI knows which way the dog is pointing. false – the AI has no idea which direction the dog is pointing. |
| AIknowsDistanceToSheepCenterOfMass | true – the dog knows how close it is to the sheep (without which it may fail “out of sight”). false – the dog reacts to sensors with no real idea if it is close to sheep or not (closeness impacts sheep spread). You would think having no awareness of sheep location in this manner shouldn’t prevent it from achieving the goal, as the “sheep sensor” informs it that it is in range of sheep; however, the sensor when returning the “amount” of sheep or a binary presence doesn’t enable it to know how close. You can compensate by setting “SheepSensorOutputIsDistance”=true |
| AIknowsPositionOfCenterOfMass | true – the AI knows the centre of mass location for the sheep. |
| AISheepSensorOutputIsDistance | If true, the sheep sensor returns a number 0..1 that is proportional to the distance between dog and sheep (scaled based on the depth of vision). |
| AIknowsAngleSheepNeedToHead | true – the AI is told the angle the sheep need to go. This is equivalent to the dog learning the path. false – the AI has no idea what it is attempting, so don’t expect it to guide sheep anywhere. |
| AIknowsAngleSheepAreMoving | true – an extra parameter is given to the AI containing the angle the sheep are moving. false – the dog doesn’t know what angle the sheep are moving. |
| AIknowHowCloseItCanGetToSheep | If true, the AI knows how close to sheep it should get. |
| AIDeriveOptimalPositionForDog | If true, it will decide where it wants to put the dog (x,y) cartesian coordinates. If false, it will rotate the dog and decide an appropriate speed. This appears to work less well, like associating the angle is harder to derive. |
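To illustrate the “0 gets overridden” convention described for AIHiddenLayers, here is a small sketch of how the layer sizes might be resolved once the number of inputs and outputs is known. `LayerSketch` and `ResolveLayers` are hypothetical names, and the validation rules are my assumption, not necessarily what Config.cs does.

```csharp
using System;

// Hypothetical illustration of resolving AIHiddenLayers; not the code from the repo.
public static class LayerSketch
{
    /// <summary>
    /// Returns a copy of the configured layer sizes in which a 0 in the first slot
    /// becomes the input count and a 0 in the last slot becomes the output count.
    /// </summary>
    public static int[] ResolveLayers(int[] configured, int inputCount, int outputCount)
    {
        if (configured.Length < 2)
        {
            throw new ArgumentException("Need at least an input and an output layer.");
        }

        int[] resolved = (int[])configured.Clone();
        int last = resolved.Length - 1;

        if (resolved[0] == 0) resolved[0] = inputCount;
        if (resolved[last] == 0) resolved[last] = outputCount;

        // Assumption: every hidden layer must have at least one neuron
        for (int i = 1; i < last; i++)
        {
            if (resolved[i] <= 0)
            {
                throw new ArgumentException($"Hidden layer {i} must have at least one neuron.");
            }
        }

        return resolved;
    }
}
```

For example, { 0, 5, 10, 0 } with 8 inputs and 2 outputs resolves to { 8, 5, 10, 2 }. Since there is one activation function per layer, that configuration needs four entries in AIactivationFunctions. Bear in mind that each sensor option you switch on changes the number of inputs.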

Environmental data that impacts the learning

The results were pretty disappointing before I decided to think what the “formula” might be.

I tried all kinds of sensors and approaches, and whilst sometimes it would get sheep in the pen, it was very inconsistent. My experience is that sometimes you don’t know what is important and what is not until you experiment. For example, I thought more waypoints would equate to more accurate herding. Do they? I know directing sheep to the closest waypoint is bad, as it confuses the dog. Sometimes you need to take a step back and go back to basics.

Here it is in action, using “sensors”.

And as it pushes them into the sheep pen.

We have a number of “inputs” we can use; the above was using the sensor. You can click on it to turn the sensor on/off. Each sensor returns how far the dog is from the sheep. Because of the nature of the task, the sensors cover the full 360 degrees. You can actually see 2 sensors (the wall sensor is on the left). Form1 has a commented-out “#define TestingPointInTriangle” which, when uncommented, runs tests of the PtInTriangle() function, because I stupidly spent ages before realising my function was incorrect. I cannot emphasise enough the importance of testing things like this.

Achieving 100% accuracy (all sheep every time) is not something that I’ve seen. Each cohort of sheep, as demonstrated previously, starts in a different place, and this causes them to behave a little differently. It doesn’t take much to have them wander left of the pen or get up to other tease-the-dog games.

The problem with solving for a different set of parameters each time is how you rank/score the dogs. Imagine dog 1 got all the sheep in the pen for five consecutive flocks. It then does badly on flock 6, whereas dog 2 has a low prior score but does well with flock 6. Which one do you keep?
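A point-in-triangle check is exactly the kind of small function that is easy to get subtly wrong. Below is a minimal sketch of one common way to write it, using the sign of three cross products. The signature and the class name `TriangleSketch` are assumptions; the PtInTriangle() in the repo may take different arguments or use a different method.

```csharp
using System;
using System.Drawing;

// Hypothetical illustration of a point-in-triangle test; not the code from the repo.
public static class TriangleSketch
{
    /// <summary>
    /// Returns true if p lies inside triangle a-b-c, or exactly on one of its edges.
    /// Works whether the corners are listed clockwise or anti-clockwise.
    /// </summary>
    public static bool PtInTriangle(PointF p, PointF a, PointF b, PointF c)
    {
        float d1 = Side(p, a, b);
        float d2 = Side(p, b, c);
        float d3 = Side(p, c, a);

        bool hasNegative = d1 < 0 || d2 < 0 || d3 < 0;
        bool hasPositive = d1 > 0 || d2 > 0 || d3 > 0;

        // Inside means p is on the same side of all three edges (zero = on an edge)
        return !(hasNegative && hasPositive);
    }

    // Cross product sign tells us which side of the line q->r the point p falls on
    private static float Side(PointF p, PointF q, PointF r)
    {
        return (p.X - r.X) * (q.Y - r.Y) - (q.X - r.X) * (p.Y - r.Y);
    }
}
```

A handful of known cases makes a cheap self-test: with corners (0,0), (10,0) and (0,10), the point (2,2) should be inside, (8,8) outside, and (5,0) on an edge, which this sketch counts as inside.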

There is no perfect answer, although logically I would argue dog 1 ranks higher – because it proved consistent.

If dog 2 had just mutated from dog 1, and so has no prior score and does better than dog 1, then it might end up being the better one, or it might just have been good for this flock. You really need “time” to decide.

Rather than mutating 50% each time, we do it more selectively. In some instances, we wait to ensure the sample size is large enough before considering mutation.
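One way to express “consistency wins, and new dogs get time to prove themselves” is to rank on the average score across flocks and to only consider replacing dogs that have herded a minimum number of flocks. The sketch below assumes exactly that; `DogRecord`, `PickDogsToReplace` and the thresholds are invented for illustration and are not how the app necessarily does it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of selective mutation; not the code from the repo.
public sealed class DogRecord
{
    private readonly List<double> _scores = new List<double>();

    public string Name { get; }

    public DogRecord(string name)
    {
        Name = name;
    }

    public void AddFlockScore(double score) => _scores.Add(score);

    public int FlocksHerded => _scores.Count;

    // The average rewards dogs that do well across many flocks, not just one
    public double AverageScore => _scores.Count == 0 ? 0 : _scores.Average();
}

public static class SelectionSketch
{
    /// <summary>
    /// Picks the worst performers to overwrite with mutated copies of better dogs.
    /// Dogs with fewer than "minimumFlocks" results are skipped: their sample is too small to judge.
    /// </summary>
    public static List<DogRecord> PickDogsToReplace(IEnumerable<DogRecord> dogs, int minimumFlocks, int maxReplacements)
    {
        return dogs.Where(dog => dog.FlocksHerded >= minimumFlocks)
                   .OrderBy(dog => dog.AverageScore)
                   .Take(maxReplacements)
                   .ToList();
    }
}
```

In the dog 1 versus dog 2 scenario, dog 1’s single bad flock barely dents its average, so it is dog 2 that gets replaced, while a freshly mutated dog with only one result is left alone until it has herded enough flocks.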

Available hidden keys:

| Key | Use |
|---|---|
| P | Pauses/unpauses everything. |
| F | Framerate, cycles through speeds (slower, then back to normal). |
| S | Saves the neural networks to c:\temp\, these get loaded at startup. |
| Q | Toggles quiet learning mode. |

I hope this post inspires you to try to create your own environment and have a virtual life-form play. I know a lot of this kind of problem is very much going in the direction of reinforcement learning. Maybe I’ll add a follow-up in due course.

The source code is in the usual place on GitHub.

If you found this post interesting or have come up with your own awesome project, please let me know in the comments below.