One of the things AI/ML is good at is splitting related things into separate groups. You’ll find lots of such examples on the internet, although the ones I looked at were relatively uninteresting unless you enjoy staring at numbers.

In an attempt to make the idea more appealing I present my take on the topic.

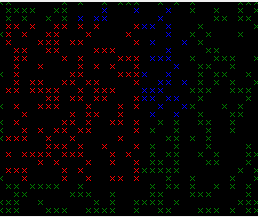

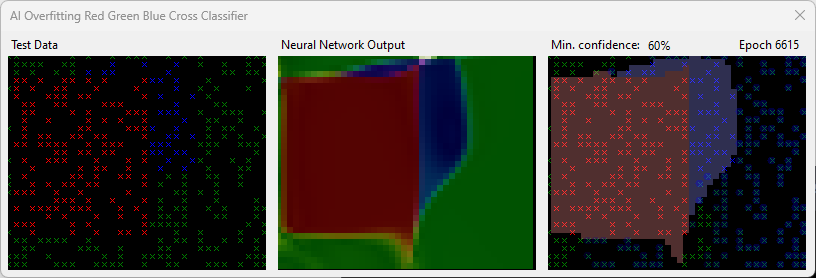

I’ve made a random example (see image). We’ve collected some data; let’s call the data points X and Y, with Z being a choice of 3 values. In this case, X & Y are coordinates, and Z is red, green or blue.

The data is plotted with the cross colour indicating Z and the location X & Y.

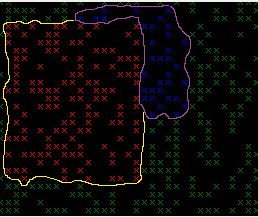

I can see distinct clusters, which I’ve hand-drawn below. So if an x & y falls in an area I marked, the colour is obvious/predictable.

Do you have a formula for me to know the colour? No. But believe it or not, and this is the crazy fun part, you can easily make one!

Using ML, we train a model on the data (the red, green & blue crosses).

If I now provide it an x & y it hasn’t seen before, it will work out which category it belongs to. I used two independent variables and one dependent variable because 2D is something I can draw; 3D is hard to visualise even if you can rotate it.
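To make "work out which category it belongs to" concrete: assuming the network has one output per colour (as the one defined later in this post does), a common way to read its answer is to pick the colour whose output is largest. The sketch below shows that idea; `ColourPicker` and `PickColour` are hypothetical names for illustration and are not part of the post's code.

```csharp
using System;

public static class ColourPicker
{
    private static readonly string[] Colours = { "red", "green", "blue" };

    // Returns the colour whose network output is the largest (arg-max).
    // Assumes the outputs are ordered red, green, blue.
    public static string PickColour(double[] outputs)
    {
        if (outputs.Length != Colours.Length)
            throw new ArgumentException("expected 3 outputs (r, g, b)", nameof(outputs));

        int best = 0;
        for (int i = 1; i < outputs.Length; i++)
        {
            if (outputs[i] > outputs[best]) best = i;
        }

        return Colours[best];
    }
}
```

For example, `ColourPicker.PickColour(new[] { 0.1, 0.8, 0.05 })` returns `"green"`, because the second output is the strongest.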

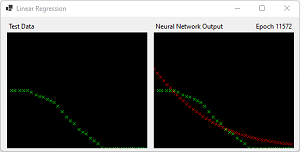

But this trick isn’t limited to 2 inputs; you can have lots of dimensions. The number of categories it splits into can be whatever you choose. I chose RGB because it makes the plot meaningful.

In the animation below, you see the neural network output (middle image) home in on a formula with each epoch. It starts “green”, and the regions start to form as back-propagation shapes the weightings and biases. The right-hand image shows the regions based on confidence.

After 6615 epochs, you can see it’s done a pretty good job. This took seconds, despite animating.

I find that pretty amazing. Despite an advanced-level mathematics education, I would not have realised that computing power and a simple algorithm could come up with a formula!

Defining the neural network (back-propagation, Perceptron, TANH)

This one is simple and, for a change, involves more than connecting the inputs straight to the outputs!

Inputs: 2 (x,y)

Hidden: 2 x 8 (two layers of 8 neurons each)

Outputs: 3 (red, green, blue)

    int[] AIHiddenLayers = new int[] { 2, 8, 8, 3 }; // 2 inputs (x,y), 2x8 hidden, 3 outputs (1=red, 1=green, 1=blue)

Those of you who follow my posts will notice that I always seem to use TANH activation rather than RELU variants. You’ll also observe that my outputs are TANH, not IDENTITY (the weighted sum plus bias, passed through without TANH’s clipping/curvature). This isn’t out of ignorance, in case it crossed your mind!

I have experimented with several different activation functions, and I like TANH. Maybe I’ll create something without it in a later post.
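To make the layer sizes and the activation concrete, here is a minimal sketch of a forward pass through a { 2, 8, 8, 3 } network with TANH on every layer, including the outputs. `TinyNetwork` and its members are hypothetical names for illustration, and the small random starting weights are an assumption; this is not the network class used in the post.

```csharp
using System;

public class TinyNetwork
{
    private readonly double[][][] weights; // [layer][neuron][input]
    private readonly double[][] biases;    // [layer][neuron], all start at 0

    public TinyNetwork(int[] layers, int seed = 1)
    {
        var rng = new Random(seed);
        weights = new double[layers.Length - 1][][];
        biases = new double[layers.Length - 1][];

        for (int l = 1; l < layers.Length; l++)
        {
            weights[l - 1] = new double[layers[l]][];
            biases[l - 1] = new double[layers[l]];

            for (int n = 0; n < layers[l]; n++)
            {
                weights[l - 1][n] = new double[layers[l - 1]];
                for (int i = 0; i < layers[l - 1]; i++)
                    weights[l - 1][n][i] = rng.NextDouble() - 0.5; // small random start (assumption)
            }
        }
    }

    // Each neuron outputs tanh(bias + sum of weight * input),
    // so every value leaving a layer lies between -1 and 1.
    public double[] FeedForward(double[] inputs)
    {
        double[] activations = inputs;

        for (int l = 0; l < weights.Length; l++)
        {
            var next = new double[weights[l].Length];
            for (int n = 0; n < next.Length; n++)
            {
                double sum = biases[l][n];
                for (int i = 0; i < activations.Length; i++)
                    sum += weights[l][n][i] * activations[i];

                next[n] = Math.Tanh(sum);
            }
            activations = next;
        }

        return activations;
    }
}
```

Calling `new TinyNetwork(new[] { 2, 8, 8, 3 }).FeedForward(new[] { 0.25, 0.75 })` returns three values, one each for red, green and blue. Before training they are meaningless; back-propagation is what shapes them.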

Training

We turn the coloured crosses into training points as follows.

    private void CreateTrainingData()
    {
        // for every red cross, we add x,y, r=1,g=0,b=0
        // for every green cross, we add x,y, r=0,g=1,b=0
        // for every blue cross, we add x,y, r=0,g=0,b=1
        // The neural network associates that x,y with one of 3 outputs. i.e. classify x,y

        // define training data based on points
        foreach (Point p in pointsCrossKeyedByColour["red"])
            trainingData.Add(new ClassifierTrainingData(x: (float)p.X / width, y: (float)p.Y / height, red: 1, green: 0, blue: 0));

        foreach (Point p in pointsCrossKeyedByColour["green"])
            trainingData.Add(new ClassifierTrainingData(x: (float)p.X / width, y: (float)p.Y / height, red: 0, green: 1, blue: 0));

        foreach (Point p in pointsCrossKeyedByColour["blue"])
            trainingData.Add(new ClassifierTrainingData(x: (float)p.X / width, y: (float)p.Y / height, red: 0, green: 0, blue: 1));
    }
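The `ClassifierTrainingData` type isn't shown in this post. Here is a minimal sketch of what it might look like, with the constructor parameters and field names inferred from how it is used in `CreateTrainingData()` and `Train()`. The field types are an assumption.

```csharp
// Hypothetical reconstruction: one training point is a normalised
// position plus a one-hot colour (exactly one of r/g/b is 1).
public class ClassifierTrainingData
{
    public readonly double inputX;      // x / width, so 0..1
    public readonly double inputY;      // y / height, so 0..1
    public readonly double outputRed;   // 1 if the cross is red, else 0
    public readonly double outputGreen; // 1 if the cross is green, else 0
    public readonly double outputBlue;  // 1 if the cross is blue, else 0

    public ClassifierTrainingData(double x, double y, double red, double green, double blue)
    {
        inputX = x;
        inputY = y;
        outputRed = red;
        outputGreen = green;
        outputBlue = blue;
    }
}
```

For example, a red cross at (100, 50) on a 400 x 200 canvas becomes inputs (0.25, 0.25) with expected outputs (1, 0, 0). Dividing by the width and height keeps the inputs in a small, consistent range regardless of the canvas size.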

On each “frame” (tick of the timer), we call “Train()”:

    private void Train()
    {
        Random randomPRNG = new(); // we don't need crypto rng for this

        int trainingDataPoints = trainingData.Count;

        // train using random points, rather than sequential.
        // WHY? in some circumstances, sequential training ends up with unstable back-propagation.
        for (int i = 0; i < trainingDataPoints; i++)
        {
            int indexOfTrainingItem = randomPRNG.Next(0, trainingData.Count); // train on random

            ClassifierTrainingData d = trainingData[indexOfTrainingItem]; // 5 elements X,Y, R,G,B

            network.BackPropagate(inputs: new double[] { d.inputX, d.inputY },
                                  expected: new double[] { d.outputRed, d.outputGreen, d.outputBlue });
        }
    }

Notice the Random? Maybe you’re puzzled by that. I found that back-propagating the data in sequential order seemed inefficient at finding the optimum biases and weightings.

I plan further investigation into whether the order of data during back-propagation does indeed impact the speed at which it trains, to prove myself right or wrong. I might cover that in a separate post.
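One thing worth knowing for that comparison: picking an index with `Next()` on every iteration samples with replacement, so in a single pass some points can be trained on twice and others not at all. A common alternative, sketched below, is to shuffle the indices (Fisher-Yates) so that every point is visited exactly once per pass, in random order. `TrainingOrder` and `ShuffledIndices` are hypothetical names and are not part of the post's code.

```csharp
using System;

public static class TrainingOrder
{
    // Returns the numbers 0..count-1 in a random order (Fisher-Yates shuffle).
    public static int[] ShuffledIndices(int count, Random rng)
    {
        int[] indices = new int[count];
        for (int i = 0; i < count; i++) indices[i] = i;

        for (int i = count - 1; i > 0; i--)
        {
            int j = rng.Next(0, i + 1); // upper bound is exclusive, so j is in 0..i
            (indices[i], indices[j]) = (indices[j], indices[i]);
        }

        return indices;
    }
}
```

Inside `Train()`, the loop could then become `foreach (int index in TrainingOrder.ShuffledIndices(trainingData.Count, randomPRNG))`, with `trainingData[index]` as the item to back-propagate.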

Showing the smooth gradient RGB is merely a case of walking down each x,y and asking the neural network for the r,g,b output:

    for (int x = 0; x < width; x += step)
    {
        for (int y = 0; y < height; y += step)
        {
            double[] result = network.FeedForward(inputs: new double[] { (float)x / width, (float)y / height });

            heatMapOfNeuralNetworkOutputForEveryPoint.Add(new int[] {
                x, y,
                ScaleFloat0to1Into0to255(result[0]),   // R
                ScaleFloat0to1Into0to255(result[1]),   // G
                ScaleFloat0to1Into0to255(result[2]) }); // B
        }
    }
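`ScaleFloat0to1Into0to255` isn't shown in this post. Here is a minimal sketch of what such a helper might do, assuming it clamps first (a TANH output can dip below 0) and then scales to a 0..255 colour channel. The wrapper class `ColourScaling` is a hypothetical name.

```csharp
using System;

public static class ColourScaling
{
    // Hypothetical reconstruction: maps 0..1 onto 0..255,
    // clamping anything outside that range to the nearest end.
    public static int ScaleFloat0to1Into0to255(double value)
    {
        double clamped = Math.Clamp(value, 0.0, 1.0);
        return (int)Math.Round(clamped * 255);
    }
}
```

With this version, an output of 1 gives a fully lit channel (255), while 0 or any negative output gives 0, so a point the network is confident about shows up as a nearly pure red, green or blue.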

This example shows a practical use of a neural network. I find the fact that it is mathematically possible really cool, and that’s why I included it.

You will find the code for it on GitHub.

I hope you found this tiny example of what can be achieved an interesting post. Either way, let me know in the comments. If you have a related fun idea you’d like me to write up, please let me know.