If you don’t like thinking about war please go read one of my less close-to-home blog entries.

During the tail end of the last Cold War, I lived next to an air-force base and remember learning what MAD was from an early age.

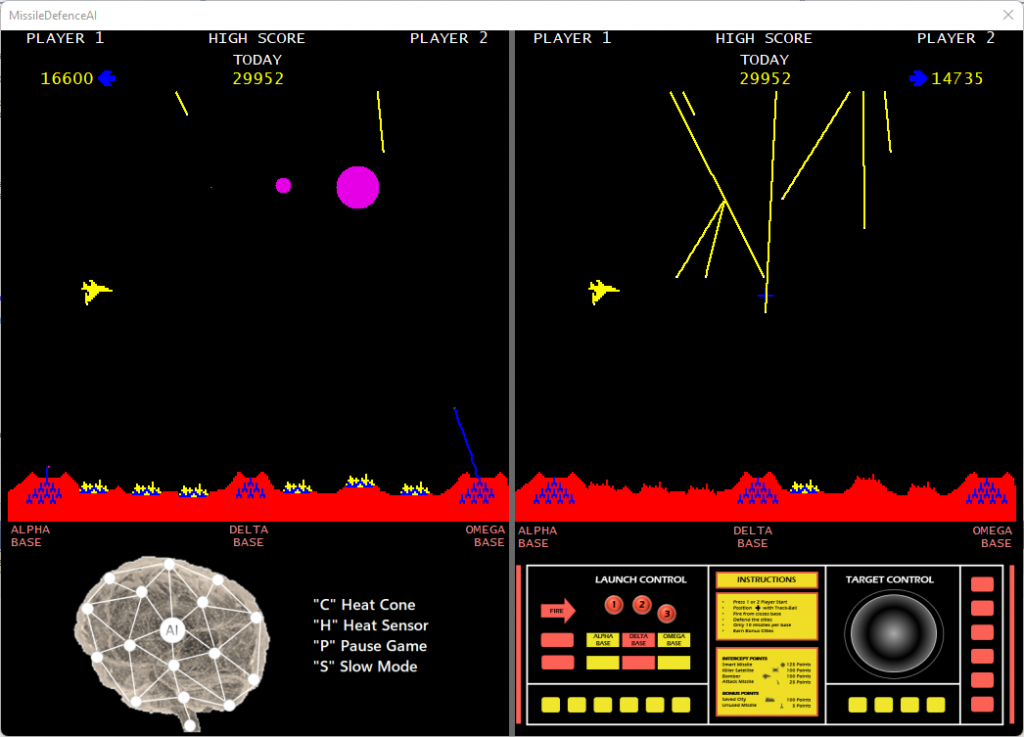

Back in 1980 Atari used that danger to create the addictive game called Missile Command simulating what we all hope will never happen – inbound ICBMs; offering us a chance to save the world with a limited supply of anti-ballistic missiles (ABM) to stop them. The game starts off sedate and before long all hell breaks loose.

Even with aim-fire-forget, as the pressure ramps up before long mistakes are made, ICBMs break thru, and panic leads ultimately to human failure.

In a nod to a childhood favourite, I thought why not attempt ML to guide the missiles akin to a Stingers’ infrared homing to track and destroy planes/helicopters?

If you want to play it (it supports two player simultaneously), you’ll find in GitHub. Don’t expect to beat it!

Please don’t confuse the ability of the computer to win with any form of reinforced learning. This is where I appreciate the strides in that area and I can see how an AI that learns a game is awesome, but I don’t need AI. We simply need machine learning to perform a specific part.

I’d like to shout out to Andy McFadden at https://6502disassembly.com/va-missile-command/ for the superb 6502 disassembly of the original ROMs. It’s helpful knowing the size of the screen, when new bases are awarded, the order missiles disappear etc. I recommend taking a look if you’re curious.

Please note:

I do not claim 100% authenticity in game play – I didn’t use the ROM as the basis for my code (as you will see). For example, it doesn’t implement smart bombs (the annoying things that dodge missiles), primarily because dodging won’t fool the ML.

To make the game more fun…

- the AI is stopped from targeting the “bombers” (so it increases the chance of running out of missiles and lose bases; this occurs as the “banana” shots required after often impossible due to the next point)

- it launches missiles upwards, rather than aim and track preventing them from hitting some lower targets

- both players get the same “wave” of ICBMs which means they have to be pre-computed; whereas the original game looks at what is on screen and decides when to split ICBMs (known as MIRVs).

- the blast radius for the human player is much bigger (so less accuracy required)

- whilst shocking when you get thrashed, the ML was intentionally not trained to be 100% accurate. I’ll explain implementing 100% accurate missiles that handle wind and gravity in a follow-up posting.

Behind The Scenes

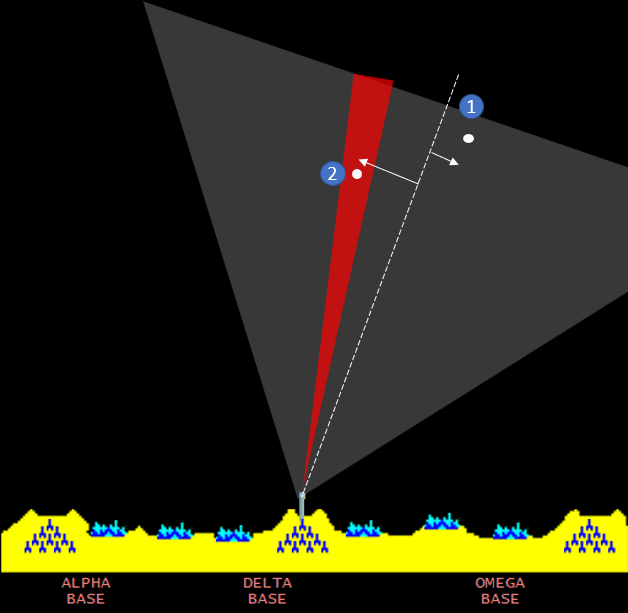

As the blue ABMs streak up the sky you will notice there is a grey triangle, this is the heat “sensor” that the ABM is using to track it’s target.

There is also a red triangle, which you will notice contains the white dots of the ICBM. This indicates which of the sensors has spotted the ICBM.

As it’s running at normal speed it’s hard to fully appreciate that the missiles are curving – remember it is adjusting the ABM in real-time against a moving object (ICBM).

Targeting

You’re probably thinking there is an easier way, Dave.

We know the (x,y) of both the ICBM and the ABM, one can use Bresenham’s line algorithm to guide it. And you’re 100% correct, alas we want a modicum of “realism”. You don’t think Stingers know the x,y(,z) of their target – do you? If only that were the case, we could save ourselves from unwanted inbound hypersonic missiles.

I particularly liked Andy McFadden’s commentary about lines

The missile trails aren’t plotted with the standard Bresenham line-drawing algorithm. Instead, the game computes the distance from the missile’s entry point to its target, then divides the X and Y components by the distance to get a fractional increment (held as a fixed-point signed 8.8 value). Every time the missile moves, the increments are added, and the new point is plotted. The resulting lines can look a little ragged.

The missile won’t necessarily strike the exact target, due to various inaccuracies in the math: the distance is calculated with an approximation; the distance is capped at 255; and minor errors in the fixed-point increment add up over time. The game handles this by advancing the missile until it moves past the target in the X or Y axis. This effect isn’t noticeable in-game, suggesting that “close enough” is fine for horseshoes, hand grenades, and nuclear weapons.

Andy McFadden

In my implementation, Bresenham’s is used for the CPU player. Consistent with the original, humans position the “x” crosshair where they want the missile to go to, and the ABM plots the path from base to the “x” arriving a second or so later. Quite cool? It is also how the ICBMs steer to their pre-decided (wave creation) target.

Implementing a Sensor

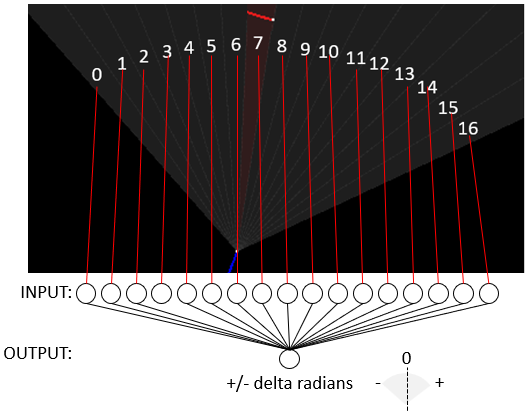

I know what an infrared sensor is but don’t ask me about the characteristics, how sensitive the detectors are, over what region they detect. I suspect if you put them next to each other the readings will overlap from the infrared source, so I’m sure it’s harder than it looks; add to it wind blowing the heat etc. We’ll therefore make a simple detection device, with 17 sensors – each able to detect if the ICBM is within their “triangle”.

The code for the sensors is below. The neural network isn’t WOPR from WarGames, each ABM has its own 17 sensors plus a tiny brain; once launched it is primed for a specific ICBM. Having something deciding to target such that it takes out multiple ICBMs is a fantasy in real life, so we don’t try.

We sweep from left to right, each “sensor” is a triangle with the ABM at the bottom. Using simple maths it determines if the ICBM is within the triangle setting it to a value that indicates how much left or right whilst also taking into consideration distance. And if it is not it puts “-2” as an output. The sensor works but is sub-optimal, please see the HITile post.

using MissileDefence.Configuration;

using System;

using System.Collections.Generic;

using System.Diagnostics;

using System.Drawing;

using System.Drawing.Drawing2D;

using System.Linq;

using System.Security.Cryptography;

using System.Text;

using System.Threading.Tasks;

namespace MissileDefence.Defenders.Sensors;

/// <summary>

/// An infra-red left..right sensor

/// </summary>

internal class IRSensorLeftRight

{

internal static float c_nolock = -2;

/// <summary>

/// true indicates ICBM is within the sensor.

/// </summary>

internal bool WithinCone = false;

/// <summary>

/// Points that make up the sensor sweep triangle. So we can plot it.

/// </summary>

private readonly List<PointF[]> heatSensorSweepTrianglePolygonsInDeviceCoordinates = new();

/// <summary>

/// Points that make up the sensor target triangle. So we can plot it.

/// </summary>

private readonly List<PointF[]> heatSensorTriangleTargetIsInPolygonsInDeviceCoordinates = new();

/// <summary>

/// Returns TRUE if the target is within the sweep.

/// </summary>

internal bool IsInSensorSweepTriangle

{

get

{

return heatSensorSweepTrianglePolygonsInDeviceCoordinates.Count > 0;

}

}

/// <summary>

/// Resets the heatmap debug images.

/// </summary>

internal void Clear()

{

heatSensorSweepTrianglePolygonsInDeviceCoordinates.Clear();

heatSensorTriangleTargetIsInPolygonsInDeviceCoordinates.Clear();

}

/// <summary>

/// Read the infrared sensor to detect a SINGLE "ICBM".

/// Whilst "we" know the location, the sensor plays dumb. I cannot simulate a real heat sensor despite

/// warming my GPU up running this code. What's important is that the neural network cannot cheat.

/// </summary>

/// <param name="angleCentreToSweep">Where the ABM is pointing</param>

/// <param name="objectMCLocation">Where the ABM is on screen.</param>

/// <param name="targetMCLocation">Where the ICBM is on screen.</param>

/// <param name="heatSensorRegionsOutput">Sensor output.</param>

/// <returns></returns>

internal double[] Read(double angleCentreToSweep, PointA objectMCLocation, PointA targetMCLocation, out double[] heatSensorRegionsOutput)

{

heatSensorTriangleTargetIsInPolygonsInDeviceCoordinates.Clear();

heatSensorSweepTrianglePolygonsInDeviceCoordinates.Clear();

WithinCone = false;

heatSensorRegionsOutput = new double[(int)Settings.s_sensor.SamplePoints];

// e.g

// input to the neural network

// _ \ | / _

// 0 1 2 3 4

//

double fieldOfVisionStartInDegrees = Settings.s_sensor.FieldOfVisionStartInDegrees;

// _ \ | / _

// 0 1 2 3 4

// [-] this

double sensorVisionAngleInDegrees = Settings.s_sensor.VisionAngleInDegrees;

// _ \ | / _

// 0 1 2 3 4

// ^ this

double sensorAngleToCheckInDegrees = fieldOfVisionStartInDegrees - sensorVisionAngleInDegrees / 2 + angleCentreToSweep;

// how far ahead we look. Given this could be diagonal across the screen, it needs to be sufficient

double DepthOfVisionInPixels = 700; // needs to cover whole screen during training

for (int LIDARangleIndex = 0; LIDARangleIndex < Settings.s_sensor.SamplePoints; LIDARangleIndex++)

{

// -45 0 45

// -90 _ \ | / _ 90 <-- relative to direction of missile, hence + angle missile 3is pointing

double LIDARangleToCheckInRadiansMin = MathUtils.DegreesInRadians(sensorAngleToCheckInDegrees);

double LIDARangleToCheckInRadiansMax = LIDARangleToCheckInRadiansMin + MathUtils.DegreesInRadians(sensorVisionAngleInDegrees);

/* p1 p2

* +--------+

* \ /

* \ / this is our imaginary "heat sensor" triangle

* \ /

* \/

* abmLocation

*/

PointA p1 = new((float)(Math.Sin(LIDARangleToCheckInRadiansMin) * DepthOfVisionInPixels + objectMCLocation.HorizontalInMissileCommandDisplayPX),

(float)(Math.Cos(LIDARangleToCheckInRadiansMin) * DepthOfVisionInPixels + objectMCLocation.AltitudeInMissileCommandDisplayPX));

PointA p2 = new((float)(Math.Sin(LIDARangleToCheckInRadiansMax) * DepthOfVisionInPixels + objectMCLocation.HorizontalInMissileCommandDisplayPX),

(float)(Math.Cos(LIDARangleToCheckInRadiansMax) * DepthOfVisionInPixels + objectMCLocation.AltitudeInMissileCommandDisplayPX));

heatSensorSweepTrianglePolygonsInDeviceCoordinates.Add(new PointF[] { objectMCLocation.MCCoordsToDeviceCoordinates(),

p1.MCCoordsToDeviceCoordinates(),

p2.MCCoordsToDeviceCoordinates() });

if (MathUtils.PtInTriangle(targetMCLocation, objectMCLocation, p1, p2))

{

WithinCone = true;

double mult;

if ((int)(Settings.s_sensor.SamplePoints - 1) / 2 == LIDARangleIndex)

{

// favour center

mult = 0;

}

else

{

double dist = MathUtils.DistanceBetweenTwoPoints(objectMCLocation, targetMCLocation);

// mult

// 0 = +2

// 1 = +1 }

// 2 => 0 } Sample Points = 5.

// 3 = +1 }

// 4 = +2

mult = Math.Abs(LIDARangleIndex - (Settings.s_sensor.SamplePoints - 1) / 2F);

mult /= (Settings.s_sensor.SamplePoints - 1) / 2F;

mult *= 220 / dist;

}

heatSensorTriangleTargetIsInPolygonsInDeviceCoordinates.Add(new PointF[] { objectMCLocation.MCCoordsToDeviceCoordinates(),

p1.MCCoordsToDeviceCoordinates(),

p2.MCCoordsToDeviceCoordinates()

});

heatSensorRegionsOutput[LIDARangleIndex] = mult;

}

else

{

heatSensorRegionsOutput[LIDARangleIndex] = c_nolock; // no target in this direction

}

// _ \ | / _ _ \ | / _

// 0 1 2 3 4 0 1 2 3 4

// [-] from this [-] to this

sensorAngleToCheckInDegrees += sensorVisionAngleInDegrees;

}

// return where we sensed the ICBM if present

return heatSensorRegionsOutput;

}

/// <summary>

/// Draws the full triangle sweep range.

/// +--------+

/// \ /

/// \ / this is our imaginary "heat sensor" triangle

/// \ /

/// \/

/// ABM

/// </summary>

/// <param name="g"></param>

/// <param name="triangleSweepColour"></param>

internal void DrawFullSweepOfHeatSensor(Graphics g, Color triangleSweepColour)

{

using SolidBrush brushOrange = new(triangleSweepColour);

foreach (PointF[] point in heatSensorSweepTrianglePolygonsInDeviceCoordinates) g.FillPolygon(brushOrange, point);

}

/// <summary>

/// Draws the region of the sweep that the target is in.

/// +---++---+

/// \ || /

/// \ || / hopefully the center strip

/// \||/

/// \/

/// ABM

/// </summary>

/// <param name="g"></param>

internal void DrawWhereTargetIsInRespectToSweepOfHeatSensor(Graphics g, SolidBrush sbColor)

{

// draw the heat sensor

foreach (PointF[] point in heatSensorTriangleTargetIsInPolygonsInDeviceCoordinates) g.FillPolygon(sbColor, point);

}

}

Logically (below) to hit ICBM #1 the ABM needs to steer right a little, and to hit ICBM #2 the ABM needs to steer left more than twice as much.

The ML has to learn to associate the illumination of a sensor with the amount of deflection required. The deflection has to be corrected throughout the flight until impact.

The code to guide the ABM (using the sensor) is below. Worth noting is that the speed is accelerating for the duration of the flight, and that sine and cosine are intentionally reversed because we want 0 degrees upwards:

/// <summary>

/// Moves the rocket (applying velocity, and acceleration via AI).

/// </summary>

internal override void GuideMissile()

{

burnForce = 5;

// OUTPUT: angle

double[] neuralNetworkInput = IRsensor.Read(AnglePointingDegrees, LocationInMCCoordinates, PointA.DeviceCoordinatesToMC(activeTarget.LocationInDeviceCoordinates), out double[] lastHeatSensorArray); // heat LIDAR input

LastHeatSensorOutput = new(lastHeatSensorArray);

// ask the neural to use the input and decide what to do with the rocket

OutputFromNeuralNetwork = NeuralNetwork.s_networks[Id].FeedForward(neuralNetworkInput); // process inputs

// adjustment of angle

OffsetAngleOfThrustInDegrees = OutputFromNeuralNetwork[0] * Settings.s_aI.AIthrusterNozzleAmplifier; //degrees

// stop it going too quick

if (Speed > 14) burnForce *= 0.9;

if (Speed > 16) burnForce *= 0.8;

Speed += burnForce * 0.003F;

angleABMIsPointing += OffsetAngleOfThrustInDegrees * 0.05;

angleABMIsPointing = MathUtils.Clamp360(angleABMIsPointing);

double angleInRadians = MathUtils.DegreesInRadians(angleABMIsPointing);

x += Math.Sin(angleInRadians) * Speed;

y += Math.Cos(angleInRadians) * Speed;

LocationInMCCoordinates = new PointA((int)x, (int)y);

if (EliminatedBecauseOf is null && !IRsensor.IsInSensorSweepTriangle)

{

EliminatedBecauseOf = "nolock"; // lost sight of target

}

}

Which ABM Silo engages the ICBM?

Clearly, one that isn’t destroyed, and has some remaining ABMs to fire. But which one do we choose?

Maybe if I took reinforced learning seriously and used ML from the graphics it could decide this itself. I think if I was going down the path of the computer deciding which, I would teach a separate neural network the task of knowing what to engage and learn “why”.

The current approach is to fire a missile from the closest silo.

Is this the best strategy? It depends and maybe were we to try thousands of games, we could answer this empirically…

- If you pick the closest, you are less likely to miss. It’s when the ICBM is dropped from a flier low down you have a problem.

- Conversely, if you were to balance the ABMs across silos (knowing that generally, it will hit the target regardless of which silo it launched from) you have less risk of running out of ABMs on the side you need to hit flier bombs.

- Maybe a hybrid. If the target is low down, fire from the closest else spread evenly…

It’s always a gamble as you never know where the next missiles are coming from…

The following MoveTrackBallAndFire() is the CPU controller. It walks down all the ICBMs in the wave, and if they are not eliminated, are on screen and no ABM launched already for it (tracking) then it launches the ABM from the closest silo.

foreach (ICBM icbm in wave.ICBMs)

{

// offscreen cannot be defended against, and we don't want to waste multiple against same target

if (icbm.IsEliminated ||

// fairness, imposed on user

icbm.LocationInDeviceCoordinates.Y > 372 ||

icbm.LocationInDeviceCoordinates.Y < 50 ||

icbm.LocationInDeviceCoordinates.X < 16 ||

icbm.LocationInDeviceCoordinates.X > 494 ||

AlreadyTracking(icbm)) continue;

ABMSilo? optimalABMBase = null;

float minDist = int.MaxValue;

foreach (ABMSilo abmBase in Silos)

{

if (abmBase.IsDestroyed || abmBase.ABMRemaining == 0) continue; // no missiles to fire

// find the closest.

PointF p = abmBase.LocationInMCCoordinates.MCCoordsToDeviceCoordinates();

float dist = MathUtils.DistanceBetweenTwoPoints(p, icbm.LocationInDeviceCoordinates);

if (dist < minDist)

{

minDist = dist;

optimalABMBase = abmBase;

}

}

if (optimalABMBase == null) continue; // no base has missiles.

listOfICBMsBeingAttacked.Add(icbm);

double launchAngle = ABMSilo.LaunchAngle(optimalABMBase, icbm);

int index = -1;

for (int slot = 0; slot < cpuControlledABMsIndexById.Count; slot++)

{

if (cpuControlledABMsIndexById[slot].IsEliminated)

{

index = slot;

break;

}

}

if (index == -1)

{

cpuControlledABMsIndexById.Add(cpuControlledABMsIndexById.Count, new(cpuControlledABMsIndexById.Count, optimalABMBase, icbm, launchAngle, SharedUX.s_uxColorsPerLevel[GameController.s_UXWaveTheme].ABMColour, CallbackIfHitTarget));

}

else

{

cpuControlledABMsIndexById[index] = new(index, optimalABMBase, icbm, launchAngle, SharedUX.s_uxColorsPerLevel[GameController.s_UXWaveTheme].ABMColour, CallbackIfHitTarget);

}

optimalABMBase.ABMRemaining--;

}

But how do we train it to target ICBMs?

That’s where I came a little unstuck.

Off the back of my success with cars that steer even when real physics are applied and tanks were happy – both used genetic mutation. I got a little ahead of myself.

Genetic mutation seemed like a sound idea. I just need to weed out “missiles” that “miss” from those that “hit”. Sounds like a plan? Well, no. It worked well enough in the end, but is a very poor approach as you’ll notice if you look at my “HITiles” post…

I started with 30 ABMs for each generation.

If you’ve never tried mutation as an approach the logic works like this:

- Make 30 neural networks with random bias + weightings

- While not bored

- Create a random target

- Create 30 ABMs each using 1 of the 30 “neural networks”

- Play the “game” (ABMs move based on their neural network output)

- Keep the 15 best (half of 30), we rank them by how close they were to the target

- Replace the 15 worst with a clone of the 15 best, but to get it to learn we “mutate” them slightly after cloning.

- Save the “neural networks”.

The theory behind it is that by discarding the worst, over time the best should get better. Often that is true.

In this case theory and practice appear to have different ideas of what should happen.

Here’s an example during training. You can see lots of different coloured missiles all doing their own thing – because they are all attached to a different neural network.

Impressive as the banana shots are, they leave me feeling the neural networks are not very smart, although the target is moving they should orient themselves sooner and head straight-ish.

I also find the mutation thing annoying to watch. Let’s say you have 30 missiles, and you discard 15 (50% worst) the new cohorts (cloned off the best) can end up even worse than the one they replace. Why? Because the mutation is modifying neurons at random adjusting weight and bias; that change could be also detrimental in the same way if we cloned you, and changed something that enables the heart to form correctly.

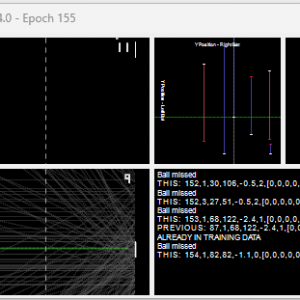

Although you can’t see above, the screen is split into 2. On the human player’s window (they cannot play whilst training) it outputs debug telemetry as shown below.

The top is accuracy, how many get mutated each time, which generation and the number of the training data point. Beneath that are the statistics for those neural networks. It’s useful to see progress.

A number of keys can be used during training, “S” turns everything into slow motion, CTRL-S saves the model (don’t forget to do this after sufficient training!). “Q” makes it train super speedy. There are also cool things like “sensor cone” and “heat sensor” so you can watch it targeting.

Please note: training mode appears when it fails (intentionally or not) to find the .ai training files. Temporarily remove them if you want this to see it happen.

In the main 2/3rds is one line per neural network. The “x” indicates the position of the ICBM within the 17 sensors; NN is the neural network, “gim”: is the gimbal (angle used in thrust vectoring) and the last bit calls out the result (hit/nolock).

Observations & Lessons

Behind every success is a lot of failures, and this didn’t work the first time. It took a lot of learning and experimentation. The finished game was worth it.

Inputs to Neural Network

- It’s easy to not fully understand which inputs help solve the problem, even now the use of distance and deflection size hampers rather than helps. More is not necessarily better.

- You must ensure the data you feed into ML is correct, and that the output is interpreted correctly.

Neural Network Configuration

- I tried with lots of neurons thinking more is better; it isn’t necessarily the case. I tweaked layers, and it didn’t learn quicker or be better for having lots of hidden neurons or layers

Neural Network Mutation

- The mutation is hampered by the random seeding of bias/weights and there is an element of “luck” that you happen upon an optimum neural network from which to clone. Training would sometimes get stuck with a low 40% accuracy.

- I chose 30 missiles, where 15 are kept and a similar number “cloned and mutated”. The lower the number the higher chance good ones will get mutated and made worse. If I had used 300, and just one repeatedly hit the target then each mutation would copy that behaviour resulting in better or worse, but without destroying the one that previously worked; eventually one will be found that beats the best. It is necessarily practical if you’re doing it visually (300 missiles might chug along slowly).

Neural Network Training Data

- The “random” training data that I initially used failed to yield the correct result in a time-efficient manner. We’re trying to solve a very specific behaviour. It isn’t helped if by training it to “aim left” you then also expect it to “aim right”. It ends up favouring left-aiming missiles that don’t stand a chance when you change the objective to right. After hours spent trying to come up with training data that prevents it from specialising, I opted on forcing it to alternate sides each generation, as well as high and low.

Fitness and Scoring

- Of the top X neural networks, is one always better than the other? Do you copy the best one to all missiles for the actual game? In fact during mutation how do you judge, missile 1 might have been better for 25 goes, but got beat by missile 2 this time. I use running totals to try to handle this. I’ve reduced the %age that mutates (making it to be less likely to be discarded).

- Scoring the “fitness” is key. Deciding whether to retain a network that worked for more historical runs but failed this time is most definitely something to think about carefully otherwise you discard a perfectly good network for one bad run, even if it worked flawlessly for the prior 100. This is a big deal because the one that replaced it might be a 1 hit scenario.

Throughout my apps you’ll notice that I tend to implement functionality to “Pause” and “Slow” making it easier to see what’s happening. I regularly include debug options such as enabling a visual for the sensor. I’ve made sensors before that didn’t quite work as I intended. With visual confirmation, you are more likely to succeed, plus it can look quite cool!

If you have a Windows PC, go download it, compile and play!

Missile Command was a trademark of Atari; the rights to their software I believe is currently with “Warner Bros. Interactive Entertainment.”. There is no intent by the author to infringe or violate their rights with this posting, or by the ML version in C#.