Conclusion

No balls or stick persons were harmed in the making of this article, as far as I can tell. There were times I wished they had been.

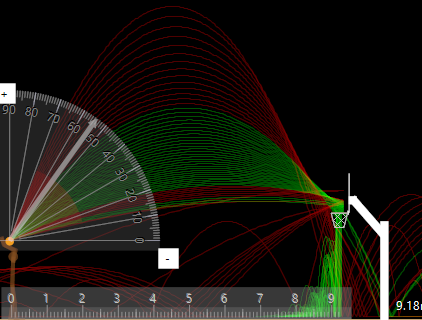

It works. Abcde is smart enough to throw the ball from anywhere and will kick your butt. Non-savants cannot compete. The relationship is not simple, so for a human, competing is a “remember” game.

A 5x5x5 hidden neural network won’t solve it for all distances/angles, or at least not within any reasonable timeframe. The big question is why? Does it need more layers, or more neurons in general?

8x8x8 hidden layers hit 98.96% then got stuck there… But sometimes with these things, it comes down to the luck of the random initial weights. 10x7x3 works too. But none of them ever seem to reach 100%. This project reminded me of the importance of consistent data: to begin with, my training data included bounces, which made it take more effort to converge.

That’s despite ADAM, Cosine Annealing and SELU. If you are unsure what these are and why I use them, see my previous post about temperature prediction in Kyiv.

If all we wanted was the force for an integer distance/angle, AI seems a dumb way to get it. Generating the training data already produces all the answers! Cache the training data and look up the force (that’s what training does anyway).

Whilst a lookup table works, what if we want the answer to within 0.25m?

Given that integer distances require 1882 training rows, quarter-metre steps need only 4x that (about 7,500 rows), which is nothing of concern for modern computers.

At 0.1m accuracy, it requires roughly 19,000 data points, again hardly of concern. Now let’s make the angles accurate to 0.1-degree increments, which requires roughly 190,000. I still would not baulk at making a mapping table of it. I would happily create a 3GB mapping if accuracy were important (and hardware weren’t constrained), _if_ there weren’t a better way. A lookup to get an accurate answer is better than a “prediction” (unless the prediction is a formula known to be correct for all inputs).
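The row counts above follow from simple scaling, which can be sketched as below. This assumes the row count grows in inverse proportion to each step size, starting from the 1882 rows quoted for integer steps; `table_rows` is a hypothetical helper, not code from the project.

```python
BASE_ROWS = 1882  # rows quoted in the article for integer distances and angles


def table_rows(distance_step_m=1.0, angle_step_deg=1.0):
    """Estimate lookup-table rows, assuming the count scales
    inversely with the distance step and the angle step."""
    return round(BASE_ROWS / distance_step_m / angle_step_deg)


print(table_rows(0.25))      # quarter-metre distance steps
print(table_rows(0.1))       # 0.1 m distance steps
print(table_rows(0.1, 0.1))  # 0.1 m and 0.1 degree steps
```

The three calls give 7528, 18820 and 188200 rows, which match the rounded figures in the text.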

The problem with a lookup table comes when you are asked for an angle or distance that is not in it.
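A minimal sketch of that failure mode, under loud assumptions: `standin_force` is a placeholder (ideal, drag-free launch speed) standing in for the article's simulator, and the distance and angle ranges are made up for illustration. Only the lookup behaviour matters here.

```python
import math


def standin_force(distance_m, angle_deg):
    """Placeholder for the real simulator (an assumption, NOT the
    article's physics): ideal drag-free launch speed for a given range."""
    g = 9.81
    return math.sqrt(distance_m * g / math.sin(math.radians(2 * angle_deg)))


# Hypothetical table: integer distances 1..50 m, integer angles 10..80 degrees.
table = {
    (d, a): standin_force(d, a)
    for d in range(1, 51)
    for a in range(10, 81)
}


def lookup_force(distance_m, angle_deg):
    """Return the cached force, or None if that exact key was never stored."""
    return table.get((distance_m, angle_deg))


print(lookup_force(10, 45))    # stored key: returns a value
print(lookup_force(10.5, 45))  # between rows: returns None
```

Any distance or angle that falls between the stored rows simply has no entry, however large the table is made.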

You can’t simply pick the two nearest values and average them, as the force isn’t linear. In circumstances like this, having a formula from learning the relationship is attractive. It is also more practical for low-end / embedded devices. For example, if it were a robot using an Arduino microcontroller, it couldn’t store a 3GB mapping table.

It’s worth questioning how the size of the training data affects accuracy.

We can train a neural network on data in 5-degree and 10m steps. It still works (just 42 data points), but not without an unfortunate downside. It trains to 100%, but some angle/distance combinations in between won’t work because the formula it derives isn’t quite accurate enough.

Whilst it might be cool to achieve 100%, that means 100% accuracy on the data points trained on, not on the data points in between, as is evident above.

An 8-8-8 neural network occupies a mere 19KB for ALL angles/distances – far more practical. If that needed to shrink further, we could convert the JSON text into a binary format.
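To get a feel for how much a binary format might save, here is a rough parameter count. It assumes a fully connected network with 2 inputs (distance, angle) and 1 output (force) around the three hidden layers of 8; those input/output sizes are my assumption, and `count_parameters` is a hypothetical helper, so treat the numbers as a ballpark only.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network."""
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Assumed shape: 2 inputs, three hidden layers of 8, 1 output.
layers = [2, 8, 8, 8, 1]
params = count_parameters(layers)
bytes_float32 = params * 4  # 4 bytes per 32-bit float

print(params)         # 177
print(bytes_float32)  # 708
```

Under those assumptions the raw weights and biases come to well under 1KB as 32-bit floats, so most of the 19KB would be JSON text overhead. The real file may hold extra metadata, so the actual saving could differ.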

You can find the source code for this on GitHub.

I hope it piqued your interest. If so, please leave a comment.